Say, i have some dask dataframe. I'd like to do some operations with it, than save to csv and print its len.

As I understand, the following code will make dask to compute df twice, am I right?

df = dd.read_csv('path/to/file', dtype=some_dtypes)

#some operations...

df.to_csv("path/to/out/*")

print(len(df))

It is possible to avoid computing twice?

upd.

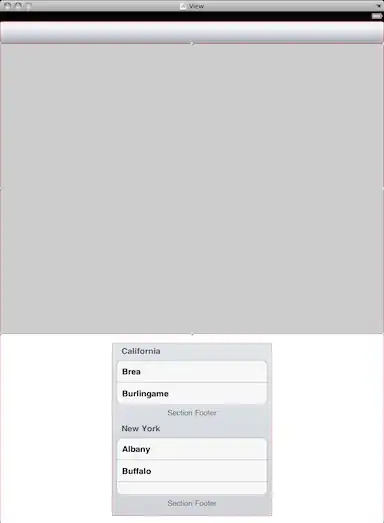

That's what happens when I use solution by @mdurant

but there are really almost 6 times less rows