I am using a 3-node Spark cluster to read data from a single-node MongoDB instance and index it into a 3-node Elasticsearch cluster. Each Spark and Elasticsearch node has 16 GB of RAM and 4 cores. I am using PySpark.

Following case 1 of this link, I have 2 executors, each with 3 cores and 7520 MB of memory. The Spark driver has 4000 MB of memory, and `driver.memoryOverhead` is 800 MB.
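For reference, the resource settings above can be written as Spark properties. This is a minimal sketch that assumes Spark 2.3+ property names (older YARN deployments used `spark.yarn.driver.memoryOverhead`); `SPARK_RESOURCES` and `to_submit_args` are hypothetical names.

```python
# Resource settings described above, expressed as Spark properties.
# Assumption: Spark 2.3+ names; older YARN setups used
# spark.yarn.driver.memoryOverhead instead of spark.driver.memoryOverhead.
SPARK_RESOURCES = {
    "spark.executor.instances": "2",
    "spark.executor.cores": "3",
    "spark.executor.memory": "7520m",
    "spark.driver.memory": "4000m",
    "spark.driver.memoryOverhead": "800m",
}


def to_submit_args(props):
    """Hypothetical helper: turn a property dict into spark-submit --conf flags."""
    args = []
    for key in sorted(props):
        args.extend(["--conf", f"{key}={props[key]}"])
    return args


if __name__ == "__main__":
    print(" ".join(["spark-submit"] + to_submit_args(SPARK_RESOURCES)))
```

The sketch builds command-line flags rather than setting these values in code because, in client mode, the driver JVM is already running when the application creates its `SparkConf`, so `spark.driver.memory` must come from `spark-submit` or `spark-defaults.conf`.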

MongoDB collections of up to 400 GB (around 100,000 Spark partitions) are indexed without any problem. For anything larger (I tested collections of 600 GB and more), I get a Full GC loop and finally `OutOfMemoryError: GC overhead limit exceeded`.
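Because each partition is processed by one task in a stage, those figures put the average partition at about 4 MB and a 600 GB collection at about 150,000 tasks. The arithmetic is sketched below under the unverified assumption that partition size stays roughly constant as collections grow; 2048 GB stands in for a hypothetical 2 TB collection.

```python
# Back-of-the-envelope task-count arithmetic based on the figures above.
# Assumption: partition size stays roughly constant (about 400 GB per
# 100,000 partitions), so the task count grows linearly with collection size.
OBSERVED_SIZE_GB = 400
OBSERVED_PARTITIONS = 100_000


def mb_per_partition():
    """Average partition size in MB implied by the observed run (1 GB = 1024 MB)."""
    return OBSERVED_SIZE_GB * 1024 / OBSERVED_PARTITIONS


def estimated_partitions(size_gb):
    """Linear extrapolation of the partition (and therefore task) count."""
    return round(size_gb * OBSERVED_PARTITIONS / OBSERVED_SIZE_GB)


if __name__ == "__main__":
    print(f"~{mb_per_partition():.1f} MB per partition")
    for size_gb in (400, 600, 2048):
        print(f"{size_gb} GB -> ~{estimated_partitions(size_gb):,} tasks")
```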

My Spark code is:

```python
import json

import pymongo_spark

# Adds mongoRDD() to SparkContext (pymongo-spark, from the mongo-hadoop project)
pymongo_spark.activate()

# sc, m_ip_port, e_ip_port, e_index and e_type are defined elsewhere in the job
m_projection = ['_id', 'key1', 'key2', 'key3']

rdd = sc.mongoRDD('mongodb://user:pass@ip:port/db.coll?authSource=admin_db')


def include_id(doc):
    # Replace Mongo's ObjectId with a string field and tag the source address
    doc["mongo_id"] = str(doc.pop('_id', ''))
    doc["ip"] = m_ip_port
    return doc


def it_projection(doc_it):
    # Keep only the projected fields of each document in the partition
    for doc in doc_it:
        projected_doc = {i: doc.get(i) for i in m_projection}
        yield projected_doc


json_rdd = (rdd.mapPartitions(it_projection)
            .map(include_id)
            .map(json.dumps)
            .map(lambda x: ('key', x)))

json_rdd.saveAsNewAPIHadoopFile(
    path='-',
    outputFormatClass="org.elasticsearch.hadoop.mr.EsOutputFormat",
    keyClass="org.apache.hadoop.io.NullWritable",
    valueClass="org.elasticsearch.hadoop.mr.LinkedMapWritable",
    conf={
        "es.nodes": e_ip_port,
        "es.resource": e_index + '/' + e_type,
        "es.input.json": "true"
    }
)
```
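The per-document steps do not depend on Spark, so they can be checked on a small in-memory sample. The sketch below copies `it_projection` and `include_id` from the job; `transform_partition` and the `m_ip_port` value are hypothetical placeholders.

```python
import json

# Hypothetical stand-ins for the job's globals; the address is a placeholder.
m_projection = ['_id', 'key1', 'key2', 'key3']
m_ip_port = 'mongo-host:27017'


def it_projection(doc_it):
    # Keep only the projected fields; missing fields become None
    for doc in doc_it:
        yield {i: doc.get(i) for i in m_projection}


def include_id(doc):
    # Replace _id with a string field and tag the source address
    doc["mongo_id"] = str(doc.pop('_id', ''))
    doc["ip"] = m_ip_port
    return doc


def transform_partition(docs):
    """Apply the same steps as the RDD chain to one partition, in plain Python."""
    return [('key', json.dumps(include_id(d))) for d in it_projection(docs)]


if __name__ == "__main__":
    sample = [{'_id': 1, 'key1': 'a', 'key2': 2, 'extra': 'dropped'}]
    for pair in transform_partition(sample):
        print(pair)
```

In the real job these functions run inside executor tasks, not in the driver, so if they behave as expected on a sample they are unlikely to be what grows the driver heap.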

Solutions I tried, none of which had any effect:

- increasing executor memory to 11 GB
- increasing driver memory to 8 GB
- setting the number of executors to 5

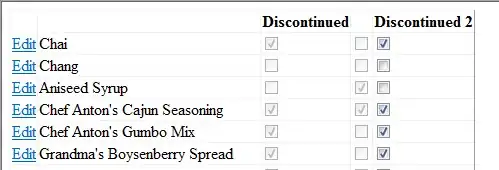

Finally, I analyzed the driver heap with jhat. I collected two heap dumps, one while the program was healthy and one while it was in the Full GC loop. The top 10 rows of the heap histogram are shown below:

I observed that the class `org.apache.spark.scheduler.AccumulableInfo` grew significantly in both instance count and total size.
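The comparison that surfaced `AccumulableInfo` can be scripted. The sketch below is a hypothetical helper, not part of Spark or the JDK: it assumes the class histograms come from `jmap -histo:live <pid>` (which forces a full GC before counting) in the usual `rank: #instances #bytes class` text layout, rather than from jhat's HTML view, and ranks classes by how many bytes they gained between the healthy and the GC-loop snapshots.

```python
import subprocess


def take_histogram(pid):
    """Return `jmap -histo:live` text for a JVM such as the Spark driver (needs a JDK on PATH)."""
    result = subprocess.run(["jmap", "-histo:live", str(pid)],
                            capture_output=True, text=True, check=True)
    return result.stdout


def parse_histogram(text):
    """Map class name -> (instances, bytes) from `jmap -histo` text output."""
    classes = {}
    for line in text.splitlines():
        parts = line.split()
        # Data rows start with a rank such as '1:'; headers, separators and the
        # 'Total' line are skipped. A trailing '(module@version)' column printed
        # by newer JDKs is ignored.
        if len(parts) >= 4 and parts[0].endswith(':') and parts[0][:-1].isdigit():
            inst, size = classes.get(parts[3], (0, 0))
            # The same class name can appear once per class loader; sum them
            classes[parts[3]] = (inst + int(parts[1]), size + int(parts[2]))
    return classes


def histogram_growth(before_text, after_text, n=10):
    """Rank classes by bytes gained between two snapshots, largest first.

    Returns (class_name, delta_instances, delta_bytes) tuples.
    """
    before = parse_histogram(before_text)
    after = parse_histogram(after_text)
    growth = []
    for name, (inst_after, bytes_after) in after.items():
        inst_before, bytes_before = before.get(name, (0, 0))
        growth.append((name, inst_after - inst_before, bytes_after - bytes_before))
    growth.sort(key=lambda g: g[2], reverse=True)
    return growth[:n]
```

Usage: save `take_histogram(driver_pid)` once while the job is healthy and once during the GC loop (`driver_pid` being the driver's process ID), then pass both strings to `histogram_growth`.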

`AccumulableInfo` contains information about a task's local updates to an `Accumulable`. The driver needs accumulators to keep count of how many partitions have finished execution, so it seems we cannot do without them.

So my question is: what am I doing wrong? How can I index this much data into ES using Spark? Should I give Spark more resources? I want to use this for MongoDB collections with a few TB of data.