Where X^_JW:

and delta_R_LV:

Where:

- All uppercase deltas and epsilon are constants
- R_WV is a 3,3 rotation matrix with 1000 samples
- R^_LV is a 3,3 constant rotation matrix
- I is a 3,3 rotation matrix
- Delta_R_LV is a 3,3 matrix we wish to solve for
- X_JLI is a 3,1 vector with 1000 samples
- T_LV is a 3,1 vector we wish to solve for
- T_VW is a 3,1 vector with 1000 samples
- X*_JW is a 3,1 vector with 1000 samples

I am having trouble understanding how to fit the 3,3 matrices with 1000 samples into a 2-D form that makes sense to optimize over. My idea was to flatten the last two dimensions, so that each stack of matrices has shape (1000, 9), but I don't understand how a matrix of that shape could then operate on a 3,1 vector.
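
A minimal sketch of one way the sample dimension could be folded into a 2-D problem. The equations from the question are not reproduced here, so the sketch assumes a hypothetical stand-in model, `y[i] ~= R[i] @ (M @ x[i] + t) + T[i]`, in which `R` plays the role of R_WV, `x` of X_JLI, `T` of T_VW, `y` of X*_JW, and `M` and `t` are the unknown 3,3 matrix and 3,1 vector. The names `stack_samples` and `unpack` and all of the data are made up for illustration. The idea is that each sample contributes three rows to one tall matrix, rather than each 3,3 matrix being flattened into nine columns.

```python
import numpy as np


def stack_samples(R, x, T, y):
    """Fold N per-sample 3x3 systems into one 2-D least-squares problem.

    Hypothetical model (an assumption, not the equations from the question):
        y[i] ~= R[i] @ (M @ x[i] + t) + T[i]
    with unknown M of shape (3, 3) and unknown t of shape (3,).

    R: (N, 3, 3), x: (N, 3), T: (N, 3), y: (N, 3).
    Returns A of shape (3N, 12) and b of shape (3N,) such that
    A @ theta ~= b, where theta = [vec(M) in column-major order, t].
    """
    N = R.shape[0]
    # R[i] @ M @ x[i] == kron(x[i], R[i]) @ vec(M); build all N blocks at once.
    K = np.einsum("nj,nik->nijk", x, R).reshape(N, 3, 9)
    # t enters through R[i] @ t, so R[i] itself is the block that multiplies t.
    A = np.concatenate([K, R], axis=2).reshape(3 * N, 12)
    b = (y - T).reshape(3 * N)
    return A, b


def unpack(theta):
    """Split the 12-vector of unknowns back into M (3, 3) and t (3,)."""
    return theta[:9].reshape(3, 3, order="F"), theta[9:]


# Synthetic, noise-free demo data (made up for illustration only).
rng = np.random.default_rng(0)
N = 1000
# Orthogonal 3x3 matrices standing in for the rotation samples.
R = np.stack([np.linalg.qr(rng.normal(size=(3, 3)))[0] for _ in range(N)])
x = rng.normal(size=(N, 3))
T = rng.normal(size=(N, 3))
M_true = rng.normal(size=(3, 3))
t_true = rng.normal(size=3)
y = np.einsum("nik,nk->ni", R, x @ M_true.T + t_true) + T

A, b = stack_samples(R, x, T, y)
theta, *_ = np.linalg.lstsq(A, b, rcond=None)
M_est, t_est = unpack(theta)
print(np.allclose(M_est, M_true), np.allclose(t_est, t_true))
```

Under these assumptions, `A` and `b` are ordinary 2-D and 1-D arrays, so they could also be passed to an objective of the form `cp.sum_squares(A @ theta - b)` with `theta = cp.Variable(12)` if constraints are needed. Whether this applies to the real problem depends on the unknowns entering the actual equations linearly.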

I understand how the examples work for a vector of samples of dim (N,1), and how to turn something like this into a matrix, from this example:

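    import cvxpy as cp
    import numpy as np

    # Placeholder problem data (sizes are assumed, not from my actual problem).
    m, n = 30, 20
    A = np.random.randn(m, n)
    b = np.random.randn(m)

    # Construct the problem.
    x = cp.Variable(n)
    objective = cp.Minimize(cp.sum_squares(A @ x - b))
    constraints = [0 <= x, x <= 1]
    prob = cp.Problem(objective, constraints)

    # The optimal objective value is returned by `prob.solve()`.
    result = prob.solve()
    # The optimal value for x is stored in `x.value`.
    print(x.value)
    # The optimal Lagrange multiplier for a constraint is stored in
    # `constraint.dual_value`.
    print(constraints[0].dual_value)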

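The same pattern with a matrix variable:

    # For A @ x - b to be valid, A must be (m, 1275) and b must be (m, 3).
    x = cp.Variable((1275, 3))
    objective = cp.Minimize(cp.sum_squares(A @ x - b))
    constraints = [0 <= x, x <= 1]
    prob = cp.Problem(objective, constraints)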

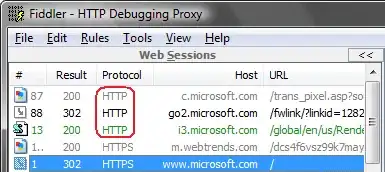

There is also another example which may be closer to my problem in this link:

http://nbviewer.jupyter.org/github/cvxgrp/cvx_short_course/blob/master/intro/control.ipynb