I have been working on some image segmentation tasks lately and would like to implement one from scratch.

Segmentation, as I understand it, is a per-pixel prediction of what each pixel belongs to: an object instance (things) or a background region (stuff).
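A minimal PyTorch sketch of the two label formats this implies. It assumes a network that outputs one score per class at every pixel; the shapes and helper names are illustrative, not taken from any particular model. Taking `argmax` over the class dimension gives a per-pixel label map, and one-hot encoding that map gives one binary mask per class, which is the kind of target a per-pixel sigmoid/BCE loss is compared against.

```python
import torch
import torch.nn.functional as F

def per_pixel_labels(logits):
    # logits: (N, C, H, W) class scores -> (N, H, W) index of the best class per pixel
    return logits.argmax(dim=1)

def label_map_to_binary_masks(label_map, num_classes):
    # (N, H, W) int64 label map -> (N, C, H, W) float tensor, one 0/1 mask per class
    return F.one_hot(label_map, num_classes).permute(0, 3, 1, 2).float()

# Hypothetical 3-class scores for a single 2x2 image
logits = torch.zeros(1, 3, 2, 2)
logits[0, 0] = 1.0          # class 0 wins by default
logits[0, 2, 0, 0] = 5.0    # top-left pixel -> class 2
logits[0, 1, 1, 1] = 5.0    # bottom-right pixel -> class 1

labels = per_pixel_labels(logits)                          # labels[0] is [[2, 0], [0, 1]]
masks = label_map_to_binary_masks(labels, num_classes=3)   # exactly one 1 per pixel
```

This is semantic (per-class) labelling; instance segmentation additionally separates different objects of the same class into different masks.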

According to the COCO dataset, on which the recent Mask R-CNN algorithm is trained and evaluated:

Things are countable objects such as people, animals, tools. Stuff classes are amorphous regions of similar texture or material such as grass, sky, road.
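In the COCO panoptic annotation files, the thing/stuff split is recorded per category through an `isthing` flag in the `categories` list, not per segment. A small sketch of separating an image's `segments_info` accordingly; the helper name is hypothetical, and the two-entry category list in the example is a stand-in with flags assumed for illustration (the real list comes from the annotation JSON).

```python
def split_things_stuff(segments_info, categories):
    # Map category id -> True for "things", False for "stuff" (COCO panoptic `isthing` flag)
    is_thing = {cat["id"]: bool(cat["isthing"]) for cat in categories}
    things = [seg for seg in segments_info if is_thing[seg["category_id"]]]
    stuff = [seg for seg in segments_info if not is_thing[seg["category_id"]]]
    return things, stuff

# Hypothetical example: category 1 treated as a thing, category 200 as stuff
categories = [{"id": 1, "isthing": 1}, {"id": 200, "isthing": 0}]
segments = [{"id": 3226956, "category_id": 1}, {"id": 6391959, "category_id": 200}]
things, stuff = split_things_stuff(segments, categories)
```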

According to the Mask R-CNN paper, the mask branch is trained with a per-pixel sigmoid and a binary cross-entropy loss, rather than a per-pixel softmax, so that masks of different classes do not compete with each other. This is built on top of the Faster R-CNN object detection pipeline, from which it gets the Regions of Interest (RoIs); these are passed through an RoIAlign layer to keep the spatial information intact.
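A minimal sketch of that mask loss in PyTorch, written from the paper's description rather than taken from any official implementation. It assumes the mask head outputs `K` raw mask logits of size `m × m` per RoI (one per class); for each RoI only the channel of its ground-truth class is selected, and `binary_cross_entropy_with_logits` (sigmoid plus BCE in one numerically stable call) averages the loss over those pixels. The function name and tensor shapes are illustrative assumptions.

```python
import torch
import torch.nn.functional as F

def mask_rcnn_mask_loss(mask_logits, gt_classes, gt_masks):
    # mask_logits: (R, K, m, m) raw logits for R RoIs and K classes
    # gt_classes:  (R,) int64 class index of the ground truth matched to each RoI
    # gt_masks:    (R, m, m) float 0/1 target mask for each RoI
    roi_idx = torch.arange(mask_logits.shape[0], device=mask_logits.device)
    selected = mask_logits[roi_idx, gt_classes]   # (R, m, m): only the GT class channel
    return F.binary_cross_entropy_with_logits(selected, gt_masks)

# Illustrative call: 2 RoIs, 3 classes, 4x4 masks, all-zero logits
mask_logits = torch.zeros(2, 3, 4, 4, requires_grad=True)
gt_classes = torch.tensor([0, 2])
gt_masks = torch.ones(2, 4, 4)
loss = mask_rcnn_mask_loss(mask_logits, gt_classes, gt_masks)   # log(2) for zero logits
loss.backward()
```

Because the other classes' channels never enter the loss, they receive no gradient from that RoI, which is what "no competition between classes" means in practice.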

Here is what confuses me. Consider the simple code snippet below, which applies a binary cross-entropy loss to three separate fully connected layers (a random experiment with scales):

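```python
import torch
import torch.nn as nn

class ModelMain(nn.Module):
    def __init__(self, incoming_size, outgoing_size_1, outgoing_size_2, outgoing_size_3):
        super().__init__()
        # Three separate fully connected heads. forward() feeds all of them the
        # same x, so they share one input size.
        self.fc_1 = nn.Linear(incoming_size, outgoing_size_1)
        self.fc_2 = nn.Linear(incoming_size, outgoing_size_2)
        self.fc_3 = nn.Linear(incoming_size, outgoing_size_3)

    def forward(self, x):
        # torch.sigmoid replaces the deprecated F.sigmoid
        y_1 = torch.sigmoid(self.fc_1(x))
        y_2 = torch.sigmoid(self.fc_2(x))
        y_3 = torch.sigmoid(self.fc_3(x))
        return y_1, y_2, y_3

# incoming_size / outgoing_size_* are placeholders for the layer sizes
model = ModelMain(incoming_size, outgoing_size_1, outgoing_size_2, outgoing_size_3)

# reduction="mean" replaces the deprecated size_average=True
criterion = nn.BCELoss(reduction="mean")

optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

def run_epoch(num_epochs=10):
    for epoch in range(num_epochs):
        # Find image segment predicted by running forward pass:
        y_predicted_1, y_predicted_2, y_predicted_3 = model(batch_data_x)

        # Compute and print loss (BCELoss needs batch_data_y to be a float
        # tensor with the same shape as each prediction):
        loss_1 = criterion(y_predicted_1, batch_data_y)
        loss_2 = criterion(y_predicted_2, batch_data_y)
        loss_3 = criterion(y_predicted_3, batch_data_y)
        print("Epoch", epoch, "Loss:", loss_1.item(), loss_2.item(), loss_3.item())

        # Perform backward pass: backpropagating the sum gives the same
        # gradients as calling backward() on each loss in turn
        optimizer.zero_grad()
        (loss_1 + loss_2 + loss_3).backward()
        optimizer.step()
```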

What exactly do we provide here as the label?

From the dataset (COCO panoptic annotations, val2017):

Image:

```json
{
  "license": 2,
  "file_name": "000000000139.jpg",
  "coco_url": "http://images.cocodataset.org/val2017/000000000139.jpg",
  "height": 426,
  "width": 640,
  "date_captured": "2013-11-21 01:34:01",
  "flickr_url": "http://farm9.staticflickr.com/8035/8024364858_9c41dc1666_z.jpg",
  "id": 139
}
```

Segment info:

```json
{
  "segments_info": [
    {"id": 3226956, "category_id": 1, "iscrowd": 0, "bbox": [413, 158, 53, 138], "area": 2840},
    {"id": 6979964, "category_id": 1, "iscrowd": 0, "bbox": [384, 172, 16, 36], "area": 439},
    {"id": 3103374, "category_id": 62, "iscrowd": 0, "bbox": [413, 223, 30, 81], "area": 1250},
    {"id": 2831194, "category_id": 62, "iscrowd": 0, "bbox": [291, 218, 62, 98], "area": 1848},
    {"id": 3496593, "category_id": 62, "iscrowd": 0, "bbox": [412, 219, 10, 13], "area": 90},
    {"id": 2633066, "category_id": 62, "iscrowd": 0, "bbox": [317, 219, 22, 12], "area": 212},
    {"id": 3165572, "category_id": 62, "iscrowd": 0, "bbox": [359, 218, 56, 103], "area": 2251},
    {"id": 8824489, "category_id": 64, "iscrowd": 0, "bbox": [237, 149, 24, 62], "area": 369},
    {"id": 3032951, "category_id": 67, "iscrowd": 0, "bbox": [321, 231, 126, 89], "area": 2134},
    {"id": 2038814, "category_id": 72, "iscrowd": 0, "bbox": [7, 168, 149, 95], "area": 13247},
    {"id": 3289671, "category_id": 72, "iscrowd": 0, "bbox": [557, 209, 82, 79], "area": 5846},
    {"id": 2437710, "category_id": 78, "iscrowd": 0, "bbox": [512, 206, 15, 16], "area": 224},
    {"id": 4159376, "category_id": 82, "iscrowd": 0, "bbox": [493, 174, 20, 108], "area": 2056},
    {"id": 3423599, "category_id": 84, "iscrowd": 0, "bbox": [613, 308, 13, 46], "area": 324},
    {"id": 3094634, "category_id": 84, "iscrowd": 0, "bbox": [605, 306, 14, 45], "area": 331},
    {"id": 3296100, "category_id": 85, "iscrowd": 0, "bbox": [448, 121, 14, 22], "area": 227},
    {"id": 6054280, "category_id": 86, "iscrowd": 0, "bbox": [241, 195, 14, 18], "area": 187},
    {"id": 5942189, "category_id": 86, "iscrowd": 0, "bbox": [549, 309, 36, 90], "area": 2171},
    {"id": 4086154, "category_id": 86, "iscrowd": 0, "bbox": [351, 209, 11, 22], "area": 178},
    {"id": 7438777, "category_id": 86, "iscrowd": 0, "bbox": [337, 200, 10, 16], "area": 120},
    {"id": 3031159, "category_id": 118, "iscrowd": 0, "bbox": [0, 269, 564, 157], "area": 49754},
    {"id": 9284267, "category_id": 119, "iscrowd": 0, "bbox": [338, 166, 29, 50], "area": 842},
    {"id": 6068135, "category_id": 130, "iscrowd": 0, "bbox": [212, 11, 321, 127], "area": 3391},
    {"id": 2567230, "category_id": 156, "iscrowd": 0, "bbox": [129, 168, 351, 162], "area": 5699},
    {"id": 10334639, "category_id": 181, "iscrowd": 0, "bbox": [204, 63, 234, 174], "area": 15587},
    {"id": 6266027, "category_id": 186, "iscrowd": 0, "bbox": [136, 0, 473, 116], "area": 20106},
    {"id": 5274512, "category_id": 188, "iscrowd": 0, "bbox": [0, 38, 549, 297], "area": 25483},
    {"id": 7238567, "category_id": 189, "iscrowd": 0, "bbox": [457, 350, 183, 76], "area": 9421},
    {"id": 4224910, "category_id": 199, "iscrowd": 0, "bbox": [0, 0, 640, 358], "area": 83201},
    {"id": 6391959, "category_id": 200, "iscrowd": 0, "bbox": [135, 359, 336, 67], "area": 12618}
  ],
  "file_name": "000000000139.png",
  "image_id": 139
}
```
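In COCO annotations `bbox` is `[x, y, width, height]`, with `(x, y)` the top-left corner in pixels. A tiny helper (hypothetical name) converting it to corner form, checked against two entries in the segment list: the box of segment 6391959 reaches the bottom edge (359 + 67 = 426, the image height), and the box of segment 4224910 spans the full image width of 640.

```python
def xywh_to_xyxy(bbox):
    # COCO [x, y, width, height] -> [x_min, y_min, x_max, y_max]
    x, y, w, h = bbox
    return [x, y, x + w, y + h]

print(xywh_to_xyxy([135, 359, 336, 67]))  # [135, 359, 471, 426]
print(xywh_to_xyxy([0, 0, 640, 358]))     # [0, 0, 640, 358]
```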

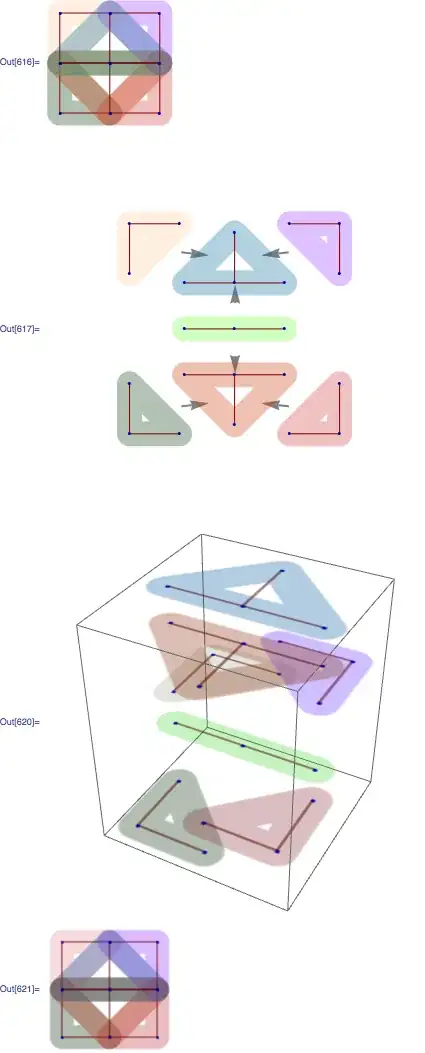

The Mask image :

For the object detection task we have the bounding boxes, but for image segmentation I need to calculate the loss against the provided mask.
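Roughly how a box and a mask combine into a training target in Mask R-CNN-style models: the full-image binary mask of the matched object is cropped to the RoI (a proposal box during training) and resized to the mask head's fixed `m × m` output. The sketch below assumes integer `[x, y, width, height]` boxes and a default `m = 28`; it uses a plain crop plus `F.interpolate` instead of RoIAlign and thresholds the result back to 0/1. Both are simplifications, and the helper name is hypothetical.

```python
import torch
import torch.nn.functional as F

def mask_target_for_box(mask, bbox, m=28):
    # mask: (H, W) 0/1 tensor for one object; bbox: [x, y, width, height]
    x, y, w, h = (int(round(v)) for v in bbox)
    w, h = max(w, 1), max(h, 1)                   # avoid empty crops for degenerate boxes
    crop = mask[y:y + h, x:x + w].float()          # part of the mask inside the box
    resized = F.interpolate(crop[None, None], size=(m, m),
                            mode="bilinear", align_corners=False)[0, 0]
    return (resized >= 0.5).float()                # back to a binary m x m target

# Hypothetical 10x10 mask whose object fills rows 2-5 and columns 3-7
mask = torch.zeros(10, 10)
mask[2:6, 3:8] = 1.0
target = mask_target_for_box(mask, [3, 2, 5, 4], m=28)   # box covers exactly the object
```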

So what should the value of `batch_data_y` be in the code above?

Will it be the mask image flattened into a vector? But wouldn't that just train my network to predict what color a segment is? Or am I missing some other segment annotation?
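A sketch of turning the mask PNG into per-segment binary masks, assuming the COCO panoptic PNG convention in which a pixel's RGB colour encodes its segment id as `id = R + 256*G + 256**2*B` (the same formula as the `rgb2id` helper in the official `panopticapi` package). Under that convention the colours are segment ids rather than classes: each `segments_info` entry's `id` selects one binary mask, and its `category_id` gives that mask's class. The function names and the tiny example image are hypothetical; loading the real PNG (for example `np.array(Image.open(path).convert("RGB"))` with Pillow) is left out so the sketch depends only on NumPy.

```python
import numpy as np

def rgb2id(color):
    # (H, W, 3) uint8 colours -> (H, W) int64 segment ids: R + 256*G + 256^2*B
    color = color.astype(np.int64)  # widen first so uint8 arithmetic cannot overflow
    return color[..., 0] + 256 * color[..., 1] + 256 * 256 * color[..., 2]

def panoptic_png_to_masks(panoptic_rgb, segments_info):
    # One binary (H, W) mask per segment, plus the matching category ids
    ids = rgb2id(panoptic_rgb)
    if not segments_info:
        return np.zeros((0,) + ids.shape, dtype=np.float32), np.zeros(0, dtype=np.int64)
    masks = np.stack([(ids == seg["id"]).astype(np.float32) for seg in segments_info])
    labels = np.array([seg["category_id"] for seg in segments_info], dtype=np.int64)
    return masks, labels

# Tiny hypothetical 2x2 PNG: colour (76, 61, 49) encodes id 3226956, (1, 0, 0) encodes id 1
png = np.zeros((2, 2, 3), dtype=np.uint8)
png[0, 0] = png[1, 1] = (76, 61, 49)
png[0, 1] = (1, 0, 0)
masks, labels = panoptic_png_to_masks(png, [{"id": 3226956, "category_id": 1},
                                            {"id": 1, "category_id": 62}])
```

If `area` is the segment's pixel count, as it appears to be in the panoptic format, `masks[i].sum()` on a real image should match the `area` of the i-th entry, which makes a quick sanity check.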