I have an AWS EC2 instance with DUAL-CORE and 4 GB Memory. I have setup my Apache2 HTTP server running PHP "7.0.30" and MySQL "Ver 14.14 Distrib 5.7.22".

There are various devices that are sending GET/POST request to my Http server. Each post and get request using select and update queries.

Right now, there are around 200 devices which are hitting my Http server simultaneously and hitting SQL queries of select and update together. These hits contain data in JSON formats.

The problem is that my MYSQL server has become too much slow. It takes long time to gather data from select queries and load pages.

From phpMyAdmin, I see a number of sleep processes in status for queries. I also have tuned various parameters of my SQL server but no result.

One of the major query that is taking time is update query which is updating long text data in table and is coming from device in every 60 seconds simultaneously and we see its processes empty after a long period of time in MYSQL server status.

Is there a way to optimize it using SQL parameters to keep MYSQL server fast even with 1000s of queries with multiple connections coming to update the table column having long text ?

Most of the Global variables are with default values. I also tried changing values of Various Global variables but it didn't produce any result.

How can I reduce this slow processing of queries?

P.S: I believe the issue is due to Update queries. I have tuned Select queries and they seems fine. But, for UPDATE queries, I see sleep of upto 12 seconds in Processes tab of phpMyAdmin.

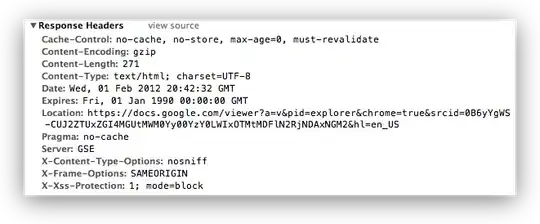

I have added link to the image having this issue (Here, you can see sleeps of even 13 seconds, all in UPDATE queries) :

Here is the PasteBin for the query of an UPDATE operation: https://pastebin.com/kyUnkJmz