I am trying to replicate the result of this link using linear convolution in the spatial domain.

The images are first converted to 2D double arrays and then convolved. The image and the kernel are the same size. The image is padded before the convolution and cropped accordingly afterwards.
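For reference, the pad, convolve, crop sequence described above can be checked against a direct full linear convolution. The following is only a minimal sketch, not the code used in this question: `FullConvolutionSketch` and `ConvolveFull` are hypothetical names, and it assumes the image is zero outside its borders. The output is (N + M - 1) samples wide in each dimension, so it still has to be cropped to the region of interest.

```csharp
using System;

// Hypothetical helper, not part of the original code base.
public static class FullConvolutionSketch
{
    // Full linear convolution, assuming zeros outside the image.
    // The output has size (imageSize + kernelSize - 1) in each dimension.
    public static double[,] ConvolveFull(double[,] image, double[,] kernel)
    {
        int imageWidth = image.GetLength(0);
        int imageHeight = image.GetLength(1);
        int kernelWidth = kernel.GetLength(0);
        int kernelHeight = kernel.GetLength(1);

        var result = new double[imageWidth + kernelWidth - 1,
                                imageHeight + kernelHeight - 1];

        for (int x = 0; x < imageWidth; x++)
        {
            for (int y = 0; y < imageHeight; y++)
            {
                for (int u = 0; u < kernelWidth; u++)
                {
                    for (int v = 0; v < kernelHeight; v++)
                    {
                        // Scatter form: every image sample adds a shifted,
                        // scaled copy of the kernel to the output.
                        result[x + u, y + v] += image[x, y] * kernel[u, v];
                    }
                }
            }
        }

        return result;
    }
}
```

Written in this scatter form, no explicit kernel flip is needed; a gather-style loop that reads a window of the image for each output pixel would have to index the kernel in reverse to compute the same thing.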

Compared to the FFT-based convolution, the output looks wrong.

How can I solve the issue?

Note that the image output I obtained from MATLAB matches my C# FFT output.

Update-1: Following @Ben Voigt's comment, I changed the Rescale() function to replace 255.0 with 1.0, which improved the output substantially. However, the output still doesn't match the FFT output (which is the correct one).

Update-2: Following @Cris Luengo's comment, I padded the image by stitching and then performed the spatial convolution. The outcome is as follows:

The output is worse than the previous one. However, it resembles the 2nd output of the linked answer, which means a circular convolution is not the solution.

Update-3: I used the Sum() function proposed in @Cris Luengo's answer. The result is an improvement over **Update-1**:

However, it is still not identical to the FFT version.

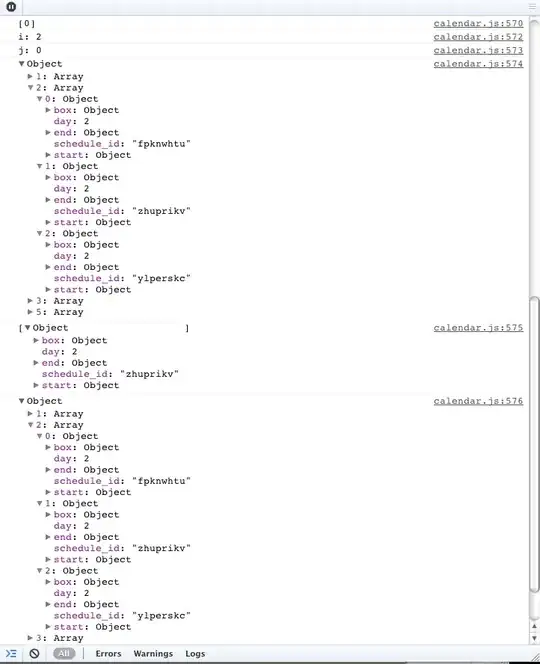

Update-4: Following @Cris Luengo's comment, I have subtracted the two outcomes to see the difference:

1. spatial minus frequency domain

2. frequency minus spatial domain

The difference looks substantial, which suggests that the spatial convolution is not being done correctly.
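The comparison can also be done numerically on the double arrays before they are converted to bitmaps. The following is a minimal sketch under assumptions: `DifferenceSketch`, `Subtract` and `MaxAbs` are hypothetical names, and both outputs are assumed to have already been cropped to the same size. Working on doubles keeps the sign of the difference, so one signed result covers both "spatial minus frequency" and "frequency minus spatial".

```csharp
using System;

// Hypothetical helper, not part of the original code base.
public static class DifferenceSketch
{
    // Element-wise a - b; both arrays must have the same dimensions.
    public static double[,] Subtract(double[,] a, double[,] b)
    {
        int width = a.GetLength(0);
        int height = a.GetLength(1);

        if (b.GetLength(0) != width || b.GetLength(1) != height)
        {
            throw new ArgumentException("Arrays must have the same dimensions.");
        }

        var difference = new double[width, height];

        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                difference[x, y] = a[x, y] - b[x, y];
            }
        }

        return difference;
    }

    // Largest absolute value in the array; 0.0 means the inputs were identical.
    public static double MaxAbs(double[,] values)
    {
        double max = 0.0;

        foreach (double value in values)
        {
            max = Math.Max(max, Math.Abs(value));
        }

        return max;
    }
}
```

A single number such as `MaxAbs(Subtract(spatial, frequency))` makes it easier to tell a rounding-level mismatch from a structural one, provided both arrays are on the same scale (for example, both rescaled to a maximum of 1.0).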

Source Code:

(Let me know if you need to see more of the source code.)

    public static double[,] LinearConvolutionSpatial(double[,] image, double[,] mask)
    {
        int maskWidth = mask.GetLength(0);
        int maskHeight = mask.GetLength(1);

        double[,] paddedImage = ImagePadder.Pad(image, maskWidth);
        double[,] conv = Convolution.ConvolutionSpatial(paddedImage, mask);

        int cropSize = (maskWidth/2);
        double[,] cropped = ImageCropper.Crop(conv, cropSize);

        return conv;
    }

    static double[,] ConvolutionSpatial(double[,] paddedImage1, double[,] mask1)
    {
        int imageWidth = paddedImage1.GetLength(0);
        int imageHeight = paddedImage1.GetLength(1);

        int maskWidth = mask1.GetLength(0);
        int maskHeight = mask1.GetLength(1);

        // The output shrinks by (maskSize / 2) * 2 in each dimension.
        int convWidth = imageWidth - ((maskWidth / 2) * 2);
        int convHeight = imageHeight - ((maskHeight / 2) * 2);

        double[,] convolve = new double[convWidth, convHeight];

        for (int y = 0; y < convHeight; y++)
        {
            for (int x = 0; x < convWidth; x++)
            {
                // (x, y) is the top-left corner of the window in the padded image.
                int startX = x;
                int startY = y;

                convolve[x, y] = Sum(paddedImage1, mask1, startX, startY);
            }
        }

        Rescale(convolve);

        return convolve;
    }

    // Weighted sum of the mask-sized window whose top-left corner is (startX, startY).
    static double Sum(double[,] paddedImage1, double[,] mask1, int startX, int startY)
    {
        double sum = 0;

        int maskWidth = mask1.GetLength(0);
        int maskHeight = mask1.GetLength(1);

        for (int y = startY; y < (startY + maskHeight); y++)
        {
            for (int x = startX; x < (startX + maskWidth); x++)
            {
                double img = paddedImage1[x, y];
                double msk = mask1[x - startX, y - startY];
                sum = sum + (img * msk);
            }
        }

        return sum;
    }

    static void Rescale(double[,] convolve)
    {
        int imageWidth = convolve.GetLength(0);
        int imageHeight = convolve.GetLength(1);

        // Find the largest value (the search starts at 0.0).
        double maxAmp = 0.0;

        for (int j = 0; j < imageHeight; j++)
        {
            for (int i = 0; i < imageWidth; i++)
            {
                maxAmp = Math.Max(maxAmp, convolve[i, j]);
            }
        }

        // Scale every value in place by 1.0 / maxAmp.
        double scale = 1.0 / maxAmp;

        for (int j = 0; j < imageHeight; j++)
        {
            for (int i = 0; i < imageWidth; i++)
            {
                double d = convolve[i, j] * scale;
                convolve[i, j] = d;
            }
        }
    }

    public static Bitmap ConvolveInFrequencyDomain(Bitmap image1, Bitmap kernel1)
    {
        Bitmap outcome = null;

        Bitmap image = (Bitmap)image1.Clone();
        Bitmap kernel = (Bitmap)kernel1.Clone();

        //linear convolution: sum.
        //circular convolution: max
        uint paddedWidth = Tools.ToNextPow2((uint)(image.Width + kernel.Width));
        uint paddedHeight = Tools.ToNextPow2((uint)(image.Height + kernel.Height));

        Bitmap paddedImage = ImagePadder.Pad(image, (int)paddedWidth, (int)paddedHeight);
        Bitmap paddedKernel = ImagePadder.Pad(kernel, (int)paddedWidth, (int)paddedHeight);

        Complex[,] cpxImage = ImageDataConverter.ToComplex(paddedImage);
        Complex[,] cpxKernel = ImageDataConverter.ToComplex(paddedKernel);

        // call the complex function
        Complex[,] convolve = Convolve(cpxImage, cpxKernel);

        outcome = ImageDataConverter.ToBitmap(convolve);
        outcome = ImageCropper.Crop(outcome, (kernel.Width/2)+1);

        return outcome;
    }