I am building an encoder-decoder network for image segmentation. That is to say, I train the whole network on masks with a black background and a white foreground. In the test process, however, it generates nothing but black images.


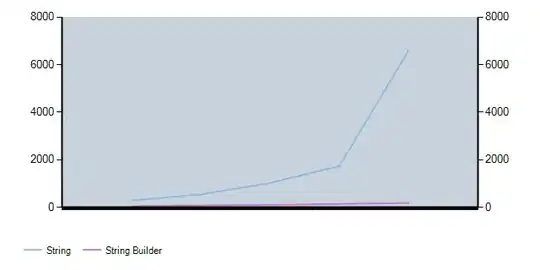

I checked the softmax output and found that it does assign background pixels to channel 0, so the argmax op produces 0 at those pixels. But the softmax output for foreground pixels is even, just (0.5, 0.5), so the network can't distinguish them. The argmax then falls back to the natural order and picks index 0 as the class, so it produces 0 there too.
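The tie-breaking described above can be reproduced with NumPy, whose `argmax` and `argmin` both return the first index when values are equal. This is a minimal sketch, assuming a hypothetical 1x2 image with two output channels (0 = background, 1 = foreground) and made-up logits; the `softmax` helper is written out here rather than taken from any framework:

```python
import numpy as np

def softmax(logits, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    shifted = logits - logits.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

# Hypothetical logits, shape (H=1, W=2, C=2).
# Pixel 0: confidently background. Pixel 1: equal logits, i.e. the network is undecided.
logits = np.array([[[4.0, -4.0], [0.0, 0.0]]])

probs = softmax(logits)                # pixel 1 becomes exactly (0.5, 0.5)
labels_argmax = probs.argmax(axis=-1)  # ties resolve to the first index, 0
labels_argmin = probs.argmin(axis=-1)  # ties also resolve to the first index, 0

print(labels_argmax.tolist())  # [[0, 0]]  -> all black
print(labels_argmin.tolist())  # [[1, 0]]  -> background white, undecided pixel still black
```

With exactly equal probabilities both `argmax` and `argmin` pick channel 0, so the undecided foreground pixel stays black either way, and only the confident background pixel flips under `argmin`, which matches the reversed image.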

So when I use argmax it generates an all-black image, while argmin generates the reverse result. This means the network does distinguish the background, but at these evenly split pixels it fails.

I suspect the cause is the different upsampling process I use. The blog post https://distill.pub/2016/deconv-checkerboard/ is what inspired me: I used to use deconvolution layers, but they generated checkerboard artifacts inside the white area. So I followed the blog and replaced each deconv layer with an upsampling layer followed by a size-keeping conv operation. But it fails, too. WHERE should I modify? I'm really confused and a bit upset.
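To make the difference between the two upsampling schemes concrete, here is a small NumPy-only sketch (the framework used in the post is not stated, so no framework layers appear). `transposed_conv_overlap_1d` counts how many kernel taps of a stride-2 transposed convolution land on each output position, which is the uneven overlap the distill article associates with checkerboard artifacts. `resize_conv` is the replacement described above: nearest-neighbour upsampling followed by a zero-padded, size-keeping convolution. All function names and the averaging kernel are hypothetical choices for illustration; the sketch only shows the artifact mechanism and does not by itself explain the all-black output.

```python
import numpy as np

def transposed_conv_overlap_1d(in_len, kernel, stride):
    # Count how many kernel taps land on each output position of a 1-D
    # transposed convolution without padding. Uneven counts give checkerboards.
    out_len = (in_len - 1) * stride + kernel
    counts = np.zeros(out_len, dtype=int)
    for i in range(in_len):
        counts[i * stride : i * stride + kernel] += 1
    return counts

def upsample_nearest(x, factor=2):
    # Nearest-neighbour upsampling of an (H, W) array by an integer factor.
    return np.repeat(np.repeat(x, factor, axis=0), factor, axis=1)

def conv2d_same(x, kernel):
    # Size-keeping 2-D cross-correlation with zero padding (odd kernel sizes).
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(x, ((ph, ph), (pw, pw)))
    out = np.zeros(x.shape, dtype=float)
    for r in range(x.shape[0]):
        for c in range(x.shape[1]):
            out[r, c] = np.sum(padded[r : r + kh, c : c + kw] * kernel)
    return out

def resize_conv(x, kernel, factor=2):
    # Upsample first, then convolve: every output pixel sees the same number of taps.
    return conv2d_same(upsample_nearest(x, factor), kernel)

# Stride 2 with kernel size 3: positions alternate between 1 and 2 contributions.
print(transposed_conv_overlap_1d(4, 3, 2))  # [1 1 2 1 2 1 2 1 1]

# A constant 4x4 input stays uniform (away from the zero-padded border) after resize-conv.
out = resize_conv(np.ones((4, 4)), np.ones((3, 3)) / 9.0)
print(np.allclose(out[1:-1, 1:-1], 1.0))  # True
```

The alternating 1/2 counts are why a stride-2, kernel-3 deconvolution can stamp a lattice into a uniform white region, while upsample-then-convolve gives every interior pixel the same coverage.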


The earlier question "My Image segmentation result map contains black lattice in the white patch" was also posted by me, but it is a different problem: the two use different models and produce different results. The former is about the deconv layer; this one is about the solution to checkerboard artifacts, upsampling, which still fails on my network because it produces only black pixels and is unable to classify the white-area pixels.