I am trying to fit a curve smoothing function onto a number of my data sets, but I actually need to manually input the guess parameter for the respective lambda, theta, sigma and variables etc for each of such sets, or else it would provide a relatively poor fit.

These leads to two questions: 1)Is there actually a way to program the estimates or get curve_fit to find the best guess parameter to work with?

2)If this is not possible, how can I force curve_fit to work with a given fixed set of guess parameters across different data and have it still produce the best possible result/fit for all?

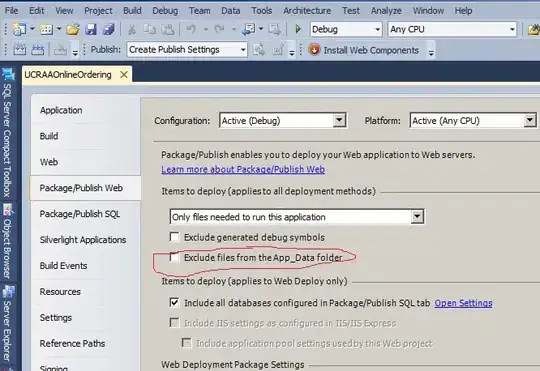

To give a better example/context for the questions, a lambda value of 0.25 for both data sets produced the following fits:-

But set 1 works better with a lambda value of 0.75 (manually altered). Clearly this is a better fit, but because the guess parameter was set to 0.25, this 'better fit' was not found.

The following are my sample codes:-

import matplotlib.pyplot as plt

from scipy.optimize import curve_fit

import numpy as np

rawDataList = [0.76,0.77,0.81,0.84,0.83,0.85,0.77,0.66,0.64,0.72,0.69,0.59,0.74,0.65,0.76,

0.76,0.88,0.75,0.53,0.72,0.53,0.74,0.72,0.62,0.73,0.77,0.74,0.54,0.58,0.70,0.83,0.67,0.84,0.62]

rawDataList_2 = [0.74,0.77,0.75,0.66,0.6,0.63,0.76,0.73,0.56,0.68,0.74,0.56,0.76,0.70,0.72,

0.83,0.76,0.69,0.64,0.68,0.71,0.71,0.61,0.78,0.65,0.61,0.72]

def GaussianSmooth(x, c1, c3, Lambda, theta, sigma):

x0 = 0.

return c1 + c3 * np.cos((2*np.pi*(x/Lambda)) - theta) * np.exp(-(x - x0)**2 / (2 * sigma**2))

## For Binned Data of rawDataList

x = np.arange(len(rawDataList))

x = x*0.06 #Convert x-axis to seconds.

y = np.array(rawDataList)

popt,pcov = curve_fit(GaussianSmooth, x, y, p0=[np.mean(rawDataList),np.max(rawDataList) - np.mean(rawDataList),0.75,0.0,1.5], bounds=((0., 0., 0. ,0., 0.), (1.0, 1.0, 10.0, 10.0, 10.0)), method='trf',maxfev=10000)

plt.xlabel('Time (s)')

plt.ylabel('Performance from 0-100%')

plt.title('Fit for Performance')

plt.plot(x, y, 'b+:', color='blue', label='data')

plt.plot(x, GaussianSmooth(x, *popt), 'r-', color='red', label='fit')

plt.legend()

plt.show()

## For Binned Data of rawDataList_2

x = np.arange(len(rawDataList_2))

x = x*0.06 #Convert x-axis to seconds.

y = np.array(rawDataList_2)

popt,pcov = curve_fit(GaussianSmooth, x, y, p0=[np.mean(rawDataList_2),np.max(rawDataList_2) - np.mean(rawDataList_2),0.25,0.0,1.5], bounds=((0., 0., 0. ,0., 0.), (1.0, 1.0, 10.0, 10.0, 10.0)), method='trf',maxfev=10000)

plt.xlabel('Time (s)')

plt.ylabel('Performance from 0-100%')

plt.title('Fit for Performance')

plt.plot(x, y, 'b+:', color='red', label='data')

plt.plot(x, GaussianSmooth(x, *popt), 'r-', color='blue', label='fit')

plt.legend()

plt.show()

POST EDIT IN RESPONSE TO COMMENT 1:

def generate_Initial_Parameters():

global parameterBounds2

parameterBounds = []

parameterBounds.append([np.mean(rawDataList) - 0.05, np.mean(rawDataList) + 0.05]) # parameter bounds for c1; 0.05 arbitrary just to give it a small window to form proper lower and upper bound

parameterBounds.append([np.max(rawDataList) - np.mean(rawDataList) - 0.05, np.max(rawDataList) - np.mean(rawDataList) + 0.05]) # parameter bounds c3

parameterBounds.append([0.125, 10.0]) # parameter bounds for Lambda; Nyquist limit, can't detect more than 8Hz in current data set. So 1/8 = 0.125. 1/0.1 = 10.

parameterBounds.append([0.0, 2*np.pi]) # parameter bounds for theta; Phase offset in radians.

parameterBounds.append([0.0, 3.0]) # parameter bounds for sigma

parameterBounds2 = ((parameterBounds[0][0], parameterBounds[1][0], parameterBounds[2][0],

parameterBounds[3][0], parameterBounds[4][0]), (parameterBounds[0][1],

parameterBounds[1][1], parameterBounds[2][1], parameterBounds[3][1],

parameterBounds[4][1]))

# "seed" the numpy random number generator for repeatable results

result = differential_evolution(sumOfSquaredError, parameterBounds, seed=3)

return result.x

popt,pcov = curve_fit(GaussianSmooth, x, y, initialParameters, bounds=parameterBounds2, maxfev=10000)