Edit:

I made the following changes and got an accuracy of about 80%:

- Changed epochs to 2300
- Changed the learning rate to 0.000006
- Changed `np.random.rand` to `np.random.randn`

I can understand why the epochs and learning rate matter, but I have no idea what difference `rand` versus `randn` makes.

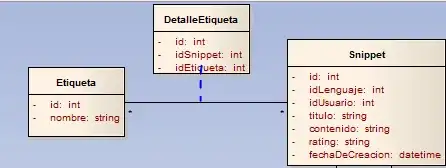
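Here is a likely reason `rand` versus `randn` matters. It is offered as a hypothesis, not a verified diagnosis. `np.random.rand` draws from the uniform distribution on [0, 1), so every weight is non-negative. `np.random.randn` draws from a standard normal, so weights take both signs. With raw pixel values (0 to 255) and all-positive weights, every first-layer pre-activation is a large positive number. `tanh` then returns 1.0 for every unit on every image, and the layer carries no information about which digit it saw. The sketch uses synthetic random pixels rather than real MNIST. It also uses NumPy's `default_rng` generator, which draws from the same distributions as `rand` and `randn`, instead of the legacy functions from the question.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for unnormalized MNIST: 5 "images" of 784 pixels in 0..255
# (random values, NOT real digits).
X = rng.integers(0, 256, size=(5, 784)).astype(np.float64)

W_rand = rng.random((784, 30))            # same distribution as np.random.rand: U[0, 1), all >= 0
W_randn = rng.standard_normal((784, 30))  # same distribution as np.random.randn: N(0, 1), both signs

def first_hidden_layer(W):
    # Biases start at zero, as in the question's __init__, so they are omitted here
    return np.tanh(X @ W)

a_rand = first_hidden_layer(W_rand)
a_randn = first_hidden_layer(W_randn)

# Non-negative pixels times non-negative weights give huge positive sums,
# so tanh saturates to exactly 1.0 for every unit and every image.
print("rand : all activations == 1:", bool(np.all(a_rand == 1.0)))
print("rand : distinct hidden vectors:", len(np.unique(a_rand, axis=0)))

# Zero-mean weights give sums of mixed sign: units still saturate near +-1,
# but the sign pattern changes from image to image.
print("randn: fraction of positive activations:", float(np.mean(a_randn > 0)))
print("randn: distinct sign patterns:", len(np.unique(np.sign(a_randn), axis=0)))
```

With `rand`, every image maps to the same hidden vector. The later layers therefore receive identical input whatever the digit. With `randn`, the saturated units still form an input-dependent pattern that the output layer can learn from.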

I am attempting digit classification on the MNIST database with a neural network of this structure:

- Input layer: 784 neurons (inputs not normalized)
- Hidden layer 1: 30 neurons, tanh activation
- Hidden layer 2: 30 neurons, tanh activation
- Output layer: 10 neurons, softmax activation
- Cost function: cross-entropy
- Learning rate: 0.000001; epochs: 30; batch size: 1
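The output layer pairs softmax with cross-entropy. Because of that, the gradient of the loss with respect to the output pre-activations `z3` is just the softmax output minus the one-hot label, which is what the training loop builds in `delta4`. The sketch below checks this identity numerically for one sample using central finite differences. Several pieces are illustrative assumptions: the label, the random logits, and the max-subtraction inside `softmax`. The max-subtraction is a common guard against `np.exp` overflow and is not in the question's code.

```python
import numpy as np

def softmax(z):
    # Subtracting the row max leaves the result unchanged but avoids overflow in np.exp
    e = np.exp(z - np.max(z, axis=1, keepdims=True))
    return e / np.sum(e, axis=1, keepdims=True)

def cross_entropy(z, y):
    # Summed negative log-probability of the correct class, like the question's error()
    p = softmax(z)
    return -np.sum(np.log(p[np.arange(len(y)), y]))

rng = np.random.default_rng(1)
z = rng.standard_normal((1, 10))  # one sample's output pre-activations (batch size 1)
y = np.array([3])                 # hypothetical label

# Analytic gradient: softmax(z) minus one-hot(y), as built in delta4
analytic = softmax(z)
analytic[np.arange(len(y)), y] -= 1

# Central finite differences, one logit at a time
eps = 1e-6
numeric = np.zeros_like(z)
for k in range(z.shape[1]):
    zp, zm = z.copy(), z.copy()
    zp[0, k] += eps
    zm[0, k] -= eps
    numeric[0, k] = (cross_entropy(zp, y) - cross_entropy(zm, y)) / (2 * eps)

print("max abs difference:", np.max(np.abs(analytic - numeric)))
```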

With this setup, the accuracy it reaches is just 11%, and after 20-25 epochs the error stalls at a constant value (138328), which is definitely bad.
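Some arithmetic helps put the plateau value in context. `error()` sums the per-sample cross-entropy over the whole training set. A network that assigned probability 1/10 to every class for every image would therefore score N ln 10. Assuming the standard 60,000-image MNIST training set, that comes to about 138155. This is close to the observed 138328, which hints, without proving it, that the network's outputs barely depend on the input.

```python
import math

N_TRAIN = 60000  # assumed: size of the standard MNIST training set

# Cross-entropy of a prediction that puts probability 1/10 on every class
per_sample = -math.log(1 / 10)   # ln(10), about 2.3026
uniform_total = N_TRAIN * per_sample

print(round(uniform_total, 1))  # → 138155.1
```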

The code is only lightly commented, so if anything is hard to follow, ask in the comments and I'll add more.

The neural network class (`NueralNetwork`) and the script that trains it:

```python
import sys
import time

import numpy as np

import initial  # module containing load_data_mnist(), shown below


class NueralNetwork():

    def __init__(self, input_size, hidden1_size, hidden2_size, output_size):
        self.input_layer_size = input_size
        self.hidden_layer1_size = hidden1_size
        self.hidden_layer2_size = hidden2_size
        self.output_layer_size = output_size

        np.random.seed(1)
        # Weight matrices, drawn uniformly from [0, 1)
        self.W1 = np.random.rand(self.input_layer_size, self.hidden_layer1_size)
        self.W2 = np.random.rand(self.hidden_layer1_size, self.hidden_layer2_size)
        self.W3 = np.random.rand(self.hidden_layer2_size, self.output_layer_size)

        # Biases start at zero
        self.b1 = np.zeros((1, self.hidden_layer1_size))
        self.b2 = np.zeros((1, self.hidden_layer2_size))
        self.b3 = np.zeros((1, self.output_layer_size))

    def train(self, input_values, label, batch_size, epochs, learning_rate, error=False):
        self.batch_size = batch_size
        self.learning_rate = learning_rate
        print("training...")
        starting_time = time.time()
        N = len(input_values)

        for j in range(epochs):
            # "j" is the epoch number
            i = 0
            print("## Epoch: ", j+1, " ##")
            print("Time Lapsed: ", time.time() - starting_time)
            self.error(input_values, label)

            # "<=" so the last mini-batch of each epoch is not skipped
            while i + batch_size <= N:
                sys.stdout.write("\rCompleted " + str(100*i/N) + " in epoch " + str(j+1))
                sys.stdout.flush()

                X = input_values[i:i+batch_size]
                y = label[i:i+batch_size]
                i = i + batch_size

                # Forward pass: tanh -> tanh -> softmax
                z1 = X @ self.W1 + self.b1
                a1 = np.tanh(z1)
                z2 = a1 @ self.W2 + self.b2
                a2 = np.tanh(z2)
                z3 = a2 @ self.W3 + self.b3
                a3_temp = np.exp(z3)
                a3 = a3_temp / np.sum(a3_temp, keepdims=True, axis=1)

                # Output error for softmax + cross-entropy: a3 - one_hot(y)
                delta4 = a3
                delta4[range(len(y)), y] -= 1
                dw3 = a2.T @ delta4
                db3 = np.sum(delta4, axis=0, keepdims=True)

                # Backpropagate through tanh, using tanh'(z) = 1 - tanh(z)**2
                delta3 = (delta4 @ self.W3.T) * (1 - np.power(a2, 2))
                dw2 = np.dot(a1.T, delta3)
                db2 = np.sum(delta3, axis=0)

                delta2 = (delta3 @ self.W2.T) * (1 - np.power(a1, 2))
                dw1 = np.dot(X.T, delta2)
                db1 = np.sum(delta2, axis=0)

                # Gradient-descent update
                self.W1 += -learning_rate * dw1
                self.b1 += -learning_rate * db1
                self.W2 += -learning_rate * dw2
                self.b2 += -learning_rate * db2
                self.W3 += -learning_rate * dw3
                self.b3 += -learning_rate * db3

            if error:
                self.error(input_values, label)
            print("")

    def error(self, X, y):
        # Prints the cross-entropy loss summed over all samples in X
        z1 = X @ self.W1 + self.b1
        a1 = np.tanh(z1)
        z2 = a1 @ self.W2 + self.b2
        a2 = np.tanh(z2)
        z3 = a2 @ self.W3 + self.b3
        a3_temp = np.exp(z3)
        a3 = a3_temp / np.sum(a3_temp, keepdims=True, axis=1)

        correct_loss = -np.log(a3[range(len(y)), y])
        data_loss = np.sum(correct_loss)
        print(data_loss)

    def show_performance(self, X, y):
        # Prints the summed loss and the fraction of correctly classified samples
        print("Error is: ", end="")
        z1 = X @ self.W1 + self.b1
        a1 = np.tanh(z1)
        z2 = a1 @ self.W2 + self.b2
        a2 = np.tanh(z2)
        z3 = a2 @ self.W3 + self.b3
        a3_temp = np.exp(z3)
        a3 = a3_temp / np.sum(a3_temp, keepdims=True, axis=1)

        y_p = np.argmax(a3, axis=1)
        self.error(X, y)

        score = 0
        for i in range(len(y)):
            if y_p[i] == y[i]:
                score += 1
        print("Score is ", score/len(y))


train_images, train_label, test_images, test_label = initial.load_data_mnist()

nn = NueralNetwork(784, 30, 30, 10)
nn.train(train_images, train_label, 1, 20, 0.000001)
nn.show_performance(test_images, test_label)
```
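The score loop in `show_performance` counts correct predictions one at a time. A hypothetical vectorized helper (`accuracy` is not part of the original code) computes the same fraction with a single NumPy comparison. It is demonstrated here on a tiny made-up set of probabilities.

```python
import numpy as np

def accuracy(probs, labels):
    # probs: (n_samples, n_classes) softmax outputs; labels: (n_samples,) integer classes
    predictions = np.argmax(probs, axis=1)
    return float(np.mean(predictions == labels))

probs = np.array([[0.1, 0.7, 0.2],
                  [0.8, 0.1, 0.1],
                  [0.3, 0.3, 0.4]])
labels = np.array([1, 2, 2])

print(accuracy(probs, labels))  # → 0.6666666666666666
```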

The data is loaded by the function below. The `data` directory holds the MNIST database files.

```python
from mnist import MNIST
import numpy as np


def load_data_mnist():
    # Read the raw MNIST files from the 'data' directory
    mdata = MNIST('data')
    train_images, train_labels = mdata.load_training()
    test_images, test_labels = mdata.load_testing()
    # Pixels stay as integers in 0..255 (no normalization)
    return np.array(train_images), np.array(train_labels), np.array(test_images), np.array(test_labels)
```
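The loader returns raw integer pixels in the range 0 to 255, which is what "inputs not normalized" refers to. A common preprocessing step, not part of the original code, is to rescale them to floats in [0, 1] before training. `scale_pixels` is a hypothetical helper, shown here on made-up rows rather than real MNIST data.

```python
import numpy as np

def scale_pixels(images):
    # MNIST pixels are integers 0..255; map them to floats in [0, 1]
    return np.asarray(images, dtype=np.float64) / 255.0

# Two tiny fake "images" standing in for rows returned by load_data_mnist()
raw = np.array([[0, 128, 255],
                [255, 0, 64]])
scaled = scale_pixels(raw)

print(scaled.min(), scaled.max())  # → 0.0 1.0
```

If used, it would be applied to both `train_images` and `test_images`, so the test set goes through the same transformation as the training set.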