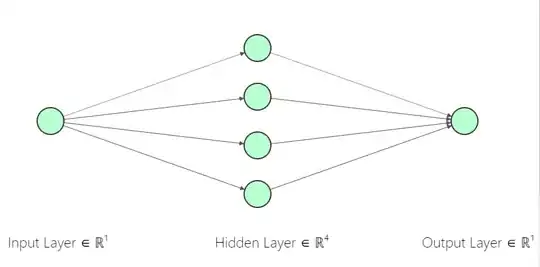

I have implemented a multilayer perceptron to predict the sine of input vectors. Each vector consists of four values chosen at random from {-1, 0, 1}, plus a bias set to 1. The network should predict the sine of the sum of the vector's contents.

E.g. Input = <0,1,-1,0,1>, Output = sin(0+1+(-1)+0+1)
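To make the setup concrete, here is a minimal sketch of how such training pairs could be generated. The function names `make_example` and `make_dataset` are made up for illustration, and the sketch assumes (as in the example above) that the bias entry is included in the sum that goes into the sine.

```python
import math
import random


def make_example(rng):
    """Build one (inputs, target) pair as described in the question."""
    # Four values drawn at random from {-1, 0, 1}.
    values = [rng.choice((-1, 0, 1)) for _ in range(4)]
    # Append the bias, which is always 1.
    inputs = values + [1]
    # Assumption: the target is the sine of the sum of all five entries,
    # bias included, matching the example <0,1,-1,0,1> -> sin(1).
    target = math.sin(sum(inputs))
    return inputs, target


def make_dataset(n_samples, seed=0):
    """Generate a reproducible list of (inputs, target) pairs."""
    rng = random.Random(seed)
    return [make_example(rng) for _ in range(n_samples)]
```

With this construction the sum ranges from -3 to 5, so targets such as sin(-1) and sin(4) are negative.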

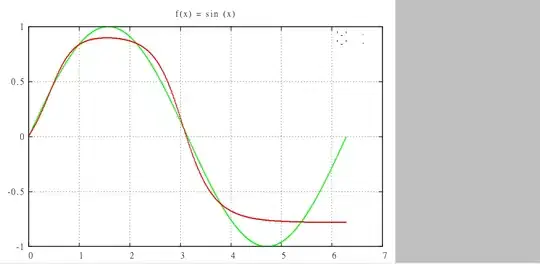

The problem I am having is that the network never predicts a negative value, even though many of the vectors' sine values are negative. It predicts all positive or zero outputs perfectly. I presume there is a problem with how the weights are updated (they are updated after every epoch). Has anyone encountered this problem with neural networks before? Any help at all would be great!

Note: the network has 5 inputs, 6 hidden units in 1 hidden layer, and 1 output. I am using a sigmoid activation function on both the hidden and output layers, and have tried many learning rates (currently 0.1).
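For reference, a minimal sketch of the forward pass for the architecture described (5 inputs, 6 sigmoid hidden units, 1 sigmoid output). This is not my actual code: the names `init_weights` and `forward`, the random weight range, and the absence of a separate bias on the output unit are all assumptions made for illustration.

```python
import math
import random


def sigmoid(z):
    """Logistic sigmoid; its value always lies strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def init_weights(n_in=5, n_hidden=6, seed=0):
    """Random starting weights (the range [-1, 1] is an assumption)."""
    rng = random.Random(seed)
    w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in)]
                for _ in range(n_hidden)]
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    return w_hidden, w_out


def forward(inputs, w_hidden, w_out):
    """One forward pass: sigmoid hidden layer, then a sigmoid output unit."""
    hidden = [sigmoid(sum(w * x for w, x in zip(row, inputs)))
              for row in w_hidden]
    return sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
```

One thing this sketch makes visible: whatever the weights are, a sigmoid output unit returns a value in (0, 1), so a target such as sin(-1) ≈ -0.84 lies outside the range the output can produce. If the real network matches this sketch, rescaling the targets to (sin(x) + 1) / 2, or using a tanh or linear output unit, may be worth trying.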