I have also read: "Convert a 2D array index into a 1D index".

I am trying to debug my program, and I need to understand how to convert a 2D coordinate into a 1D index.

If I have an IJ coordinate, for example (3284, 1352), and want to access a one-dimensional array that stores an image of size (3492, 2188), how can I compute the corresponding index?
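For reference, here is a minimal Python sketch of the two common ways a 2D image is flattened into a 1D buffer. It assumes the size is given as (xLength, yLength) = (3492, 2188), with i indexing the first dimension and j the second. The function name `to_linear` and the `first_axis_fastest` flag are hypothetical; which convention applies depends on how the buffer was written (ITK image buffers, for instance, store x as the fastest-varying axis).

```python
def to_linear(i: int, j: int, size: tuple[int, int], first_axis_fastest: bool = True) -> int:
    """Map a 2D index (i, j) to a 1D offset into a flat buffer.

    size is (n_i, n_j), the number of positions along each axis.
    """
    n_i, n_j = size
    if not (0 <= i < n_i and 0 <= j < n_j):
        raise IndexError(f"({i}, {j}) is outside an image of size {size}")
    if first_axis_fastest:
        # i varies fastest: one full run of n_i values for each j
        return j * n_i + i
    # j varies fastest: one full run of n_j values for each i
    return i * n_j + j


size = (3492, 2188)
print(to_linear(3284, 1352, size))                            # 4724468
print(to_linear(3284, 1352, size, first_axis_fastest=False))  # 7186744
```

Only one of the two results can be right for a given buffer, and neither of them is the plain product i * j.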

So far I have tried:

1) I thought you offset by the row and, within each row, by the column, so I multiplied the two:

i * j = 3284 * 1352 = 4,439,968
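A quick way to see why the product i * j cannot serve as a 1D index is that it is not one-to-one: different pixels can share the same product, and every pixel with i = 0 or j = 0 maps to 0. The sketch below, using a hypothetical helper named `product_collisions`, lists every in-bounds pixel of a 3492 × 2188 image whose product equals 3284 * 1352.

```python
def product_collisions(target: int, size: tuple[int, int]) -> list[tuple[int, int]]:
    """Return every in-bounds (i, j) with i >= 1 whose product i * j equals target."""
    n_i, n_j = size
    return [
        (i, target // i)
        for i in range(1, n_i)  # start at 1 to avoid dividing by zero
        if target % i == 0 and target // i < n_j
    ]


print(product_collisions(3284 * 1352, (3492, 2188)))
# -> [(2704, 1642), (3284, 1352)]
```

So pixel (2704, 1642) lands on the same "index" as (3284, 1352), which is why this approach can return the gray value of an unrelated pixel.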

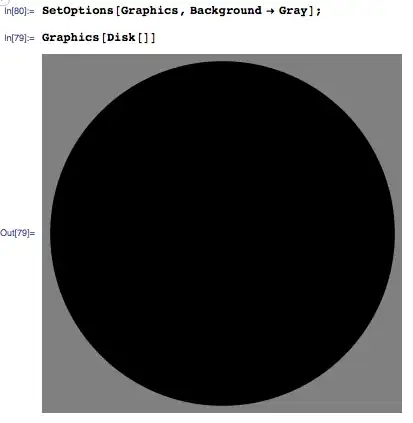

In the viewer, the coordinate (3284, 1352) falls on the segment with gray level 1.

However, when I access the one-dimensional array, which stores the gray value of each pixel, at index 4,439,968, I get a gray intensity of 14.

This is strange because:

-> Segment number 14 is not displayed by ITK-SNAP.

-> Our segment has a gray level of 1, so after manually calculating the index, we should find that gray level.

2) The second approach I tried computes the index as:

column clicked * total number of rows + row clicked

j * xLength + i;

In our example this gives:

1352 * 3492 + 3284 = 4,724,468
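One way to sanity-check this formula is to invert it. Assuming i (the x coordinate) varies fastest and xLength = 3492, `divmod` by xLength recovers (i, j). The helper name `from_linear` and the constants are hypothetical, with the sizes taken from the question. If the round trip succeeds but the looked-up value is still wrong, the arithmetic itself is consistent, and the remaining question is whether the buffer really uses this layout (for example, which axis the viewer reports first).

```python
X_LENGTH = 3492  # size along i (x), taken from the question
Y_LENGTH = 2188  # size along j (y), taken from the question


def from_linear(index: int, x_length: int = X_LENGTH) -> tuple[int, int]:
    """Invert index = j * x_length + i, assuming i varies fastest."""
    j, i = divmod(index, x_length)
    return i, j


index = 1352 * X_LENGTH + 3284
assert 0 <= index < X_LENGTH * Y_LENGTH  # in bounds: 4724468 < 7640496
print(index, from_linear(index))  # 4724468 (3284, 1352)
```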

Looking up that pixel in the data, we find a gray level of 0, which corresponds to the background.

What is wrong with the conversion?

Could you help me please?