I have been struggling with this issue for about three months. The crawler seems to fetch pages only every 10 minutes and to do nothing in between, so the overall throughput is very slow. I am crawling 300 domains in parallel, which with a crawl delay of 10 seconds should give around 30 pages/second. Currently it is about 2 pages/second.
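
The expected rate above is simple arithmetic, and running it backwards is informative. This is a back-of-the-envelope sketch that assumes every domain queue always has a URL ready and that fetch time is negligible compared with the 10-second delay; the class and method names are made up for illustration. Under those assumptions, 2 pages/second at a 10 s delay corresponds to only about 20 domain queues being busy at any moment, which would be consistent with most of the 300 queues sitting empty most of the time.

```java
/**
 * Back-of-the-envelope model of a polite crawler (illustrative only).
 * Assumes every domain queue always has a URL ready and that fetch time is
 * negligible compared with the per-domain delay.
 */
final class CrawlRate {

    /** Ceiling on pages/second when each domain is hit once per delaySeconds. */
    static double expectedPagesPerSecond(int activeDomains, double delaySeconds) {
        return activeDomains / delaySeconds;
    }

    /** Number of continuously busy domain queues needed to explain an observed rate. */
    static double impliedActiveDomains(double observedPagesPerSecond, double delaySeconds) {
        return observedPagesPerSecond * delaySeconds;
    }

    public static void main(String[] args) {
        System.out.println(expectedPagesPerSecond(300, 10.0)); // 30.0
        System.out.println(impliedActiveDomains(2.0, 10.0));   // 20.0
    }
}
```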

The topology runs on a PC with:

- 8 GB memory

- A conventional hard drive (HDD)

- Core Duo CPU

- Ubuntu 16.04

Elasticsearch is installed on another machine with the same specs.

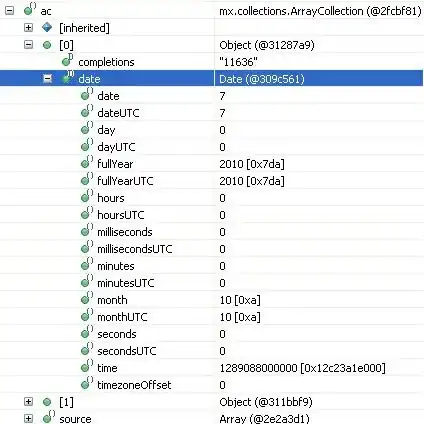

The image above shows the metrics from the Grafana dashboard.

The same pattern is reflected in the process latency shown in the Storm UI:

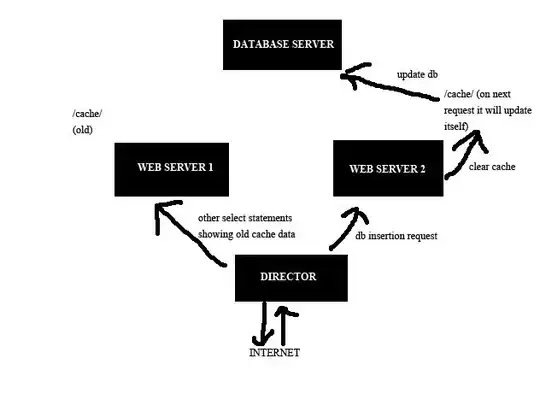

My current StormCrawler topology is:

    spouts:
      - id: "spout"
        className: "com.digitalpebble.stormcrawler.elasticsearch.persistence.AggregationSpout"
        parallelism: 25

    bolts:
      - id: "partitioner"
        className: "com.digitalpebble.stormcrawler.bolt.URLPartitionerBolt"
        parallelism: 1
      - id: "fetcher"
        className: "com.digitalpebble.stormcrawler.bolt.FetcherBolt"
        parallelism: 6
      - id: "sitemap"
        className: "com.digitalpebble.stormcrawler.bolt.SiteMapParserBolt"
        parallelism: 1
      - id: "parse"
        className: "com.digitalpebble.stormcrawler.bolt.JSoupParserBolt"
        parallelism: 1
      - id: "index"
        className: "de.hpi.bpStormcrawler.BPIndexerBolt"
        parallelism: 1
      - id: "status"
        className: "com.digitalpebble.stormcrawler.elasticsearch.persistence.StatusUpdaterBolt"
        parallelism: 4
      - id: "status_metrics"
        className: "com.digitalpebble.stormcrawler.elasticsearch.metrics.StatusMetricsBolt"
        parallelism: 1
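
To put the "is it the hardware?" question into numbers, this sketch adds up the executors declared in the topology and divides them by the two cores of a Core Duo. The parallelism map just restates the values from the topology; the class name is hypothetical, and the count ignores Storm's own executors (ackers, system components) as well as the FetcherBolt's internal fetch threads, so the real number of busy threads is higher.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: tallies the executors declared in the topology. */
final class ExecutorTally {

    /** Parallelism values copied from the spouts/bolts section. */
    static Map<String, Integer> declaredParallelism() {
        Map<String, Integer> p = new LinkedHashMap<>();
        p.put("spout", 25);
        p.put("partitioner", 1);
        p.put("fetcher", 6);
        p.put("sitemap", 1);
        p.put("parse", 1);
        p.put("index", 1);
        p.put("status", 4);
        p.put("status_metrics", 1);
        return p;
    }

    /** Sum of all declared executors. */
    static int totalExecutors(Map<String, Integer> parallelism) {
        return parallelism.values().stream().mapToInt(Integer::intValue).sum();
    }

    public static void main(String[] args) {
        int total = totalExecutors(declaredParallelism());
        int cores = 2; // assumption: Core Duo = 2 physical cores, no hyper-threading
        System.out.println(total + " executors on " + cores + " cores, "
                + (total / cores) + " per core");
    }
}
```

With 40 executors, 25 of them spouts that each query Elasticsearch, sharing two cores, CPU contention is at least plausible and worth checking with top while the crawl is running.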

And the configuration (only the most relevant part):

    config:
      topology.workers: 1
      topology.message.timeout.secs: 300
      topology.max.spout.pending: 100
      topology.debug: false
      fetcher.threads.number: 50
      worker.heap.memory.mb: 2049
      partition.url.mode: byDomain
      fetcher.server.delay: 10.0
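
These settings interact, so here is a rough model of how many URLs can be in flight and how long they may wait. It is a sketch under three assumptions: topology.max.spout.pending applies per spout task (which is how Storm defines it), fetcher.threads.number applies per FetcherBolt instance, and a domain's queue releases one URL every fetcher.server.delay seconds. The class and method names are hypothetical and this is not StormCrawler code. With an even spread the numbers look fine (about 8 URLs and 80 s of waiting per domain), so the in-flight cap alone would not explain 2 pages/second; it would only matter if the spout handed over URLs unevenly, so that a domain got more than about 300 s / 10 s = 30 URLs at once and the later ones exceeded topology.message.timeout.secs before being fetched.

```java
/**
 * Rough in-flight / queueing model for the config (illustrative, not StormCrawler code).
 * Assumes max.spout.pending is per spout task, fetcher.threads.number is per
 * FetcherBolt instance, and each domain queue releases one URL per delay.
 */
final class InFlightModel {

    /** Topology-wide cap on un-acked tuples: spout tasks x max.spout.pending. */
    static int maxTuplesInFlight(int spoutTasks, int maxSpoutPending) {
        return spoutTasks * maxSpoutPending;
    }

    /** Total fetch threads across all FetcherBolt instances. */
    static int totalFetchThreads(int fetcherInstances, int threadsPerInstance) {
        return fetcherInstances * threadsPerInstance;
    }

    /** Approximate wait for the last of queuedPerDomain URLs in one domain queue. */
    static double worstCaseWaitSeconds(int queuedPerDomain, double delaySeconds) {
        return queuedPerDomain * delaySeconds;
    }

    /** Largest per-domain backlog that drains before topology.message.timeout.secs. */
    static int maxQueuedBeforeTimeout(int messageTimeoutSecs, double delaySeconds) {
        return (int) Math.floor(messageTimeoutSecs / delaySeconds);
    }

    public static void main(String[] args) {
        int inFlight = maxTuplesInFlight(25, 100);
        int evenSharePerDomain = inFlight / 300; // integer share if spread evenly over 300 domains
        System.out.println("max tuples in flight: " + inFlight);
        System.out.println("fetch threads: " + totalFetchThreads(6, 50));
        System.out.println("wait at even share (" + evenSharePerDomain + " URLs): "
                + worstCaseWaitSeconds(evenSharePerDomain, 10.0) + " s");
        System.out.println("max backlog per domain before timeout: "
                + maxQueuedBeforeTimeout(300, 10.0));
    }
}
```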

And here is the Storm configuration (again, only the relevant part):

    nimbus.childopts: "-Xmx1024m -Djava.net.preferIPv4Stack=true"
    ui.childopts: "-Xmx768m -Djava.net.preferIPv4Stack=true"
    supervisor.childopts: "-Djava.net.preferIPv4Stack=true"
    worker.childopts: "-Xmx1500m -Djava.net.preferIPv4Stack=true"

Do you have any idea what could be the problem, or is it simply a hardware limitation?
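
On the hardware question, the explicit heap sizes in the Storm configuration can be added up against the 8 GB of RAM. This sketch assumes Nimbus, the UI, the supervisor and the workers all run on the crawler PC; its parser only understands -Xmx values with a g, m or k suffix (or plain bytes) and counts a process without -Xmx as 0, so the supervisor, the OS and thread stacks are not included. The class and method names are hypothetical. One thing that may be worth verifying, although I am not certain of Storm's exact behaviour: as far as I know, worker.heap.memory.mb is applied through the %HEAP-MEM% placeholder in worker.childopts, so with an explicit -Xmx1500m the worker JVM may actually run with 1,500 MB rather than the 2,049 MB set in the topology config. Checking the worker's command line (for example with ps) would settle it.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper: sums explicit -Xmx heaps from the Storm configuration. */
final class HeapBudget {

    /** Returns the -Xmx value in MB, or 0 if the options set no explicit heap. */
    static int xmxMegabytes(String childopts) {
        for (String token : childopts.trim().split("\\s+")) {
            if (!token.startsWith("-Xmx")) {
                continue;
            }
            String value = token.substring(4).toLowerCase();
            char unit = value.charAt(value.length() - 1);
            String digits = value.substring(0, value.length() - 1);
            switch (unit) {
                case 'g': return Integer.parseInt(digits) * 1024;
                case 'm': return Integer.parseInt(digits);
                case 'k': return Integer.parseInt(digits) / 1024;
                default:  return (int) (Long.parseLong(value) / (1024L * 1024L)); // plain bytes
            }
        }
        return 0; // no -Xmx: JVM default heap, not counted here
    }

    /** childopts values copied from the Storm configuration. */
    static Map<String, String> stormChildopts() {
        Map<String, String> opts = new LinkedHashMap<>();
        opts.put("nimbus", "-Xmx1024m -Djava.net.preferIPv4Stack=true");
        opts.put("ui", "-Xmx768m -Djava.net.preferIPv4Stack=true");
        opts.put("supervisor", "-Djava.net.preferIPv4Stack=true");
        opts.put("worker", "-Xmx1500m -Djava.net.preferIPv4Stack=true");
        return opts;
    }

    /** Sum of explicit heaps; worker.childopts applies once per worker JVM. */
    static int totalExplicitHeapMb(Map<String, String> childopts, int workers) {
        int total = 0;
        for (Map.Entry<String, String> e : childopts.entrySet()) {
            int mb = xmxMegabytes(e.getValue());
            total += e.getKey().equals("worker") ? mb * workers : mb;
        }
        return total;
    }

    public static void main(String[] args) {
        for (int workers : new int[] {1, 2}) {
            System.out.println(workers + " worker(s): "
                    + totalExplicitHeapMb(stormChildopts(), workers) + " MB of 8192 MB");
        }
    }
}
```

With one worker the explicit heaps come to 3,292 MB; with two workers they reach 4,792 MB, before counting the supervisor, the OS page cache and the fetch threads' stacks.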

What I have already tried:

- Increased and decreased fetcher.server.delay, which didn't change anything

- Increased and decreased the number of fetcher threads

- Experimented with the parallelism settings

- Checked whether network bandwidth is the limit: at 30 pages/second with an average page size of 0.5 MB the crawler would need 15 MB/s, i.e. 120 Mbit/s, which is well below the available 400 Mbit/s, so bandwidth should not be the problem

- Increased the number of workers
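
The bandwidth check in the list above can be written out as a small unit conversion. This sketch assumes decimal megabytes and 8 bits per byte; the class and method names are illustrative only. It also shows that at the current 2 pages/second the crawler would be using only about 8 Mbit/s, which supports ruling bandwidth out.

```java
/** Converts a crawl rate and average page size into the bandwidth it needs. */
final class BandwidthCheck {

    /** Required bandwidth in Mbit/s (8 bits per byte, decimal megabytes assumed). */
    static double requiredMbitPerSecond(double pagesPerSecond, double avgPageMegabytes) {
        double megabytesPerSecond = pagesPerSecond * avgPageMegabytes;
        return megabytesPerSecond * 8.0;
    }

    public static void main(String[] args) {
        double needed = requiredMbitPerSecond(30.0, 0.5);  // target rate
        double observed = requiredMbitPerSecond(2.0, 0.5); // current rate
        double available = 400.0;
        System.out.printf("target needs %.0f of %.0f Mbit/s (%.0f%%)%n",
                needed, available, 100 * needed / available);
        System.out.printf("current rate uses about %.0f Mbit/s%n", observed);
    }
}
```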

Do you have any other ideas about what I should check, or what could explain the slow fetching? Could it simply be the slow hardware, or is Elasticsearch the bottleneck?

Thank you very much in advance.

EDIT:

I changed the topology to two workers and now get a recurring error:

    2018-07-03 17:18:46.326 c.d.s.e.p.AggregationSpout Thread-33-spout-executor[26 26] [INFO] [spout #12] Populating buffer with nextFetchDate <= 2018-06-21T17:52:42+02:00
    2018-07-03 17:18:46.327 c.d.s.e.p.AggregationSpout I/O dispatcher 26 [ERROR] Exception with ES query
    java.io.IOException: Unable to parse response body for Response{requestLine=POST /status/status/_search?typed_keys=true&ignore_unavailable=false&expand_wildcards=open&allow_no_indices=true&preference=_shards%3A12&search_type=query_then_fetch&batched_reduce_size=512 HTTP/1.1, host=http://ts5565.byod.hpi.de:9200, response=HTTP/1.1 200 OK}
        at org.elasticsearch.client.RestHighLevelClient$1.onSuccess(RestHighLevelClient.java:548) [stormjar.jar:?]
        at org.elasticsearch.client.RestClient$FailureTrackingResponseListener.onSuccess(RestClient.java:600) [stormjar.jar:?]
        at org.elasticsearch.client.RestClient$1.completed(RestClient.java:355) [stormjar.jar:?]
        at org.elasticsearch.client.RestClient$1.completed(RestClient.java:346) [stormjar.jar:?]
        at org.apache.http.concurrent.BasicFuture.completed(BasicFuture.java:119) [stormjar.jar:?]
        at org.apache.http.impl.nio.client.DefaultClientExchangeHandlerImpl.responseCompleted(DefaultClientExchangeHandlerImpl.java:177) [stormjar.jar:?]
        at org.apache.http.nio.protocol.HttpAsyncRequestExecutor.processResponse(HttpAsyncRequestExecutor.java:436) [stormjar.jar:?]
        at org.apache.http.nio.protocol.HttpAsyncRequestExecutor.inputReady(HttpAsyncRequestExecutor.java:326) [stormjar.jar:?]
        at org.apache.http.impl.nio.DefaultNHttpClientConnection.consumeInput(DefaultNHttpClientConnection.java:265) [stormjar.jar:?]
        at org.apache.http.impl.nio.client.InternalIODispatch.onInputReady(InternalIODispatch.java:81) [stormjar.jar:?]
        at org.apache.http.impl.nio.client.InternalIODispatch.onInputReady(InternalIODispatch.java:39) [stormjar.jar:?]
        at org.apache.http.impl.nio.reactor.AbstractIODispatch.inputReady(AbstractIODispatch.java:114) [stormjar.jar:?]
        at org.apache.http.impl.nio.reactor.BaseIOReactor.readable(BaseIOReactor.java:162) [stormjar.jar:?]
        at org.apache.http.impl.nio.reactor.AbstractIOReactor.processEvent(AbstractIOReactor.java:337) [stormjar.jar:?]
        at org.apache.http.impl.nio.reactor.AbstractIOReactor.processEvents(AbstractIOReactor.java:315) [stormjar.jar:?]
        at org.apache.http.impl.nio.reactor.AbstractIOReactor.execute(AbstractIOReactor.java:276) [stormjar.jar:?]
        at org.apache.http.impl.nio.reactor.BaseIOReactor.execute(BaseIOReactor.java:104) [stormjar.jar:?]
        at org.apache.http.impl.nio.reactor.AbstractMultiworkerIOReactor$Worker.run(AbstractMultiworkerIOReactor.java:588) [stormjar.jar:?]
        at java.lang.Thread.run(Thread.java:748) [?:1.8.0_171]
    Caused by: java.lang.NullPointerException

Even so, the crawling process now seems much more balanced, but it still does not fetch many links.

Also, after running the topology for a few weeks, the latency went up considerably.