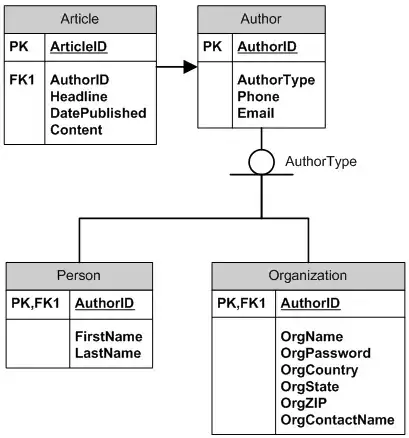

I am playing with Rule 110 of the Wolfram cellular automata. Given a line of zeroes and ones, you can calculate the next line with these rules:

Starting with 00000000....1 (a single 1 at the end), you get this sequence:
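For reference, here is a minimal plain-Python sketch of the rule and the starting row. The lookup table is the standard Rule 110 table; the wrap-around (periodic) neighbours are an assumption chosen to match the `% W` indexing used further down, and the names `RULE_110`, `next_row` and `evolve` are just illustrative.

```python
# Rule 110 lookup: (left, centre, right) -> next value of the centre cell.
RULE_110 = {
    (1, 1, 1): 0, (1, 1, 0): 1, (1, 0, 1): 1, (1, 0, 0): 0,
    (0, 1, 1): 1, (0, 1, 0): 1, (0, 0, 1): 1, (0, 0, 0): 0,
}


def next_row(row):
    """Apply Rule 110 once, assuming wrap-around (periodic) neighbours."""
    n = len(row)
    return [
        RULE_110[(row[(j - 1) % n], row[j], row[(j + 1) % n])]
        for j in range(n)
    ]


def evolve(width, steps):
    """Return `steps` rows of the automaton, starting from 00...01."""
    rows = [[0] * (width - 1) + [1]]
    for _ in range(steps - 1):
        rows.append(next_row(rows[-1]))
    return rows


if __name__ == "__main__":
    # Print a small 10*10 board, one row per line.
    for row in evolve(10, 10):
        print("".join(str(c) for c in row))
```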

Just out of curiosity, I decided to approximate these rules with a polynomial, so that cells could be not only 0 and 1 but also a gray value in between:

    def triangle(x,y,z,v0):
        v=(y + y * y + y * y * y - 3. * (1. + x) * y * z + z * (1. + z + z * z)) / 3.
        return (v-v0)*(v-v0)

So if x, y, z and v0 (each 0 or 1) match any of the rules from the table, it returns 0, and a positive nonzero value otherwise.
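That claim can be checked by brute force over all eight 0/1 neighbourhoods. The sketch below assumes the Boolean form of Rule 110, `(y OR z) AND NOT (x AND y AND z)`, as the reference; `rule110`, `poly` and `mismatches` are illustrative names, and `poly` is the same expression as `v` in `triangle` above.

```python
from itertools import product


def rule110(x, y, z):
    # Rule 110 in Boolean form: (y OR z) AND NOT (x AND y AND z).
    return int((y or z) and not (x and y and z))


def poly(x, y, z):
    # Same polynomial as `v` in triangle().
    return (y + y * y + y * y * y - 3.0 * (1.0 + x) * y * z
            + z * (1.0 + z + z * z)) / 3.0


def triangle(x, y, z, v0):
    v = poly(x, y, z)
    return (v - v0) * (v - v0)


# Collect every 0/1 neighbourhood where the polynomial disagrees with the rule.
mismatches = [
    (x, y, z)
    for x, y, z in product((0, 1), repeat=3)
    if triangle(x, y, z, rule110(x, y, z)) != 0.0
]

if __name__ == "__main__":
    print("mismatches:", mismatches)
```

An empty `mismatches` list means the polynomial agrees with the rule on all binary inputs; it says nothing about how the polynomial behaves for gray values in between.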

Next, I added all possible groups of 4 neighbors into a single sum, which is zero for integer solutions:

    def eval():
        s = 0.
        for i in range(W - 1):
            for j in range(1, W + 1):
                xx = x[i, (j - 1) % W]
                yy = x[i, j % W]
                zz = x[i, (j + 1) % W]
                r = x[i + 1, j % W]
                s += triangle(xx, yy, zz, r)
        for j in range(W - 1): s += x[0, j] * x[0, j]
        s += (1 - x[0, W - 1]) * (1 - x[0, W - 1])
        return torch.sqrt(s)
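As a sanity check on this sum, here is a plain-float sketch (no autograd) that evaluates the same expression on an exact Rule 110 board and on the all-zero board the optimiser starts from. `objective` and `exact_grid` are hypothetical helper names, not part of the original code, and the Boolean form of the rule is assumed for building the exact board.

```python
import math


def triangle(x, y, z, v0):
    v = (y + y * y + y * y * y - 3.0 * (1.0 + x) * y * z
         + z * (1.0 + z + z * z)) / 3.0
    return (v - v0) * (v - v0)


def objective(grid):
    """Same sum as eval(), written for a list of lists of numbers."""
    w = len(grid)
    s = 0.0
    for i in range(w - 1):
        for j in range(w):  # covers the same cells as range(1, W + 1) with j % W
            s += triangle(grid[i][(j - 1) % w], grid[i][j],
                          grid[i][(j + 1) % w], grid[i + 1][j])
    # First-row conditions: all zeros except a 1 in the last cell.
    for j in range(w - 1):
        s += grid[0][j] * grid[0][j]
    s += (1 - grid[0][w - 1]) * (1 - grid[0][w - 1])
    return math.sqrt(s)


def exact_grid(w):
    """Exact Rule 110 evolution from 00...01 with periodic neighbours."""
    rows = [[0] * (w - 1) + [1]]
    for _ in range(w - 1):
        p = rows[-1]
        rows.append([
            int((p[j] or p[(j + 1) % w])
                and not (p[(j - 1) % w] and p[j] and p[(j + 1) % w]))
            for j in range(w)
        ])
    return rows


if __name__ == "__main__":
    print(objective(exact_grid(10)))                       # exact board
    print(objective([[0.0] * 10 for _ in range(10)]))      # all-zero start
```

On the exact board every term vanishes, while on the all-zero board only the last first-row condition contributes.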

At the bottom of this function I also add the boundary conditions for the first line, so that all elements are 0 except the last one, which is 1. Finally, I decided to minimize this sum of squares over a W*W matrix with PyTorch:

    x = Variable(torch.DoubleTensor(W,W).zero_(), requires_grad=True)
    opt = torch.optim.LBFGS([x],lr=.1)
    for i in range(15500):
        def closure():
            opt.zero_grad()
            s=eval()
            s.backward()
            return s
        opt.step(closure)

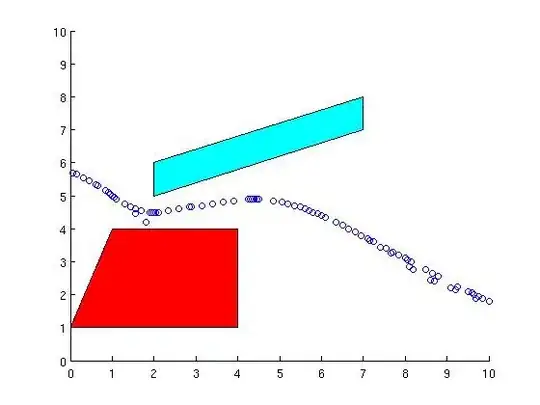

Here is the full code; you can try it yourself. The problem is that for a 10*10 board it converges to the correct solution in ~20 steps:

But if I take a 15*15 board, it never finishes converging:

The graph on the right shows how the sum of squares changes with each iteration, and you can see that it never reaches zero. My question is why this happens and how I can fix it. I tried different PyTorch optimisers, but all of them perform worse than LBFGS. I also tried different learning rates. Any ideas why this happens and how I can reach the final point during optimisation?

UPD: improved convergence graph, log of the sum of squares (SOS):

UPD2: I also tried doing the same in C++ with dlib, and I don't have any convergence issues there; it goes much deeper in much less time:

I am using this code for optimisation in C++:

    find_min_using_approximate_derivatives(bfgs_search_strategy(),
                                           objective_delta_stop_strategy(1e-87),
                                           s, x, -1)