I have the following dataset, represented as a NumPy array:

```python
direccion_viento_pos

Out[32]:
array([['S'],
       ['S'],
       ['S'],
       ...,
       ['SO'],
       ['NO'],
       ['SO']], dtype=object)
```

The shape of this array is:

```python
direccion_viento_pos.shape
(17249, 8)
```

I am using Python and scikit-learn to encode this categorical variable as follows:

```python
from __future__ import unicode_literals
import pandas as pd
import numpy as np
# from sklearn import preprocessing
# from matplotlib import pyplot as plt
from sklearn.preprocessing import MinMaxScaler
from sklearn.preprocessing import LabelEncoder, OneHotEncoder
```

Then I create a label encoder object:

```python
labelencoder_direccion_viento_pos = LabelEncoder()
```

I take column 0 (the only column) of direccion_viento_pos and apply the fit_transform() method to all its rows:

```python
direccion_viento_pos[:, 0] = labelencoder_direccion_viento_pos.fit_transform(direccion_viento_pos[:, 0])
```

Now direccion_viento_pos looks like this:

```python
direccion_viento_pos[:, 0]
array([5, 5, 5, ..., 7, 3, 7], dtype=object)
```
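LabelEncoder assigns integer codes in the sorted order of the labels it sees, which explains the values above. A minimal sketch, using a hypothetical sample that contains each of the 8 directions once (not the real data):

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder

# Hypothetical sample containing each of the 8 wind directions once
directions = np.array(['S', 'SO', 'NO', 'N', 'NE', 'E', 'O', 'SE'], dtype=object)

encoder = LabelEncoder()
codes = encoder.fit_transform(directions)

# classes_ holds the sorted labels; each label's code is its index in classes_
mapping = {label: code for code, label in enumerate(encoder.classes_)}
print(mapping)
print(codes)
```

Here S, SO and NO get 5, 7 and 3, matching the output above. The integers follow alphabetical order, not compass geometry, so SO (7) looks "larger" than NO (3) for no physical reason.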

At this point each row/observation of direccion_viento_pos has a numeric value, but I want to solve the weighting problem: some rows get a higher value than others, even though the wind directions have no such order.

For this reason, I create dummy variables, which according to this reference are:

> A dummy variable or indicator variable is an artificial variable created to represent an attribute with two or more distinct categories/levels.
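To illustrate that definition, here is a minimal sketch using pandas.get_dummies on a small, hypothetical sample of the column (not the real data). It produces one indicator column per distinct category, with exactly one 1 in each row:

```python
import pandas as pd

# Hypothetical sample of the wind-direction column
directions = pd.Series(['S', 'S', 'SO', 'NO', 'SO'])

# One indicator column per distinct category (sorted); cast to int for 0/1 output
dummies = pd.get_dummies(directions).astype(int)
print(dummies)
```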

In the context of direccion_viento_pos, I have 8 values:

- SO: southwest (Sur oeste)
- SE: southeast (Sur este)
- S: south (Sur)
- N: north (Norte)
- NO: northwest (Nor oeste)
- NE: northeast (Nor este)
- O: west (Oeste)
- E: east (Este)

That is, 8 categories.

Next, I create a OneHotEncoder object, using the categorical_features parameter to specify which features should be treated as categorical:

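```python
# Note: categorical_features was deprecated in scikit-learn 0.20 and removed in 0.22,
# so this call only works on older versions
onehotencoder = OneHotEncoder(categorical_features = [0])
```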

Then I apply this encoder to the direccion_viento_pos matrix:

```python
direccion_viento_pos = onehotencoder.fit_transform(direccion_viento_pos).toarray()
```

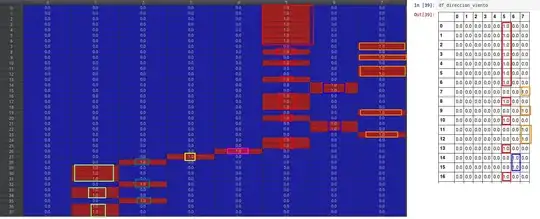

After encoding, direccion_viento_pos looks like this:

```python
direccion_viento_pos
array([[0., 0., 0., ..., 1., 0., 0.],
       [0., 0., 0., ..., 1., 0., 0.],
       [0., 0., 0., ..., 1., 0., 0.],
       ...,
       [0., 0., 0., ..., 0., 0., 1.],
       [0., 0., 0., ..., 0., 0., 0.],
       [0., 0., 0., ..., 0., 0., 1.]])
```

So far, I have created one dummy variable for each category.
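In scikit-learn 0.20 and later, OneHotEncoder accepts string columns directly, so the LabelEncoder step and the categorical_features parameter are not needed. A minimal sketch of the equivalent step, assuming a small hypothetical sample of the raw string column; passing the 8 directions through the categories parameter guarantees 8 output columns even when a sample lacks some directions:

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# The 8 wind directions listed in the question, in sorted order
CATEGORIES = ['E', 'N', 'NE', 'NO', 'O', 'S', 'SE', 'SO']

# Hypothetical sample of the raw string column (before any LabelEncoder step)
direccion_viento_pos = np.array([['S'], ['S'], ['SO'], ['NO'], ['SO']], dtype=object)

# One list of categories per input column; output is sparse, so densify it
onehotencoder = OneHotEncoder(categories=[CATEGORIES])
dummies = onehotencoder.fit_transform(direccion_viento_pos).toarray()

print(dummies.shape)  # (5, 8)
```

Fixing the category list also keeps the column order stable between training and prediction data.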

I described this process in detail to set up my question.

If these dummy variables are already in the 0-1 range, is it necessary to apply MinMaxScaler feature scaling to them?

Some say it is not necessary to scale these dummy variables; others say it is necessary if we want accurate predictions.

I ask because when I apply MinMaxScaler with feature_range=(0, 1), some of my values change in certain positions, even though they still remain within that range.

What is the best option for my direccion_viento_pos dataset?
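A quick check of what MinMaxScaler does to 0/1 dummy columns may help frame the question. This is a sketch on a small hypothetical matrix, not the real 17249-row data. A column that contains both 0 and 1 maps through (x - 0) / (1 - 0) = x, and an all-zero column stays 0. One case that does change is a column that is constant at 1: its range is zero, so MinMaxScaler maps it to the lower bound of feature_range.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical one-hot matrix: 5 rows, 8 dummy columns (E, N, NE, NO, O, S, SE, SO)
dummies = np.array([
    [0, 0, 0, 0, 0, 1, 0, 0],  # S
    [0, 0, 0, 0, 0, 1, 0, 0],  # S
    [0, 0, 0, 0, 0, 0, 0, 1],  # SO
    [0, 0, 0, 1, 0, 0, 0, 0],  # NO
    [0, 0, 0, 0, 0, 0, 0, 1],  # SO
], dtype=float)

scaler = MinMaxScaler(feature_range=(0, 1))
scaled = scaler.fit_transform(dummies)

# Columns with min 0 and max 1, and all-zero columns, come out unchanged
print(np.array_equal(scaled, dummies))  # True

# Edge case: a column constant at 1 has zero range and is mapped to 0
constant_ones = np.ones((3, 1))
print(MinMaxScaler().fit_transform(constant_ones).ravel())  # [0. 0. 0.]
```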