I'm going to test several Python APIs with Locust. The backend runs on Google App Engine with automatic scaling, so measuring resource utilization isn't a top priority for me. My goal is only to measure API response times under a higher number of concurrent requests and to uncover any threading issues.

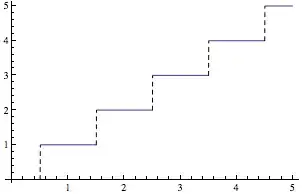

I need to run the tests with up to 1 million users. I'll run Locust in distributed mode and follow a staircase ramp-up pattern: ramp up to 100k users, hold a steady load of 100k users for 30 minutes, then move to 200k concurrent users, and so on, as shown in the diagram above.
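One way to express this staircase in Locust is a custom load shape: a `LoadTestShape` subclass whose `tick()` method returns `(user_count, spawn_rate)`, or `None` to stop the test. The sketch below is a minimal version under two assumptions not stated in the question: a spawn rate of 1,000 users/s, and that each 30-minute hold begins only after the ramp to that level finishes. The schedule itself is a plain function so it can be checked without Locust installed.

```python
# Staircase load shape sketch: 100k-user steps up to 1M users, 30-min hold per step.
# ASSUMPTIONS (not from the question): SPAWN_RATE, and that the hold starts after the ramp.

STEP_USERS = 100_000      # users added at each step
MAX_USERS = 1_000_000     # final user count
HOLD_SECONDS = 30 * 60    # steady load duration at each level
SPAWN_RATE = 1_000        # ASSUMPTION: users started per second

RAMP_SECONDS = STEP_USERS / SPAWN_RATE       # time needed to add one step's users
STEP_SECONDS = RAMP_SECONDS + HOLD_SECONDS   # ramp + hold for each level
NUM_STEPS = MAX_USERS // STEP_USERS


def stair_step(run_time):
    """Return (user_count, spawn_rate) for the elapsed run time, or None to stop."""
    step = int(run_time // STEP_SECONDS)
    if step >= NUM_STEPS:
        return None  # returning None from tick() tells Locust to stop the test
    return (STEP_USERS * (step + 1), SPAWN_RATE)


try:
    from locust import LoadTestShape
except ImportError:  # lets the schedule above be checked without Locust installed
    LoadTestShape = None

if LoadTestShape is not None:

    class StairsShape(LoadTestShape):
        """Locust calls tick() roughly once per second to get the current target."""

        def tick(self):
            return stair_step(self.get_run_time())
```

With these assumed numbers each ramp takes 100 s, so every level lasts 1,900 s and the full ten-step run takes about 5.3 hours. Note that a shape like this controls the number of users, not the request rate.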

So I want to ensure that I'm making exactly X requests per second at any given time. My understanding is that Locust only lets us control the total number of users and the hatch rate (now called the spawn rate).

So if I want to pace the requests so that Locust sends exactly X requests per second, is there a way to achieve that?
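A common approach is to pace each user rather than the whole test. Locust's `constant_throughput(task_runs_per_second)` wait time (and the older `constant_pacing(seconds)`) makes each user run its task *at most* that many times per second. If each task issues exactly one request, the total rate is roughly `users × per-user rate`. This is a ceiling, not a guarantee: if a response takes longer than the pacing interval, that user falls behind. The sketch below assumes a hypothetical per-user rate of 0.05 tasks/s (so 100k users target at most about 5,000 requests/s) and a hypothetical endpoint path. `expected_rps` is a simplified model for illustration only, not part of Locust.

```python
# Per-user pacing sketch. PER_USER_RATE, USERS and "/hypothetical-endpoint" are
# HYPOTHETICAL values chosen for illustration.

def per_user_rate(target_rps, users):
    """Per-user task rate needed so that `users` users together target `target_rps`."""
    return target_rps / users


def expected_rps(users, rate, response_time):
    """Simplified model: each user runs at most `rate` tasks/s, but a user cannot
    start its next task before the current request returns."""
    interval = 1.0 / rate                  # pacing interval per user
    cycle = max(interval, response_time)   # slow responses stretch the cycle
    return users / cycle


USERS = 100_000
PER_USER_RATE = per_user_rate(5_000, USERS)  # 0.05 tasks/s per user

try:
    from locust import HttpUser, constant_throughput, task
except ImportError:  # lets the math above be checked without Locust installed
    HttpUser = None

if HttpUser is not None:

    class ApiUser(HttpUser):
        # Each user runs its task at most PER_USER_RATE times per second.
        wait_time = constant_throughput(PER_USER_RATE)

        @task
        def call_api(self):
            # One request per task, so the task rate equals the request rate.
            self.client.get("/hypothetical-endpoint")
```

Because the per-user rate is fixed, each staircase step with N users targets at most N × 0.05 requests/s. To confirm that the rate was actually reached, compare it against the requests-per-second figure Locust reports.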

I have gone through the Locust documentation and several related threads, but I haven't found anything that satisfactorily answers my question. I don't want to rely merely on knowing that X users are sending requests; I want to ensure the concurrency level is tested correctly at a specified requests-per-second rate.

I hope my question is detailed enough and doesn't miss any crucial information.