Can I simply take the reciprocal of the wall clock time ?

No, true speedup figures require comparing apples to apples:

This means that the original, pure-[SERIAL] process scheduling ought to be compared with any other scenario in which parts get modified so as to use some sort of parallelism ( the parallel fraction may get re-organised so as to run on N CPUs / computing resources, whereas the serial fraction is left as it was ).

This obviously means that the original [SERIAL] code was extended, both in code ( #pragma decorators, OpenCL modifications, CUDA { host_to_dev | dev_to_host } tooling, etc. ) and in time ( needed to execute these added functionalities, which were not present in the original [SERIAL] code being benchmarked against ), so as to add new sections where the ( possibly [PARALLEL] ) other part of the processing takes place.

This comes at a cost: add-on overhead costs ( to set up, to terminate, and to communicate data from the [SERIAL] part to the [PARALLEL] part and back ), all of which add to the [SERIAL]-part workload ( and to execution time + latency ).

For more details, feel free to read the Criticism section of the article on the re-formulated Amdahl's Law.

The [PARALLEL] portion seems interesting, yet the principal ceiling on the speedup lies in the duration of the [SERIAL] portion ( s = 1 - p ) of the original, to which the add-on durations and added latency costs have to be added. These accumulate alongside the re-organisation of work from the original, pure-[SERIAL] process scheduling to the wished-for [PARALLEL] one, and they must be counted if a realistic evaluation is to be achieved.

run the test on a single processor and set that as the serial time, ...,

as @VictorSong has proposed, sounds easy, but it benchmarks an incoherent system ( not the pure-[SERIAL] original ) and records a skewed yardstick to compare against.

This is the reason why fair methods ought to be engineered. The pure-[SERIAL] original code execution can be time-stamped, so as to show the real durations of the unchanged parts, but the add-on overhead times have to be accounted for as add-on extensions of the serial part of the now-parallelised tests.
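A minimal sketch of such a measurement, assuming Python. The helper names `timed` and `fair_speedup` are hypothetical and not part of any library or of the original post. The point it illustrates is that the yardstick is the untouched pure-[SERIAL] run, while the setup and terminate overheads are charged to the parallelised run:

```python
import time


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed_seconds) from a monotonic clock."""
    t0 = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - t0


def fair_speedup(t_serial_original, t_serial_part, t_setup, t_parallel_part, t_terminate):
    """Speedup measured against the untouched pure-[SERIAL] baseline.

    All arguments are wall-clock durations in seconds:
      t_serial_original : the original code, with no parallel tooling at all
      t_serial_part     : the part that stays serial in the parallelised code
      t_setup           : add-on overhead to set up and ship data to the workers
      t_parallel_part   : duration of the section that actually runs in parallel
      t_terminate       : add-on overhead to collect results and tear down
    """
    t_parallelised = t_serial_part + t_setup + t_parallel_part + t_terminate
    return t_serial_original / t_parallelised


if __name__ == "__main__":
    # Illustrative numbers only (assumed, not measured): 10 s original run,
    # 2 s stays serial, 8 s of work spread over 4 workers takes 2 s,
    # plus 0.5 s each for setup and termination.
    print(fair_speedup(10.0, 2.0, 0.5, 2.0, 0.5))
```

Each duration would come from a call such as `timed(some_section, data)` wrapped around the corresponding section. Dropping the two overhead terms from the denominator is exactly what inflates the reported speedup.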

The re-articulated Amdahl's Law of Diminishing Returns explains this, together with the impacts of add-on overheads and of atomicity-of-processing. The latter rules out any fiction of further speedup growth as more computing resources are added: once the parallel fraction of the processing does not permit any further split of task workloads, due to some form of internal atomicity-of-processing, it cannot be divided further in spite of free processors being available.
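The atomicity effect can be sketched numerically. The model below is an assumption made for illustration: atomicity is treated as a floor `atomic_p` on the duration of the parallel section, with every duration expressed as a fraction of the original pure-[SERIAL] run time. The function and parameter names are hypothetical.

```python
def speedup_with_atomicity(s, n, pso, pto, atomic_p):
    """Overhead-aware speedup with an indivisible (atomic) chunk of parallel work.

    s        : serial fraction of the original run (0..1)
    n        : number of computing resources
    pso, pto : setup / terminate add-on overheads, as fractions of the original run
    atomic_p : shortest duration the parallel section can be split down to (assumed model)
    """
    # The parallel section cannot finish faster than its largest atomic chunk,
    # no matter how many free processors are available.
    parallel_time = max((1.0 - s) / n, atomic_p)
    return 1.0 / (s + pso + parallel_time + pto)


if __name__ == "__main__":
    # Illustrative parameters only: 10 % serial, 1 % overhead each way,
    # atomic chunk worth 5 % of the original run time.
    for n in (1, 2, 8, 32, 1024):
        print(n, speedup_with_atomicity(0.10, n, 0.01, 0.01, 0.05))
```

Under these assumed parameters the speedup stops growing once `( 1 - s ) / N` drops below `atomic_p`; adding further processors beyond that point changes nothing.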

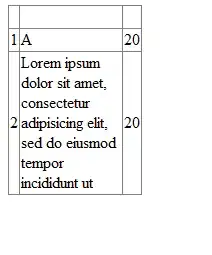

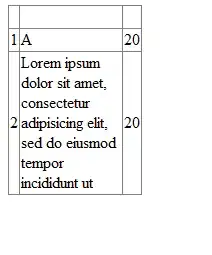

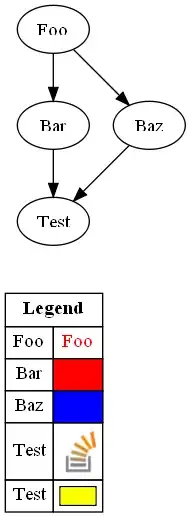

The simpler of the two re-formulated expressions reads :

$$
S = \frac{1}{\,s + \mathrm{pSO} + \dfrac{1 - s}{N} + \mathrm{pTO}\,}
$$

where s, ( 1 - s ), N were defined above, pSO := [PAR]-Setup-Overhead add-on, and pTO := [PAR]-Terminate-Overhead add-on.
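To make the expression S = 1 / ( s + pSO + ( 1 - s ) / N + pTO ) tangible, here is a small Python sketch that evaluates it next to the classical, overhead-naive form. The function names and the sample parameter values are assumptions chosen for illustration, not measurements.

```python
def classical_speedup(s, n):
    """Overhead-naive Amdahl's Law: S = 1 / ( s + ( 1 - s ) / N )."""
    return 1.0 / (s + (1.0 - s) / n)


def overhead_strict_speedup(s, n, pso=0.0, pto=0.0):
    """Re-formulated form: S = 1 / ( s + pSO + ( 1 - s ) / N + pTO ).

    s, pso and pto are fractions of the original pure-[SERIAL] run time.
    """
    return 1.0 / (s + pso + (1.0 - s) / n + pto)


if __name__ == "__main__":
    s, pso, pto = 0.10, 0.05, 0.05  # illustrative values only
    for n in (1, 2, 4, 8, 16, 64):
        print(n, classical_speedup(s, n), overhead_strict_speedup(s, n, pso, pto))
```

With any non-zero overhead, the N = 1 case yields S < 1: the parallelised code run on a single processor is slower than the pure-[SERIAL] original, which is why it makes a skewed baseline.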

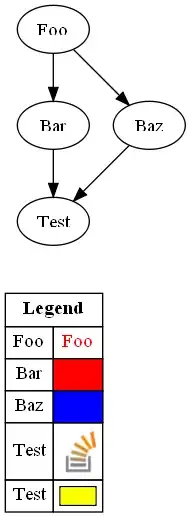

Some interactive GUI tools for further visualisation of the add-on overhead costs are available for parametric simulations here - just move the p-slider towards the actual value of ( 1 - s ), keeping a non-zero fraction for the [SERIAL] part of the original code :