I have a problem with Russian plates. When I run classifychars from the openalpr tools, I get the result below:

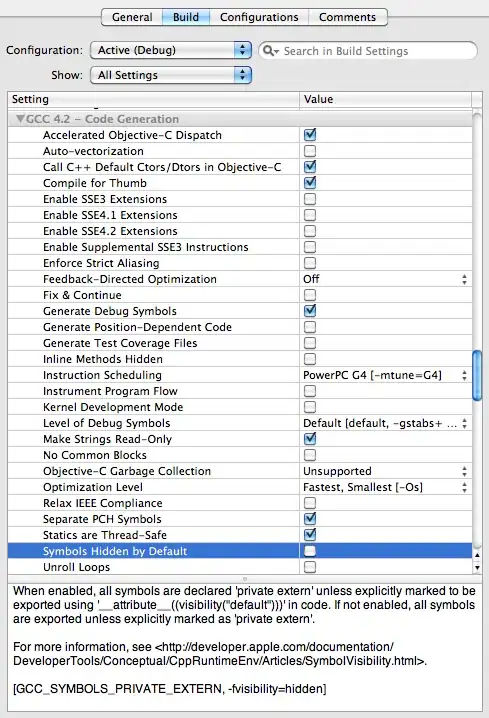

The OCR cut off the upper part of the numbers. I generated a new .conf file for this country with these parameters:

char_analysis_min_pct = 0.29

char_analysis_height_range = 0.20

char_analysis_height_step_size = 0.10

char_analysis_height_num_steps = 6

segmentation_min_speckle_height_percent = 0.3

segmentation_min_box_width_px = 6

segmentation_min_charheight_percent = 0.1;

segmentation_max_segment_width_percent_vs_average = 1.95;

plate_width_mm= 520

plate_height_mm = 112

multiline = 1

char_height_mm = 58

char_width_mm = 44

char_whitespace_top_mm = 18

char_whitespace_bot_mm = 18

template_max_width_px = 300

template_max_height_px = 64

; Higher sensitivity means less lines

plateline_sensitivity_vertical = 10

plateline_sensitivity_horizontal = 45

; Regions smaller than this will be disqualified

min_plate_size_width_px = 65

min_plate_size_height_px = 18

; Results with fewer or more characters will be discarded

postprocess_min_characters = 8

postprocess_max_characters = 9

;detector_file= eu.xml

ocr_language = lamh

;Override for postprocess letters/numbers regex.

postprocess_regex_letters = [A,B,C,E,H,K,M,O,P,T,X,Y]

postprocess_regex_numbers = [0-9]

; Whether the plate is always dark letters on light background, light letters on dark background, or both

; value can be either always, never, or auto

invert = auto
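As a quick sanity check on the values above, here is a small Python sketch. It is not part of OpenALPR and does not reproduce how OpenALPR uses these settings internally; it only assumes that one row of characters plus its top and bottom whitespace should roughly fill the plate height, and it checks what the letters character class actually matches under ordinary regex rules (Python's `re` here, which may differ from the engine OpenALPR uses).

```python
import re

# Values copied from the config above.
plate_height_mm = 112
char_height_mm = 58
char_whitespace_top_mm = 18
char_whitespace_bot_mm = 18

# Height covered by one row of characters plus its top and bottom margins.
used_height_mm = char_whitespace_top_mm + char_height_mm + char_whitespace_bot_mm
unaccounted_mm = plate_height_mm - used_height_mm
char_height_ratio = char_height_mm / plate_height_mm

print(f"used height: {used_height_mm} mm of {plate_height_mm} mm")
print(f"unaccounted: {unaccounted_mm} mm")
print(f"char height / plate height: {char_height_ratio:.2f}")

# The letters class exactly as written in the config.
# Inside [...] a comma is a literal character, not a separator.
letters_class = re.compile(r"[A,B,C,E,H,K,M,O,P,T,X,Y]")
comma_matches = letters_class.fullmatch(",") is not None
print(f"letters class matches a comma: {comma_matches}")
```

With these values the three heights add up to 94 mm, which leaves 18 mm of the 112 mm plate height unaccounted for, and the letters class also accepts a comma. I don't know whether either of these is related to the cropped characters.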

Does anyone have an idea how to solve this?

As a starting point, I used the OCR file from this repository: https://github.com/KostyaKulakov/Russian_System_of_ANPR

Thank you.