I'm trying to write a method that will return a Hibernate object based on a unique but non-primary key. If the entity already exists in the database I want to return it, but if it doesn't I want to create a new instance and save it before returning.

UPDATE: Let me clarify that the application I'm writing this for is basically a batch processor of input files. The system needs to read a file line by line and insert records into the db. The file format is basically a denormalized view of several tables in our schema, so for each line I have to parse out the parent record and either insert it into the db to get a new synthetic key or, if it already exists, select it. Then I can add the associated records in other tables that have foreign keys back to that record.

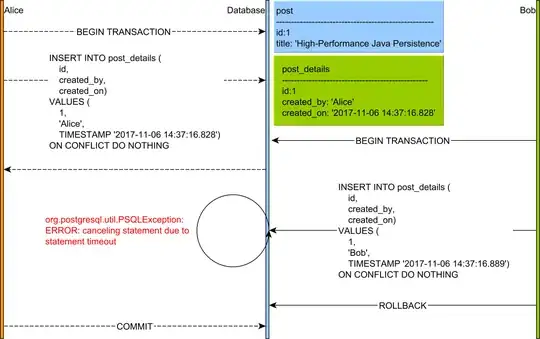

The reason this gets tricky is that each file needs to be either totally imported or not imported at all, i.e. all inserts and updates done for a given file should be a part of one transaction. This is easy enough if there's only one process that's doing all the imports, but I'd like to break this up across multiple servers if possible. Because of these constraints I need to be able to stay inside one transaction, but handle the exceptions where a record already exists.

The mapped class for the parent records looks like this:

    @Entity
    public class Foo {
        @Id
        @GeneratedValue(strategy = IDENTITY)
        private int id;

        @Column(unique = true)
        private String name;

        ...
    }

My initial attempt at writing this method is as follows:

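    public Foo findOrCreate(String name) {
        Foo foo = new Foo();
        foo.setName(name);
        try {
            // Insert first and let the unique constraint on name detect duplicates.
            session.save(foo);
        } catch (ConstraintViolationException e) {
            // The name already exists, so load the existing row instead.
            // eq is a static import of org.hibernate.criterion.Restrictions.eq.
            foo = (Foo) session.createCriteria(Foo.class)
                    .add(eq("name", name))
                    .uniqueResult();
        }
        return foo;
    }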

The problem is when the name I'm looking for exists, an org.hibernate.AssertionFailure exception is thrown by the call to uniqueResult(). The full stack trace is below:

    org.hibernate.AssertionFailure: null id in com.searchdex.linktracer.domain.LinkingPage entry (don't flush the Session after an exception occurs)
        at org.hibernate.event.def.DefaultFlushEntityEventListener.checkId(DefaultFlushEntityEventListener.java:82) [hibernate-core-3.6.0.Final.jar:3.6.0.Final]
        at org.hibernate.event.def.DefaultFlushEntityEventListener.getValues(DefaultFlushEntityEventListener.java:190) [hibernate-core-3.6.0.Final.jar:3.6.0.Final]
        at org.hibernate.event.def.DefaultFlushEntityEventListener.onFlushEntity(DefaultFlushEntityEventListener.java:147) [hibernate-core-3.6.0.Final.jar:3.6.0.Final]
        at org.hibernate.event.def.AbstractFlushingEventListener.flushEntities(AbstractFlushingEventListener.java:219) [hibernate-core-3.6.0.Final.jar:3.6.0.Final]
        at org.hibernate.event.def.AbstractFlushingEventListener.flushEverythingToExecutions(AbstractFlushingEventListener.java:99) [hibernate-core-3.6.0.Final.jar:3.6.0.Final]
        at org.hibernate.event.def.DefaultAutoFlushEventListener.onAutoFlush(DefaultAutoFlushEventListener.java:58) [hibernate-core-3.6.0.Final.jar:3.6.0.Final]
        at org.hibernate.impl.SessionImpl.autoFlushIfRequired(SessionImpl.java:1185) [hibernate-core-3.6.0.Final.jar:3.6.0.Final]
        at org.hibernate.impl.SessionImpl.list(SessionImpl.java:1709) [hibernate-core-3.6.0.Final.jar:3.6.0.Final]
        at org.hibernate.impl.CriteriaImpl.list(CriteriaImpl.java:347) [hibernate-core-3.6.0.Final.jar:3.6.0.Final]
        at org.hibernate.impl.CriteriaImpl.uniqueResult(CriteriaImpl.java:369) [hibernate-core-3.6.0.Final.jar:3.6.0.Final]

Does anyone know what is causing this exception to be thrown? Does Hibernate support a better way of accomplishing this?

Let me also preemptively explain why I'm inserting first and then selecting only if the insert fails. This needs to work in a distributed environment, so I can't synchronize the existence check and the insert across processes. The easiest way to do this is to let the database handle the synchronization by checking for a constraint violation on every insert.
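To make the insert-first idea concrete outside of Hibernate, here is a minimal, self-contained sketch. It is only an analogy: `UniqueNameTable` is a hypothetical in-memory stand-in for the `Foo` table, and `ConcurrentHashMap.putIfAbsent` plays the role of the unique constraint on `name`. It models the race between two writers, not the state of a Hibernate `Session` after an exception and not the one-transaction-per-file requirement.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicInteger;

final class InsertFirstSketch {

    /** Hypothetical stand-in for the Foo table: a unique name mapped to a generated id. */
    static final class UniqueNameTable {
        private final ConcurrentHashMap<String, Integer> idsByName = new ConcurrentHashMap<>();
        private final AtomicInteger nextId = new AtomicInteger(1);

        /** Models an INSERT against a unique column: throws if the name is already present. */
        int insert(String name) {
            int candidate = nextId.getAndIncrement();
            Integer existing = idsByName.putIfAbsent(name, candidate);
            if (existing != null) {
                throw new IllegalStateException("unique constraint violated: " + name);
            }
            return candidate;
        }

        /** Models a SELECT of the id by name; returns null if there is no such row. */
        Integer select(String name) {
            return idsByName.get(name);
        }
    }

    /** Insert first; fall back to a select only when the constraint rejects the insert. */
    static int findOrCreate(UniqueNameTable table, String name) {
        try {
            return table.insert(name);
        } catch (IllegalStateException duplicate) {
            // Another writer got there first, so the row is guaranteed to exist now.
            return table.select(name);
        }
    }

    public static void main(String[] args) throws Exception {
        UniqueNameTable table = new UniqueNameTable();
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            // Two workers race to create the same parent record.
            Callable<Integer> task = () -> findOrCreate(table, "example");
            Future<Integer> a = pool.submit(task);
            Future<Integer> b = pool.submit(task);
            System.out.println("same id: " + a.get().equals(b.get()));
        } finally {
            pool.shutdown();
        }
    }
}
```

Whichever worker wins the race, both end up with the same id, and neither needs a lock around a check-then-insert sequence. That is the property I'm hoping to get from the database constraint.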