I am using a recurrent network (specifically a GRU) to predict a time series with a length of 90 observations. The data is multivariate, and I followed this example.

Option 1:

I use Keras to build the RNN:

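    from keras.models import Sequential
    from keras.layers import GRU, Dense, Dropout, Flatten, Activation
    from keras.callbacks import EarlyStopping, ModelCheckpoint

    # Chronological split: the first 75% of the rows train, the rest test.
    n_train_quarter = int(len(serie) * 0.75)
    train = values[:n_train_quarter, :]
    test = values[n_train_quarter:, :]

    # The last column is the target; all other columns are features.
    X_train, y_train = train[:, :-1], train[:, -1]
    X_test, y_test = test[:, :-1], test[:, -1]

    # Assumed reshape to the 3D (samples, timesteps, features) input that GRU
    # requires, with one time step per row.
    X_train = X_train.reshape((X_train.shape[0], 1, X_train.shape[1]))
    X_test = X_test.reshape((X_test.shape[0], 1, X_test.shape[1]))

    # All parameters can be changed: kernel, activation, optimizer, ...
    model = Sequential()
    model.add(GRU(64, input_shape=(X_train.shape[1], X_train.shape[2]), return_sequences=True))
    model.add(Dropout(0.5))

    # n is chosen at random
    for _ in range(n):
        model.add(GRU(64, kernel_initializer='uniform', return_sequences=True))
        model.add(Dropout(0.5))

    model.add(Flatten())
    model.add(Dense(1))
    model.add(Activation('softmax'))

    # Compile and fit
    model.compile(loss='mean_squared_error', optimizer='SGD')
    early_stopping = EarlyStopping(monitor='val_loss', patience=50)
    checkpointer = ModelCheckpoint(filepath=Checkpoint_mode, verbose=0, save_weights_only=False, save_best_only=True)

    model.fit(X_train, y_train,
              batch_size=256,
              epochs=64,
              validation_split=0.25,
              callbacks=[early_stopping, checkpointer],
              verbose=0,
              shuffle=False)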

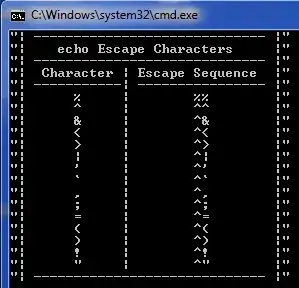

The result with the lowest error looks like the image above (several experiments gave the same result).

Option 2:

    from sklearn.model_selection import train_test_split
    from keras.models import Sequential
    from keras.layers import GRU, Dense, Dropout, Flatten, Activation
    from keras.callbacks import EarlyStopping, ModelCheckpoint

    # Random split: rows are shuffled before being assigned to train or test.
    # X is assumed to already be 3D (samples, timesteps, features).
    X_train, X_test, y_train, y_test = train_test_split(X, Y, test_size=0.33, random_state=42)

    # All parameters can be changed: kernel, activation, optimizer, ...
    model = Sequential()
    model.add(GRU(64, input_shape=(X_train.shape[1], X_train.shape[2]), return_sequences=True))
    model.add(Dropout(0.5))

    # n is chosen at random
    for _ in range(n):
        model.add(GRU(64, kernel_initializer='uniform', return_sequences=True))
        model.add(Dropout(0.5))

    model.add(Flatten())
    model.add(Dense(1))
    model.add(Activation('softmax'))

    # Compile and fit
    model.compile(loss='mean_squared_error', optimizer='SGD')
    early_stopping = EarlyStopping(monitor='val_loss', patience=50)
    checkpointer = ModelCheckpoint(filepath=Checkpoint_mode, verbose=0, save_weights_only=False, save_best_only=True)

    model.fit(X_train, y_train,
              batch_size=256,
              epochs=64,
              validation_split=0.25,
              callbacks=[early_stopping, checkpointer],
              verbose=0,
              shuffle=False)

The result with the lowest error prints as:

Can I use sklearn's "train_test_split", with its random selection, on this data?

Why is the result better with sequential data than with randomly selected data, if GRU works better with sequential data?
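To make the difference between the two options concrete, here is a minimal sketch that compares which time steps end up in the test set under each split. It uses only the standard library and works on row indices rather than real data. The helper names (`chronological_split`, `shuffled_split`, `count_interleaved_test_points`) are hypothetical, and the shuffled version is only an illustrative stand-in: it does not reproduce the exact rows that sklearn's `train_test_split` would select for the same seed.

```python
import math
import random


def chronological_split(n_samples, train_fraction=0.75):
    """Option 1 style: the earliest rows train, the latest rows test."""
    cut = int(n_samples * train_fraction)
    indices = list(range(n_samples))
    return indices[:cut], indices[cut:]


def shuffled_split(n_samples, test_fraction=0.33, seed=42):
    """Option 2 style: rows are shuffled before being split.

    Illustrative stand-in only, not sklearn's exact selection.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = math.ceil(n_samples * test_fraction)
    return sorted(indices[n_test:]), sorted(indices[:n_test])


def count_interleaved_test_points(train_idx, test_idx):
    """Count test time steps that are older than the newest training step."""
    newest_train = max(train_idx)
    return sum(1 for t in test_idx if t < newest_train)


n_samples = 90  # series length mentioned in the question
train_c, test_c = chronological_split(n_samples)
train_s, test_s = shuffled_split(n_samples)

print("chronological:", count_interleaved_test_points(train_c, test_c))
print("shuffled:     ", count_interleaved_test_points(train_s, test_s))
```

With the chronological split the count is zero by construction, because every test step comes after every training step. With the shuffled split, test steps sit between training steps, so the model is scored on points whose neighbours in time it has already seen, and the error may not reflect how well it forecasts unseen future values. Note that `shuffle=False` in `model.fit` only controls the ordering of the training data during fitting; it does not undo a shuffled split. If the sklearn helper is still preferred, `train_test_split` accepts `shuffle=False`, which keeps the rows in their original order.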