I'm a mobile game developer using the Unity engine.

Here is my problem:

I render the static parts of the scene into a render target that has both a color buffer and a depth buffer. As long as the main player's viewpoint stays the same, I reuse that render target in the following frames and draw only the dynamic objects on top of it. The goal is to reduce draw calls and save power on mobile devices. FYI, this strategy cuts power consumption by up to 20% in our MMO mobile game on Android devices.
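
To make the reuse condition concrete, here is a minimal C++ sketch of the "re-render the static layer only when the viewpoint changes" check. `CameraPose`, `StaticLayerCache`, and `needsRebuild` are hypothetical names, not Unity API. The sketch assumes the pose is a position plus a unit quaternion; a real version would also need to invalidate the cache when the FOV, resolution, lighting, or any static object changes.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Hypothetical camera pose: position plus unit rotation quaternion (x, y, z, w).
struct CameraPose {
    std::array<float, 3> position;
    std::array<float, 4> rotation;
};

// Hypothetical helper that decides whether the cached static layer
// (color + depth render target) must be re-rendered this frame.
class StaticLayerCache {
public:
    explicit StaticLayerCache(float epsilon = 1e-4f) : epsilon_(epsilon) {
        assert(epsilon > 0.0f);
    }

    // Force a rebuild, e.g. when a static object, the light, or the FOV changes.
    void invalidate() { valid_ = false; }

    // Returns true if the static layer must be re-rendered for `pose`,
    // and records `pose` as the viewpoint the cache now corresponds to.
    bool needsRebuild(const CameraPose& pose) {
        if (valid_ && samePose(pose, cached_)) {
            return false;  // viewpoint unchanged: reuse cached color + depth
        }
        cached_ = pose;
        valid_ = true;
        return true;
    }

private:
    bool samePose(const CameraPose& a, const CameraPose& b) const {
        for (int i = 0; i < 3; ++i) {
            if (std::fabs(a.position[i] - b.position[i]) > epsilon_) return false;
        }
        // q and -q encode the same rotation, so compare |dot(a, b)| against 1.
        float dot = 0.0f;
        for (int i = 0; i < 4; ++i) dot += a.rotation[i] * b.rotation[i];
        return std::fabs(dot) > 1.0f - epsilon_;
    }

    float epsilon_;
    bool valid_ = false;
    CameraPose cached_{};
};
```

Each frame, when `needsRebuild` returns true you would render the static objects into the cached color and depth target; otherwise you skip straight to drawing the dynamic objects against the cached buffers.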

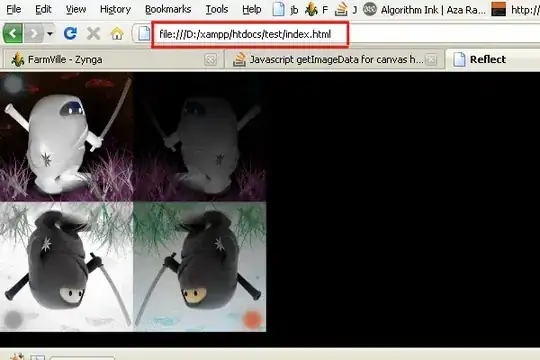

The screenshots are from my test project. The sphere, cube, and terrain are static objects; the red cylinder is moving.

You can see that the depth test result is wrong on Android.

On iOS devices it works fine: the depth test is correct, and the result is almost identical to the one with the optimization turned off. (The shadow is not right, but let's ignore that for now.)

On Android, however, the result is wrong: the moving cylinder is partly occluded by the cube, and the occlusion is not stable from frame to frame.

It looks as if the depth buffer precision is not sufficient. Any ideas about this problem?
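
One way to sanity-check the precision hypothesis is to compute how far apart two surfaces must be before a fixed-point depth buffer can tell them apart. The sketch below assumes a standard perspective projection with the default depth range, where window depth is d(z) = f/(f − n) · (1 − n/z), and assumes Unity's default clipping planes (near 0.3, far 1000). Whether the Android render target actually ends up with a 16-bit depth buffer (for example via the `depth` argument of the `RenderTexture` constructor, or a driver fallback) is an assumption you would need to verify, not a diagnosis.

```cpp
#include <cassert>
#include <cmath>

// Window-space depth in [0, 1] for a point at view distance z (n <= z <= f),
// standard perspective projection, default depth range:
//   d(z) = f / (f - n) * (1 - n / z)
double windowDepth(double z, double n, double f) {
    return f / (f - n) * (1.0 - n / z);
}

// Approximate smallest view-space distance a fixed-point depth buffer with
// `bits` bits can resolve at distance z. One depth step is 1 / (2^bits - 1);
// dividing it by the slope dd/dz = f * n / ((f - n) * z^2) converts it
// into a distance along the view direction.
double depthResolution(double z, double n, double f, int bits) {
    assert(bits > 0 && bits < 32);
    const double step = 1.0 / (std::pow(2.0, bits) - 1.0);
    const double slope = f * n / ((f - n) * z * z);
    return step / slope;
}
```

With n = 0.3 and f = 1000, `depthResolution(50.0, 0.3, 1000.0, 16)` is roughly 0.127 (about 12.7 cm at 50 m), while the 24-bit value is about 256 times smaller. Because the step grows with z² and shrinks roughly in proportion to n, pushing the near plane out is the cheapest way to gain precision.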

I Googled this problem but found no straightforward answers. Some say we can't read the depth buffer on GLES.

For example, from this Unity forum thread: https://forum.unity.com/threads/poor-performance-of-updatedepthtexture-why-is-it-even-needed.197455/

> And then there are cases where platforms don't support reading from the Z buffer at all (GLES when no GL_OES_depth_texture is exposed; or Direct3D9 when no INTZ hack is present in the drivers; or desktop GL on Mac with some buggy Radeon drivers etc.).
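
The GLES condition in that quote can be checked on a given device. On OpenGL ES 2.0, `glGetString(GL_EXTENSIONS)` returns a space-separated list, and depth textures are only available if `GL_OES_depth_texture` is in it (OpenGL ES 3.0 supports depth textures in core). Below is a minimal C++ sketch of a whole-token check; `hasExtension` is a hypothetical helper, and it takes the extension string as input so it does not need a live GL context. In Unity itself, `SystemInfo.SupportsRenderTextureFormat(RenderTextureFormat.Depth)` is the higher-level way to ask a similar question.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Returns true if `name` appears as a whole token in a space-separated
// extension list, such as the string returned by glGetString(GL_EXTENSIONS)
// on OpenGL ES 2.0. A plain substring search is not enough, because one
// extension name can be a prefix of another
// (e.g. GL_OES_depth_texture vs GL_OES_depth_texture_cube_map).
bool hasExtension(const std::string& extensions, const std::string& name) {
    assert(!name.empty());
    std::istringstream tokens(extensions);
    std::string token;
    while (tokens >> token) {
        if (token == name) return true;
    }
    return false;
}
```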

Is this true?