I am trying to overfit a handwriting recognition model of this architecture:

    features = slim.conv2d(features, 16, [3, 3])
    features = slim.max_pool2d(features, 2)
    features = mdrnn(features, 16)
    features = slim.conv2d(features, 32, [3, 3])
    features = slim.max_pool2d(features, 2)
    features = mdrnn(features, 32)
    features = slim.conv2d(features, 64, [3, 3])
    features = slim.max_pool2d(features, 2)
    features = mdrnn(features, 64)
    features = slim.conv2d(features, 128, [3, 3])
    features = mdrnn(features, 128)
    features = slim.max_pool2d(features, 2)
    features = slim.conv2d(features, 256, [3, 3])
    features = slim.max_pool2d(features, 2)
    features = mdrnn(features, 256)
    features = _reshape_to_rnn_dims(features)
    features = bidirectional_rnn(features, 128)
    features = bidirectional_rnn(features, 128)
    features = bidirectional_rnn(features, 128)
    features = bidirectional_rnn(features, 128)
    features = bidirectional_rnn(features, 128)

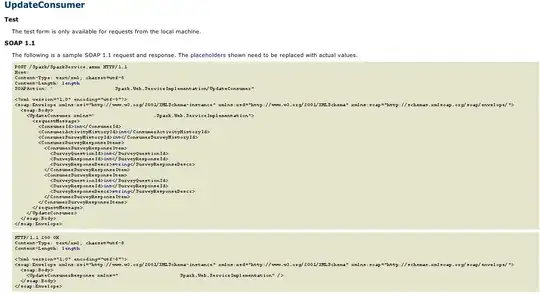

With this mdrnn code from TensorFlow (with a few modifications):

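    # Assumed TF 1.x imports; they are not shown in the snippet as posted.
    import tensorflow as tf
    from tensorflow.contrib import rnn

    def mdrnn(inputs, num_hidden, scope=None):
        # `scope` was referenced but not defined in the posted signature.
        with tf.variable_scope(scope, "multidimensional_rnn", [inputs]):
            # Horizontal pass: scan each row left-to-right and right-to-left.
            hidden_sequence_horizontal = _bidirectional_rnn_scan(inputs,
                                                                 num_hidden // 2)
            with tf.variable_scope("vertical"):
                # Vertical pass: swap height and width, scan, then swap back.
                transposed = tf.transpose(hidden_sequence_horizontal, [0, 2, 1, 3])
                output_transposed = _bidirectional_rnn_scan(transposed, num_hidden // 2)
            output = tf.transpose(output_transposed, [0, 2, 1, 3])
            return output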

    def _bidirectional_rnn_scan(inputs, num_hidden):
        with tf.variable_scope("BidirectionalRNN", [inputs]):
            height = inputs.get_shape().as_list()[1]
            inputs = images_to_sequence(inputs)
            output_sequence = bidirectional_rnn(inputs, num_hidden)
            output = sequence_to_images(output_sequence, height)
            return output

    def images_to_sequence(inputs):
        _, _, width, num_channels = _get_shape_as_list(inputs)
        s = tf.shape(inputs)
        batch_size, height = s[0], s[1]
        return tf.reshape(inputs, [batch_size * height, width, num_channels])

    def sequence_to_images(tensor, height):
        num_batches, width, depth = tensor.get_shape().as_list()
        if num_batches is None:
            num_batches = -1
        else:
            num_batches = num_batches // height
        reshaped = tf.reshape(tensor,
                              [num_batches, width, height, depth])
        return tf.transpose(reshaped, [0, 2, 1, 3])

    def bidirectional_rnn(inputs, num_hidden, concat_output=True,
                          scope=None):
        with tf.variable_scope(scope, "bidirectional_rnn", [inputs]):
            cell_fw = rnn.LSTMCell(num_hidden)
            cell_bw = rnn.LSTMCell(num_hidden)
            outputs, _ = tf.nn.bidirectional_dynamic_rnn(cell_fw,
                                                         cell_bw,
                                                         inputs,
                                                         dtype=tf.float32)
            if concat_output:
                return tf.concat(outputs, 2)
            return outputs
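
One way to sanity-check the two reshape helpers is a round trip in NumPy with the RNN in between replaced by the identity. This is only a sketch: `images_to_sequence_np` and `sequence_to_images_np` are hypothetical NumPy mirrors of the reshapes as posted above, and the toy input shape is made up for illustration.

```python
import numpy as np

def images_to_sequence_np(images):
    # [batch, height, width, channels] -> [batch * height, width, channels]
    batch, height, width, channels = images.shape
    return images.reshape(batch * height, width, channels)

def sequence_to_images_np(seq, height):
    # Mirrors the posted helper: reshape to [batch, width, height, depth],
    # then swap axes 1 and 2 to get [batch, height, width, depth].
    rows, width, depth = seq.shape
    reshaped = seq.reshape(rows // height, width, height, depth)
    return reshaped.transpose(0, 2, 1, 3)

# Toy image: batch=1, height=2, width=3, channels=1, filled with 0..5.
images = np.arange(1 * 2 * 3 * 1).reshape(1, 2, 3, 1)

# With an identity "RNN", a consistent pair of helpers should return the input.
round_trip = sequence_to_images_np(images_to_sequence_np(images), height=2)

print("shape preserved:", round_trip.shape == images.shape)
print("values preserved:", np.array_equal(round_trip, images))
```

If the values are not preserved in a check like this, the pixels are being rearranged between the horizontal and vertical passes, which may be worth comparing against the unmodified TensorFlow helpers.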

The training ctc_loss decreases but it doesn't converge even after a thousand epochs. The label error rate just fluctuates.

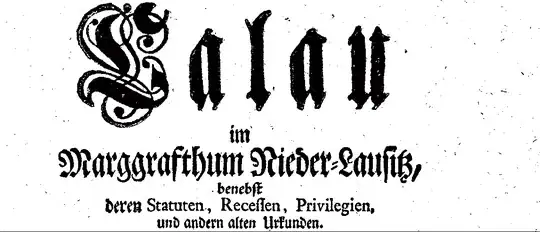

I preprocess the image such that it looks like this:

I also noticed that the network generates the same predictions at some points:

    INFO:tensorflow:outputs = [[51 42 70 42 34 42 34 42 34 29 42 29 42 29 42 29 42 29 42 29 42 29 42 29 42 29 4 72 42 58 20]] (1.156 sec)
    INFO:tensorflow:labels = [[38 78 52 29 70 51 78 8 1 78 15 8 1 22 78 52 4 24 78 28 3 9 8 15 11 14 13 13 78 2 4 1 16]] (1.156 sec)
    INFO:tensorflow:label_error_rate = 0.93939394 (1.156 sec)
    INFO:tensorflow:global_step/sec: 0.888003
    INFO:tensorflow:outputs = [[51 42 70 42 34 42 34 42 34 29 42 29 42 29 42 29 42 29 42 29 42 29 42 29 42 29 4 65 42 58 20]] (1.126 sec)
    INFO:tensorflow:labels = [[38 78 52 29 70 51 78 8 1 78 15 8 1 22 78 52 4 24 78 28 3 9 8 15 11 14 13 13 78 2 4 1 16]] (1.126 sec)
    INFO:tensorflow:label_error_rate = 0.969697 (1.126 sec)
    INFO:tensorflow:global_step/sec: 0.866796
    INFO:tensorflow:outputs = [[51 42 70 42 34 42 34 42 34 29 42 29 42 29 42 29 42 29 42 29 42 29 42 29 42 29 4 65 42 58 20]] (1.154 sec)
    INFO:tensorflow:labels = [[38 78 52 29 70 51 78 8 1 78 15 8 1 22 78 52 4 24 78 28 3 9 8 15 11 14 13 13 78 2 4 1 16]] (1.154 sec)
    INFO:tensorflow:label_error_rate = 0.969697 (1.154 sec)
    INFO:tensorflow:global_step/sec: 0.88832
    INFO:tensorflow:outputs = [[51 42 70 42 34 42 34 42 34 29 42 29 42 29 42 29 42 29 42 29 42 29 42 29 42 29 4 65 42 58 20]] (1.126 sec)
    INFO:tensorflow:labels = [[38 78 52 29 70 51 78 8 1 78 15 8 1 22 78 52 4 24 78 28 3 9 8 15 11 14 13 13 78 2 4 1 16]] (1.126 sec)
    INFO:tensorflow:label_error_rate = 0.969697 (1.126 sec)

Any reason why this is happening? Here's a small reproducible example I've made https://github.com/selcouthlyBlue/CNN-LSTM-CTC-HIGH-LOSS
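
For context on the `label_error_rate` values in the logs above: the label sequence has 33 entries, and 0.93939394 and 0.969697 are what 31/33 and 32/33 round to, which is consistent with an edit distance normalized by the label length. The sketch below assumes that definition (it is not taken from the training code), and the helper names `edit_distance` and `label_error_rate` are made up for illustration.

```python
def edit_distance(hyp, ref):
    """Levenshtein distance between two label sequences."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i]
        for j, r in enumerate(ref, start=1):
            cost = 0 if h == r else 1
            curr.append(min(prev[j] + 1,          # drop a predicted label
                            curr[j - 1] + 1,      # add a missing label
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]

def label_error_rate(hyp, ref):
    # Assumed definition: edit distance divided by the reference length.
    return edit_distance(hyp, ref) / len(ref)

# Toy sequences, unrelated to the logged data.
print(label_error_rate([1, 2, 3], [1, 3]))

# Arithmetic check against the logged values for a 33-label reference.
print(round(31 / 33, 8), round(32 / 33, 6))
```

Under this definition, a rate that stays near 1.0 means almost every label in the reference needs an edit, even while the CTC loss is decreasing.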

Update

When I changed the conversion from this:

    outputs = tf.reshape(inputs, [-1, num_outputs])
    logits = slim.fully_connected(outputs, num_classes)
    logits = tf.reshape(logits, [num_steps, -1, num_classes])

To this:

    outputs = tf.reshape(inputs, [-1, num_outputs])
    logits = slim.fully_connected(outputs, num_classes)
    logits = tf.reshape(logits, [-1, num_steps, num_classes])
    logits = tf.transpose(logits, (1, 0, 2))

The performance somehow improved:

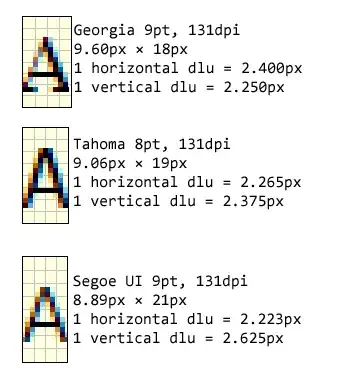

(Removed the mdrnn layers here)

(2nd RUN)

(Added back the mdrnn layers)

But the loss is still not going down to zero (or getting close to it), and the label error rate is still fluctuating.
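
A minimal NumPy sketch of why the two conversions can give different results, assuming `inputs` is batch-major (`[batch, num_steps, num_outputs]`, the default output layout of `tf.nn.bidirectional_dynamic_rnn`) and that the CTC loss expects time-major logits. The toy sizes and the `10 * b + t` encoding are made up for illustration.

```python
import numpy as np

batch_size, num_steps, num_classes = 2, 3, 1

# Batch-major activations: entry [b, t, 0] encodes "sample b, step t" as 10*b + t.
batch_major = np.array([[[10 * b + t] for t in range(num_steps)]
                        for b in range(batch_size)])

# Flattening for the fully connected layer keeps rows in batch-major order.
flat = batch_major.reshape(-1, num_classes)

# First version: reinterpret the flat rows directly as [num_steps, batch, classes].
wrong = flat.reshape(num_steps, -1, num_classes)

# Second version: restore [batch, num_steps, classes], then swap the first two axes.
right = flat.reshape(-1, num_steps, num_classes).transpose(1, 0, 2)

# The time-major layout that actually corresponds to the original data.
expected = batch_major.transpose(1, 0, 2)

print("direct reshape is time-major:", np.array_equal(wrong, expected))
print("reshape + transpose is time-major:", np.array_equal(right, expected))
```

In the direct reshape, time steps of one sample end up in the slots of another sample, so the loss is computed on mixed-up sequences; the reshape followed by a transpose keeps each sample's steps together.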

After changing the optimizer from Adam to RMSProp with a decay rate of 0.9, the loss now converges!

But the label error rate still fluctuates, although I would expect it to go down now that the loss is converging.

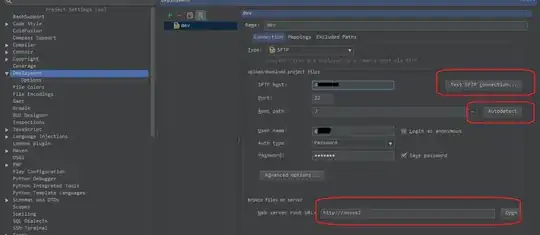

More updates

I tried it on the real dataset I have, and it did improve!

Before

After

But the label error rate is still increasing, for a reason I have not yet identified.