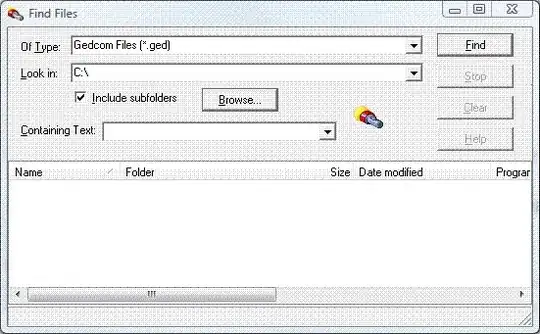

I have a FindFile routine in my program which will list files, but if the "Containing Text" field is filled in, then it should only list files containing that text.

If the "Containing Text" field is entered, then I search each file found for the text. My current method of doing that is:

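    var
      FileContents: TStringList;
    begin
      FileContents := TStringList.Create;
      try
        FileContents.LoadFromFile(Filepath);
        // Pos returns 0 when TextToFind does not occur in the text
        Found := Pos(TextToFind, FileContents.Text) > 0;
      finally
        FileContents.Free;
      end;
    end;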

The above code is simple, and it generally works okay. But it has two problems:

1. It fails for very large files (e.g. 300 MB).

2. I feel it could be faster. It isn't bad, but why wait 10 minutes searching through 1000 files if there might be a simple way to speed it up a bit?

I need this to work for Delphi 2009 and to search text files that may or may not be Unicode. It only needs to work for text files.

So how can I speed this search up and also make it work for very large files?

Bonus: I would also like to allow an "ignore case" option. That's a tougher one to make efficient. Any ideas?

Solution:

Well, mghie pointed out my earlier question, "How Can I Efficiently Read The First Few Lines of Many Files in Delphi", and as I answered, that one was different and didn't provide the solution.

But he got me thinking that I had done this before, and I had. I built a block-reading routine for large files that breaks them into 32 MB blocks. I use it to read my program's input file, which can be huge. The routine works well and is fast. So step one is to do the same for the files I am searching through.
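A minimal sketch of what such a block-based search could look like, not the actual routine mentioned above. The names `BufferContains` and `FileContainsBytes` are hypothetical, and the sketch assumes the search text has already been converted to bytes in the same encoding as the file. It carries the last `Length(Pattern) - 1` bytes of each block over to the next one so that a match straddling a block boundary is not missed.

```pascal
uses
  SysUtils, Classes;

// Hypothetical helper: True if Pattern occurs within the first Len bytes of Buf.
function BufferContains(const Buf: TBytes; Len: Integer;
  const Pattern: TBytes): Boolean;
var
  I, PatLen: Integer;
begin
  Result := False;
  PatLen := Length(Pattern);
  if PatLen = 0 then
    Exit;
  // The loop body is skipped entirely when Len < PatLen.
  for I := 0 to Len - PatLen do
    if (Buf[I] = Pattern[0]) and CompareMem(@Buf[I], @Pattern[0], PatLen) then
    begin
      Result := True;
      Exit;
    end;
end;

// Hypothetical helper: scans FileName in blocks of BlockSize bytes, so the
// whole file is never held in memory at once.
function FileContainsBytes(const FileName: string; const Pattern: TBytes;
  BlockSize: Integer): Boolean;
var
  Stream: TFileStream;
  Buf: TBytes;
  Carry, BytesRead, Total: Integer;
begin
  Result := False;
  if Length(Pattern) = 0 then
    Exit;
  if BlockSize < Length(Pattern) then
    BlockSize := Length(Pattern);
  Stream := TFileStream.Create(FileName, fmOpenRead or fmShareDenyWrite);
  try
    // Room for one block plus the bytes carried over from the previous block.
    SetLength(Buf, BlockSize + Length(Pattern) - 1);
    Carry := 0;
    repeat
      BytesRead := Stream.Read(Buf[Carry], BlockSize);
      Total := Carry + BytesRead;
      if BufferContains(Buf, Total, Pattern) then
      begin
        Result := True;
        Exit; // the finally block still frees the stream
      end;
      // Keep the tail so a match spanning two blocks is still found.
      Carry := Length(Pattern) - 1;
      if Carry > Total then
        Carry := Total;
      if Carry > 0 then
        Move(Buf[Total - Carry], Buf[0], Carry);
    until BytesRead = 0;
  finally
    Stream.Free;
  end;
end;
```

A call might look like `Found := FileContainsBytes(Filepath, TEncoding.UTF8.GetBytes(TextToFind), 32 * 1024 * 1024);`. For a UTF-16 file, `TEncoding.Unicode.GetBytes` would be the counterpart; detecting which encoding a given file uses is left out of this sketch.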

So now the question was how to efficiently search within those blocks. Well, I did have a previous question on that topic, "Is There An Efficient Whole Word Search Function in Delphi?", and RRUZ pointed out the SearchBuf routine to me.

That solves the "bonus" as well, because SearchBuf takes a set of options that includes whole-word search (soWholeWord, the answer to that question) and case-sensitive or case-insensitive matching (soMatchCase, the answer to the bonus).
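As a rough illustration of how SearchBuf from the StrUtils unit might be called on one block that has already been decoded into a string: `BlockContainsText` and its parameters are hypothetical names made up for this sketch. Leaving `soMatchCase` out of the option set is what makes the search ignore case.

```pascal
uses
  SysUtils, StrUtils;

// Hypothetical wrapper: True if TextToFind occurs somewhere in Block.
function BlockContainsText(const Block, TextToFind: string;
  IgnoreCase, WholeWord: Boolean): Boolean;
var
  Options: TStringSearchOptions;
begin
  Options := [soDown]; // search forward from the start position
  if not IgnoreCase then
    Include(Options, soMatchCase);
  if WholeWord then
    Include(Options, soWholeWord);
  // SelStart = 0 and SelLength = 0 start the search at the first character.
  // SearchBuf returns nil when nothing is found.
  Result := (Block <> '') and (TextToFind <> '') and
    (SearchBuf(PChar(Block), Length(Block), 0, 0, TextToFind, Options) <> nil);
end;
```

For example, `Found := BlockContainsText(BlockText, TextToFind, True, False);` would do a case-insensitive, non-whole-word search, where `BlockText` stands for one decoded block. When the file is processed block by block, consecutive blocks still need to overlap by at least `Length(TextToFind) - 1` characters so a match on a boundary is not lost.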

So I'm off and running. Thanks once again, SO community.