If you want to use the model in my code (the link you passed), your data must have the shape (1 sequence, total_time_steps, 5 features).

Important: I don't know if this is the best way or the best model to do this, but this model predicts 7 time steps ahead of the input (time_shift=7).
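To make the shape and the shift concrete, here is a small NumPy sketch on a made-up array. The 10 steps, 2 features and `time_shift=3` (instead of 7) are assumptions chosen only for readability. The targets are the inputs moved `time_shift` steps forward, and both get a leading axis of size 1 for the single sequence.

```python
import numpy as np

# Toy stand-in for the scaled data: 10 time steps, 2 features (the real data has 5).
data = np.arange(20, dtype=float).reshape(10, 2)
time_shift = 3  # smaller than 7 so the toy example stays readable

# Inputs: every step except the last `time_shift`.
# Targets: the same sequence moved `time_shift` steps forward.
x = data[:-time_shift, :]
y = data[time_shift:, :]

# Recurrent layers expect (samples, time_steps, features); here there is 1 sequence.
x = x.reshape(1, x.shape[0], x.shape[1])
y = y.reshape(1, y.shape[0], y.shape[1])

print(x.shape, y.shape)  # (1, 7, 2) (1, 7, 2)
# The target at step t is the input from step t + time_shift.
print(y[0, 0], data[time_shift])
```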

Data and initial vars

```python
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

fi = 'pollution.csv'
raw = pd.read_csv(fi, delimiter=',')
raw = raw.drop('Dates', axis=1)
print("raw shape:")
print(raw.shape)  # (1789, 5) - 1789 time steps / 5 features

scaler = MinMaxScaler(feature_range=(-1, 1))
raw = scaler.fit_transform(raw)  # now a NumPy array of shape (n_rows, n_feats)

time_shift = 7  # number of steps we are predicting ahead
n_rows = raw.shape[0]  # number of time steps in our sequence
n_feats = raw.shape[1]
train_size = int(n_rows * 0.8)

# I couldn't understand how "ds" worked, so I simply removed it; the code below doesn't need it

# splitting the sequence in time order
train_data = raw[:train_size, :]  # first train_size steps, all 5 features
test_data = raw[train_size:, :]   # remaining steps (when predicting, the beginning of the data adjusts the states)

# train_data = shuffle(train_data)  !!! we cannot shuffle time steps: we lose the sequence doing this

x_train = train_data[:-time_shift, :]  # the entire train data, except the last time_shift steps
x_test = test_data[:-time_shift, :]    # the entire test data, except the last time_shift steps
x_predict = raw[:-time_shift, :]       # the entire raw data, except the last time_shift steps

y_train = train_data[time_shift:, :]   # the same data, shifted time_shift steps ahead
y_test = test_data[time_shift:, :]
y_predict_true = raw[time_shift:, :]

# reshape to (1, steps, 5): 1 sequence, many steps, 5 features
x_train = x_train.reshape(1, x_train.shape[0], x_train.shape[1])
y_train = y_train.reshape(1, y_train.shape[0], y_train.shape[1])
x_test = x_test.reshape(1, x_test.shape[0], x_test.shape[1])
y_test = y_test.reshape(1, y_test.shape[0], y_test.shape[1])
x_predict = x_predict.reshape(1, x_predict.shape[0], x_predict.shape[1])
y_predict_true = y_predict_true.reshape(1, y_predict_true.shape[0], y_predict_true.shape[1])

print("\nx_train:")
print(x_train.shape)
print("y_train")
print(y_train.shape)
print("x_test")
print(x_test.shape)
print("y_test")
print(y_test.shape)
```
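As a sanity check on the slicing above, this sketch reruns it on a zero-filled placeholder with the (1789, 5) shape printed earlier (the zeros are an assumption; only the shape matters). Each split loses `time_shift` steps, because its last inputs have no target inside the split.

```python
import numpy as np

# Hypothetical placeholder with the same shape as the scaled pollution data.
raw = np.zeros((1789, 5))
time_shift = 7
n_rows, n_feats = raw.shape
train_size = int(n_rows * 0.8)  # 1431

train_data, test_data = raw[:train_size], raw[train_size:]
shapes = {
    "x_train": train_data[:-time_shift].shape,
    "y_train": train_data[time_shift:].shape,
    "x_test": test_data[:-time_shift].shape,
    "y_test": test_data[time_shift:].shape,
    "x_predict": raw[:-time_shift].shape,
}
# Print the shapes after the (1, steps, features) reshape.
for name, (steps, feats) in shapes.items():
    print(name, (1, steps, feats))
```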

Model

Your model wasn't powerful enough for this task, so I tried a bigger one (this one, on the other hand, is too powerful).

```python
from keras.models import Sequential
from keras.layers import LSTM

model = Sequential()
model.add(LSTM(64, return_sequences=True, input_shape=(None, x_train.shape[2])))  # None: any number of time steps
model.add(LSTM(128, return_sequences=True))
model.add(LSTM(256, return_sequences=True))
model.add(LSTM(128, return_sequences=True))
model.add(LSTM(64, return_sequences=True))
model.add(LSTM(n_feats, return_sequences=True))  # one output per feature at every step
model.compile(loss='mse', optimizer='adam')
```
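The last LSTM layer uses Keras's default `tanh` activation, so every prediction lies in (-1, 1). That matches `feature_range=(-1, 1)` in the scaler, and it also means a prediction, once inverse-transformed, can never leave the min/max range the scaler saw. The sketch below reimplements min-max scaling and its inverse in NumPy on hypothetical data to show what `fit_transform` and `inverse_transform` compute; it is an illustration, not sklearn's code (for example, it assumes no column is constant).

```python
import numpy as np

def minmax_fit(data, lo=-1.0, hi=1.0):
    """Learn per-feature (column) min and max, like MinMaxScaler.fit."""
    return data.min(axis=0), data.max(axis=0), lo, hi

def minmax_transform(data, params):
    """Map each column linearly from [min, max] to [lo, hi]."""
    dmin, dmax, lo, hi = params
    return lo + (data - dmin) * (hi - lo) / (dmax - dmin)  # assumes dmax > dmin

def minmax_inverse(scaled, params):
    """Undo minmax_transform (what inverse_transform does before plotting)."""
    dmin, dmax, lo, hi = params
    return dmin + (scaled - lo) * (dmax - dmin) / (hi - lo)

# Hypothetical data: 3 time steps, 2 features.
data = np.array([[10.0, 200.0], [20.0, 300.0], [30.0, 250.0]])
params = minmax_fit(data)
scaled = minmax_transform(data, params)
print(scaled)

# Any value in (-1, 1), such as a tanh output, maps back inside [min, max].
restored = minmax_inverse(np.tanh(np.array([[-3.0, 3.0]])), params)
print(restored)
```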

Fitting

Notice that I had to train for 2000+ epochs for the model to get good results. The call below runs 1000 epochs; calling `fit` again continues training from the current weights.

I added the validation data so we can compare the loss on the training and test data.

```python
# the model learns from the ENTIRE training sequence at once (a single sample),
# which lets it adjust its states before reaching the end
model.fit(x_train, y_train, epochs=1000, batch_size=1, verbose=2, validation_data=(x_test, y_test))
```

Predicting

Important: to predict the end of a sequence from its beginning, the model must see the beginning so it can adjust its internal states. That's why I'm predicting the entire data (x_predict), not only the test data.
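Since the prediction covers the whole sequence, the test part has to be located afterwards. In the full prediction, index `j` holds the target for step `j + time_shift`, so the test targets start at index `train_size`. This sketch checks that alignment with the sizes from this answer, on a stand-in array whose values are their own time indices (an assumption made only so slices are easy to read). It also computes where the train/test limit falls inside a zoomed view of the last `2 * test_size` predicted steps.

```python
import numpy as np

# Stand-in for the scaled data: 1789 steps (as in the answer), one feature whose
# value is its own time index, so every slice can be checked by eye.
raw = np.arange(1789, dtype=float).reshape(-1, 1)
time_shift = 7
n_rows = raw.shape[0]
train_size = int(n_rows * 0.8)   # 1431
test_size = n_rows - train_size  # 358

y_test = raw[train_size:][time_shift:]  # targets used for val_loss
y_predict_true = raw[time_shift:]       # targets for the whole-sequence prediction

# Index j of the full prediction holds the target for step j + time_shift,
# so the test targets begin at index train_size.
test_part = y_predict_true[train_size:]
print(np.array_equal(test_part, y_test))  # True

# A zoomed view of the last 2 * test_size predicted steps puts the
# train/test limit test_size + time_shift steps from its start.
window_start = len(y_predict_true) - 2 * test_size  # 1066
limit_in_window = train_size - window_start
print(test_part.shape, limit_in_window)  # (351, 1) 365
```

With the real 3-D arrays, the same slice is `y_predict_model[:, train_size:]`, which has the same shape as `y_test`.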

```python
import matplotlib.pyplot as plt

y_predict_model = model.predict(x_predict)
print("\ny_predict_true:")
print(y_predict_true.shape)
print("y_predict_model: ")
print(y_predict_model.shape)

def plot(true, predicted, divider):
    # undo the (-1, 1) scaling so the plot is in the original units
    predict_plot = scaler.inverse_transform(predicted[0])
    true_plot = scaler.inverse_transform(true[0])
    # plot only the first feature
    predict_plot = predict_plot[:, 0]
    true_plot = true_plot[:, 0]

    plt.figure(figsize=(16, 6))
    plt.plot(true_plot, label='True', linewidth=5)
    plt.plot(predict_plot, label='Predict', color='y')

    if divider > 0:
        # vertical line marking the train/test limit
        maxVal = max(true_plot.max(), predict_plot.max())
        minVal = min(true_plot.min(), predict_plot.min())
        plt.plot([divider, divider], [minVal, maxVal], label='train/test limit', color='k')

    plt.legend()
    plt.show()

test_size = n_rows - train_size
print("test length: " + str(test_size))

# whole sequence: the first test target is at index train_size
plot(y_predict_true, y_predict_model, train_size)
# last 2*test_size steps: the train/test limit is test_size + time_shift steps into this window
plot(y_predict_true[:, -2*test_size:], y_predict_model[:, -2*test_size:], test_size + time_shift)
```

Showing entire data

Showing the end portion of it for more detail

Please notice that this model is overfitting: it learns the training data well but gets bad results on the test data.

To solve this, you must experiment with smaller models, dropout layers and other techniques that prevent overfitting.
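For reference, a dropout layer randomly zeroes a fraction `rate` of its inputs during training and, in Keras's `Dropout`, scales the survivors by `1 / (1 - rate)` so the expected value is unchanged; at inference it passes inputs through untouched. This NumPy sketch of that "inverted dropout" rule only shows the mechanism, with a hypothetical helper `inverted_dropout` and made-up activations; in the model itself you would add `keras.layers.Dropout` layers or use the `dropout`/`recurrent_dropout` arguments of `LSTM`.

```python
import numpy as np

def inverted_dropout(x, rate, rng, training=True):
    """Zero each element with probability `rate`; scale survivors by 1/(1-rate)."""
    if not training or rate == 0.0:
        return x  # inference: no dropout
    keep = rng.random(x.shape) >= rate
    return np.where(keep, x / (1.0 - rate), 0.0)

rng = np.random.default_rng(0)
acts = np.ones((1, 1000, 64))  # hypothetical LSTM outputs: 1 sequence, 1000 steps, 64 units
dropped = inverted_dropout(acts, rate=0.2, rng=rng)

zero_fraction = float((dropped == 0).mean())  # close to 0.2
mean_after = float(dropped.mean())            # close to 1.0, the mean before dropout
print(round(zero_fraction, 2), round(mean_after, 2))
```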

Notice also that this data very probably contains A LOT of random factors, meaning the models will not be able to learn anything useful from them. As you make smaller models to avoid overfitting, you may also find that they make worse predictions on the training data.

Finding the perfect model is not an easy task; it's an open question and you must experiment. Maybe LSTM models simply aren't the solution, or maybe your data is simply not predictable. There isn't a definitive answer for this.

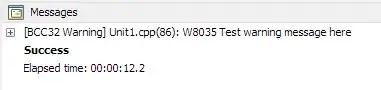

How to know the model is good

With validation data passed to training, you can compare the loss on the training and test data:

```
Train on 1 samples, validate on 1 samples
Epoch 1/1000
9s - loss: 0.4040 - val_loss: 0.3348
Epoch 2/1000
4s - loss: 0.3332 - val_loss: 0.2651
Epoch 3/1000
4s - loss: 0.2656 - val_loss: 0.2035
Epoch 4/1000
4s - loss: 0.2061 - val_loss: 0.1696
Epoch 5/1000
4s - loss: 0.1761 - val_loss: 0.1601
Epoch 6/1000
4s - loss: 0.1697 - val_loss: 0.1476
Epoch 7/1000
4s - loss: 0.1536 - val_loss: 0.1287
Epoch 8/1000
.....
```

Both losses should go down together. When the test loss (`val_loss`) stops going down but the training loss (`loss`) keeps improving, your model is starting to overfit.
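This rule is what early stopping automates. Keras provides it as the `keras.callbacks.EarlyStopping` callback (for example with `monitor='val_loss'`, a `patience` value and `restore_best_weights=True`, passed to `fit` via `callbacks=[...]`). The plain-Python sketch below, built around a hypothetical helper `best_epoch`, shows the same logic on a list of `val_loss` values: remember the best epoch so far and stop once it hasn't improved for `patience` epochs.

```python
def best_epoch(val_losses, patience=10):
    """Return the 1-based epoch with the lowest val_loss, giving up the search
    once val_loss has not improved for `patience` consecutive epochs."""
    best, best_i, waited = float("inf"), 0, 0
    for i, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, best_i, waited = loss, i, 0
        else:
            waited += 1
            if waited >= patience:
                break  # no improvement for `patience` epochs: stop
    return best_i

# val_loss values from the first 7 epochs of the log above: still improving.
logged = [0.3348, 0.2651, 0.2035, 0.1696, 0.1601, 0.1476, 0.1287]
print(best_epoch(logged))  # 7

# Hypothetical curve that bottoms out at epoch 3 and then rises (overfitting).
rising = [0.30, 0.20, 0.15, 0.16, 0.18, 0.21, 0.25]
print(best_epoch(rising, patience=3))  # 3
```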

Trying another model

The best I could do (though I didn't really try much) was this model:

```python
model = Sequential()
model.add(LSTM(64, return_sequences=True, input_shape=(None, x_train.shape[2])))
model.add(LSTM(128, return_sequences=True))
model.add(LSTM(128, return_sequences=True))
model.add(LSTM(64, return_sequences=True))
model.add(LSTM(n_feats, return_sequences=True))
model.compile(loss='mse', optimizer='adam')
```

It reached its best point when the losses were about:

```
loss: 0.0389 - val_loss: 0.0437
```

After this point, the validation loss started going up, so training beyond it is useless.

Result:

This shows that all this model could learn was the very general behaviour, such as zones with higher values.

The high-frequency variation, however, was either too random or the model wasn't good enough to capture it.