I have the following data points that I would like to fit a curve to:

    import matplotlib.pyplot as plt
    import numpy as np
    from scipy.optimize import curve_fit

    t = np.array([15474.6, 15475.6, 15476.6, 15477.6, 15478.6, 15479.6, 15480.6,
                  15481.6, 15482.6, 15483.6, 15484.6, 15485.6, 15486.6, 15487.6,
                  15488.6, 15489.6, 15490.6, 15491.6, 15492.6, 15493.6, 15494.6,
                  15495.6, 15496.6, 15497.6, 15498.6, 15499.6, 15500.6, 15501.6,
                  15502.6, 15503.6, 15504.6, 15505.6, 15506.6, 15507.6, 15508.6,
                  15509.6, 15510.6, 15511.6, 15512.6, 15513.6])

    v = np.array([4.082, 4.133, 4.136, 4.138, 4.139, 4.14, 4.141, 4.142, 4.143,
                  4.144, 4.144, 4.145, 4.145, 4.147, 4.146, 4.147, 4.148, 4.148,
                  4.149, 4.149, 4.149, 4.15, 4.15, 4.15, 4.151, 4.151, 4.152,
                  4.152, 4.152, 4.153, 4.153, 4.153, 4.153, 4.154, 4.154, 4.154,
                  4.154, 4.154, 4.155, 4.155])

The exponential function that I want to fit to the data is:

$$v(t) = a - b\,e^{-\alpha t}$$

The Python function for this formula and the corresponding curve fit are shown below:

    def func(t, a, b, alpha):
        return a - b * np.exp(-alpha * t)

    # scale vector to start at zero otherwise exponent is too large
    t_scale = t - t[0]

    # initial guess for curve fit coefficients
    a0 = v[-1]
    b0 = v[0]
    alpha0 = 1/t_scale[-1]

    # coefficients and curve fit for curve
    popt4, pcov4 = curve_fit(func, t_scale, v, p0=(a0, b0, alpha0))

    a, b, alpha = popt4
    v_fit = func(t_scale, a, b, alpha)

    ss_res = np.sum((v - v_fit) ** 2)       # residual sum of squares
    ss_tot = np.sum((v - np.mean(v)) ** 2)  # total sum of squares
    r2 = 1 - (ss_res / ss_tot)              # R squared fit, R^2

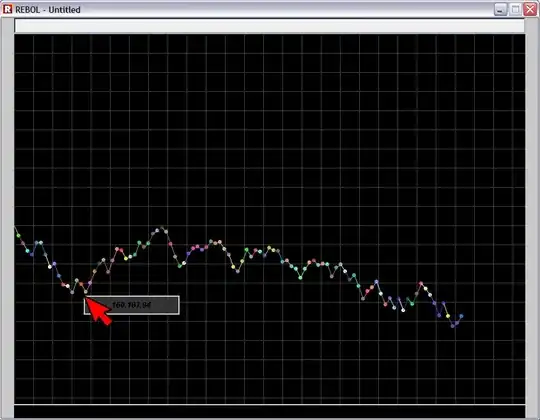

The data compared to the curve fit is plotted below. The parameters and R squared value are also provided.

a0 = 4.1550 b0 = 4.0820 alpha0 = 0.0256

a = 4.1490 b = 0.0645 alpha = 0.9246

R² = 0.8473

Is it possible to get a better fit to the data using the approach outlined above, or do I need to use a different form of the exponential equation?

I'm also not sure what to use for the initial values (a0, b0, alpha0). In the example, I chose points from the data but that may not be the best method. Any suggestions on what to use for the initial guess for the curve fit coefficients?
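One alternative I could try for the starting values is to read them off the model itself rather than taking raw data points. For `a - b * exp(-alpha * t)` with `t` starting at zero, the curve begins at `a - b` and levels off at `a`, and after a time `1/alpha` it has covered about 63% of the rise. The sketch below is only an illustration under those assumptions: `initial_guess` is a hypothetical helper name, and it assumes the data rise towards a plateau and that the time vector has already been shifted to start at zero.

```python
import numpy as np

def initial_guess(t, v):
    """Hypothetical helper: starting values for v(t) = a - b*exp(-alpha*t).

    Assumes t[0] == 0, at least two samples, and data that rise
    towards a plateau (so that v[-1] > v[0]).
    """
    t = np.asarray(t, dtype=float)
    v = np.asarray(v, dtype=float)

    a0 = v[-1]        # plateau: v -> a as t -> infinity
    b0 = a0 - v[0]    # start value: v(0) = a - b

    # At t = 1/alpha the model equals a - b/e, i.e. about 63% of the rise.
    target = a0 - b0 * np.exp(-1.0)
    idx = np.argmax(v >= target)      # first sample at or above that level

    # Guard against idx == 0, which would give a zero time constant.
    tau = max(t[idx], t[1] - t[0])
    alpha0 = 1.0 / tau
    return a0, b0, alpha0
```

With the arrays above this would be called as `a0, b0, alpha0 = initial_guess(t_scale, v)` and the result passed to `curve_fit` through `p0`. I have not confirmed that this changes the outcome; the optimizer may well converge to the same parameters, in which case the limitation would be the single-exponential model rather than the starting point.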