I am working with a decision tree algorithm on a binary classification problem, and the goal is to minimise the false positives (i.e. maximise the positive predictive value) of the classification, because the cost of the diagnostic tool is very high.

Is there a way to introduce a weight into the Gini / entropy splitting criteria to penalise false positive misclassifications?

Here for example, the modified Gini index is given as:

Therefore I am wondering if there is any way to implement it in scikit-learn?
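To make the question concrete, here is a minimal sketch of one way a class weight could enter the Gini impurity: each class count in a node is scaled by its weight before the class proportions are computed. This is an assumption for illustration, not necessarily the modified index referred to above; the function name `weighted_gini` and the example counts and weights are hypothetical.

```python
def weighted_gini(counts, class_weight):
    """Gini impurity of a node after scaling each class count by its weight.

    counts       -- dict mapping class label -> number of samples in the node
    class_weight -- dict mapping class label -> weight (missing labels get 1.0)
    """
    weighted = {c: n * class_weight.get(c, 1.0) for c, n in counts.items()}
    total = sum(weighted.values())
    if total == 0:
        return 0.0
    # 1 - sum of squared (weighted) class proportions
    return 1.0 - sum((w / total) ** 2 for w in weighted.values())


# Hypothetical node: 50 negatives (class 0) and 5 positives (class 1)
node = {0: 50, 1: 5}
plain = weighted_gini(node, {})            # unweighted Gini
reweighted = weighted_gini(node, {1: 10})  # class 1 counted ten times
print(plain, reweighted)
```

With these numbers the reweighted node looks perfectly balanced to the criterion, which is why a weight changes where the tree chooses to split. As far as I understand, `class_weight` in scikit-learn acts in a similar way, as a per-sample weight on the class totals, but that is worth checking against the documentation.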

EDIT

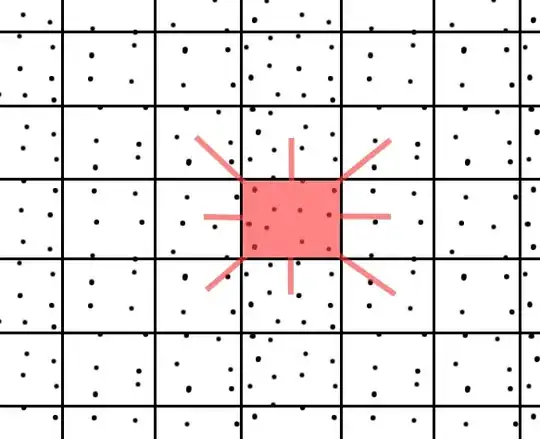

Playing with class_weight produced the following results:

from sklearn import datasets as dts

from sklearn import tree

iris_data = dts.load_iris()

X, y = iris_data.data, iris_data.target

# take only classes 1 and 2 due to less separability

X = X[y>0]

y = y[y>0]

y = y - 1 # make binary labels

# define the decision tree classifier with at most two levels and no class weighting

dt = tree.DecisionTreeClassifier(max_depth=2, class_weight=None)

# fit the model, no train/test for simplicity

dt.fit(X[:55,:2], y[:55])

Plotting the decision boundary and the tree (blue points are the positive class, 1):

While overweighting the minority (or more valuable) class:

dt_100 = tree.DecisionTreeClassifier(max_depth=2, class_weight={1:100})
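To compare the settings numerically rather than only by eye, a rough sketch like the following could be used. It refits the tree under each `class_weight` and reports false positives and precision on the same 55 samples (no train/test split, as above). The third setting, `{0: 100}`, is my own addition and an assumption: it upweights the negative class, which is presumably the direction that penalises false positives. The names `settings` and `results` are hypothetical.

```python
from sklearn import datasets, tree
from sklearn.metrics import confusion_matrix, precision_score

iris = datasets.load_iris()
X, y = iris.data, iris.target

# keep only classes 1 and 2 and relabel them as 0 / 1
X, y = X[y > 0], y[y > 0] - 1

# same subset as above: first 55 samples, first two features
X_sub, y_sub = X[:55, :2], y[:55]

settings = {
    "none": None,          # no class weighting
    "pos_x100": {1: 100},  # positive (minority) class upweighted
    "neg_x100": {0: 100},  # negative class upweighted (assumption, see text)
}

results = {}
for name, cw in settings.items():
    clf = tree.DecisionTreeClassifier(max_depth=2, class_weight=cw, random_state=0)
    clf.fit(X_sub, y_sub)
    pred = clf.predict(X_sub)
    # rows are true labels, columns are predictions, in the order [0, 1]
    tn, fp, fn, tp = confusion_matrix(y_sub, pred, labels=[0, 1]).ravel()
    results[name] = {
        "fp": int(fp),
        "tp": int(tp),
        "precision": float(precision_score(y_sub, pred, zero_division=0)),
    }
    print(name, results[name])
```

Because the scores are computed on the training samples they are optimistic; the point is only to see in which direction each weighting moves the false positive count.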