The default Java GC configuration is not optimal for running Java applications in a container, as explained in detail by Jörg Schad from Mesosphere. Why Mesosphere doesn't apply the suggested configuration to its own container orchestrator remains a mystery to me.

By default Java 8 uses ParallelGC (-XX:+UseParallelGC).

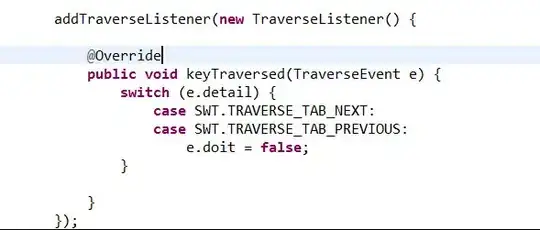
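To see which collector a JVM actually ended up with, one option is to ask the standard `java.lang.management` API. This is only a minimal sketch: the class name `ActiveCollectors` is made up for illustration and nothing here is part of Marathon.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration; not part of Marathon.
class ActiveCollectors {

    // Returns the names of the garbage collectors registered in the running JVM.
    static List<String> names() {
        List<String> names = new ArrayList<>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        // On HotSpot the parallel collectors usually report names starting with "PS",
        // while CMS typically shows up as "ParNew" and "ConcurrentMarkSweep".
        for (String name : names()) {
            System.out.println(name);
        }
    }
}
```

Running it once with the default flags and once with the flags suggested in this post should make the difference visible. The exact names depend on the JVM vendor and version.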

Suggested Java flags:

- `-Xmx1536m`: Maximum heap size. The right value depends on your cluster size (the number of tasks running via Marathon).
- `-XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled`: Response time is critical for a cluster orchestrator, so a concurrent collector seems to be the best fit. As the documentation says:

  > The Concurrent Mark Sweep (CMS) collector is designed for applications that prefer shorter garbage collection pauses and that can afford to share processor resources with the garbage collector while the application is running.

- `-XX:+UseParNewGC`: Enables the parallel young generation collector. It is already enabled automatically with CMS, so this flag only makes the choice explicit.
- `-XX:ParallelGCThreads=2`: By default the number of GC threads is derived from the number of logical processors available on the machine. This makes GC inefficient, especially when the physical machine has 12 (or even more) cores and you're limiting CPUs in Mesos. The value should equal the number of cpus assigned to Marathon.
- `-XX:+UseCMSInitiatingOccupancyOnly`: Prevents the JVM from using its set of heuristic rules to trigger garbage collection. Those heuristics make GC less predictable and usually tend to delay collection until the old generation is almost full. Initiating GC in advance lets the collection complete before the old generation fills up and thus avoids a Full GC (i.e. a stop-the-world pause).
- `-XX:CMSInitiatingOccupancyFraction=80`: Tells the JVM at what old generation occupancy (in percent) CMS should be triggered. Basically, it reserves a buffer in the heap that can keep filling with data while CMS is working. 70-80 seems to be a reasonable value. If the value is too small, GC will be triggered too frequently; if it's too large, GC will be triggered too late.
- `-XX:MaxTenuringThreshold=1`: Limits how many young collections an object survives (being copied between survivor spaces) before it is promoted to the old generation pool. The Java 8 default for CMS is 6.
- `-XX:+UnlockExperimentalVMOptions -XX:+UseCGroupMemoryLimitForHeap`: The heap size is derived from the cgroup settings, which is useful if we don't set `-Xmx1536m`. Both flags are needed for Java 8 (which is what the mesosphere/marathon Docker image currently uses).
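The point about GC threads can be checked from inside the container. The sketch below compares the number of processors the JVM believes it has with the effective `ParallelGCThreads` value. It assumes a HotSpot JVM, because it relies on `com.sun.management.HotSpotDiagnosticMXBean`; the class name `GcThreadCheck` is hypothetical.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import com.sun.management.VMOption;
import java.lang.management.ManagementFactory;

// Hypothetical helper for illustration; assumes a HotSpot JVM.
class GcThreadCheck {

    // Number of processors the JVM thinks it can use.
    static int visibleProcessors() {
        return Runtime.getRuntime().availableProcessors();
    }

    // Looks up the effective ParallelGCThreads option of the running JVM.
    static VMOption parallelGcThreadsOption() {
        HotSpotDiagnosticMXBean hotspot =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        return hotspot.getVMOption("ParallelGCThreads");
    }

    // Effective ParallelGCThreads value as a number.
    static long parallelGcThreads() {
        return Long.parseLong(parallelGcThreadsOption().getValue());
    }

    public static void main(String[] args) {
        VMOption option = parallelGcThreadsOption();
        System.out.println("visible processors: " + visibleProcessors());
        // The origin tells whether the value was set on the command line or chosen by the JVM.
        System.out.println("ParallelGCThreads:  " + option.getValue()
                + " (origin: " + option.getOrigin() + ")");
    }
}
```

If the reported thread count is far above the `cpus` value given to the task, the JVM is probably sizing its GC thread pool from the host rather than from the container limit, which is the situation `-XX:ParallelGCThreads` is meant to correct.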

Here's the Marathon configuration we're currently using:

    "cpus": 2,
    "mem": 2304,
    "env": {
      "JVM_OPTS": "-Xms512m -Xmx1536m -XX:+PrintGCApplicationStoppedTime -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:+UseParNewGC -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=80 -XX:MaxGCPauseMillis=200 -XX:MaxTenuringThreshold=1 -XX:SurvivorRatio=90 -XX:TargetSurvivorRatio=9 -XX:ParallelGCThreads=2"
    },
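The numbers in this configuration are meant to line up: `ParallelGCThreads` matches `cpus`, and `-Xmx` leaves part of `mem` for everything outside the heap. A small sketch of that sanity check follows. The class `MarathonJvmOptsCheck` and its parsing rules are assumptions made for illustration (only the `m` suffix of `-Xmx` is handled), and the options string is shortened to the flags the check reads.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sanity check for illustration; not part of Marathon.
class MarathonJvmOptsCheck {

    // Extracts -Xmx in megabytes. Only the "m" suffix is handled; returns -1 if absent.
    static long maxHeapMb(String jvmOpts) {
        Matcher m = Pattern.compile("-Xmx(\\d+)m\\b").matcher(jvmOpts);
        return m.find() ? Long.parseLong(m.group(1)) : -1;
    }

    // Extracts -XX:ParallelGCThreads; returns -1 if the flag is absent.
    static int parallelGcThreads(String jvmOpts) {
        Matcher m = Pattern.compile("-XX:ParallelGCThreads=(\\d+)").matcher(jvmOpts);
        return m.find() ? Integer.parseInt(m.group(1)) : -1;
    }

    public static void main(String[] args) {
        // Values copied from the configuration above.
        int cpus = 2;
        long memMb = 2304;
        String jvmOpts = "-Xms512m -Xmx1536m -XX:+UseConcMarkSweepGC -XX:ParallelGCThreads=2";

        long heapMb = maxHeapMb(jvmOpts);
        // Memory left in the container for metaspace, thread stacks and other non-heap use.
        System.out.println("non-heap headroom (MB): " + (memMb - heapMb));
        System.out.println("GC threads match cpus: " + (parallelGcThreads(jvmOpts) == cpus));
    }
}
```

With the values above, the heap takes 1536 of the 2304 MB, which leaves 768 MB of headroom, and the GC thread count equals the CPU allocation.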

Here's Marathon's behavior on the same cluster after GC tuning: