I am going through the example below, which is a binary classification example. I want to check that my intuition is correct, because there is not much documentation for the lime package yet, as it is relatively new.

Output of the example

```r
library(xgboost)
library(dplyr)
library(caret)
library(insuranceData) # example dataset https://cran.r-project.org/web/packages/insuranceData/insuranceData.pdf
library(lime)          # Local Interpretable Model-Agnostic Explanations

set.seed(123)
data(dataCar)

mydb <- dataCar %>%
  select(clm, exposure, veh_value, veh_body, veh_age, gender, area, agecat)

label_var  <- "clm"
offset_var <- "exposure"

feature_vars <- mydb %>%
  select(-all_of(c(label_var, offset_var))) %>%
  colnames()

# Prepare data for xgboost: one-hot encoding of categorical (factor) data
myformula <- paste0("~", paste0(feature_vars, collapse = " + ")) %>% as.formula()
dummyFier <- caret::dummyVars(myformula, data = mydb, fullRank = TRUE)
dummyVars.df <- predict(dummyFier, newdata = mydb)
mydb_dummy <- cbind(mydb %>% select(all_of(c(label_var, offset_var))),
                    dummyVars.df)
rm(myformula, dummyFier, dummyVars.df)

feature_vars_dummy <- mydb_dummy %>%
  select(-all_of(c(label_var, offset_var))) %>%
  colnames()

xgbMatrix <- xgb.DMatrix(
  data    = mydb_dummy %>% select(all_of(feature_vars_dummy)) %>% as.matrix(),
  label   = mydb_dummy %>% pull(label_var),
  missing = NA)

# Model 2: this works
myParam2 <- list(max_depth   = 2,
                 eta         = 0.01,
                 gamma       = 0.001,
                 objective   = "binary:logistic",
                 eval_metric = "logloss")

booster2 <- xgb.train(
  params  = myParam2,
  data    = xgbMatrix,
  nrounds = 50)

explainer <- lime(mydb_dummy %>% select(all_of(feature_vars_dummy)),
                  model = booster2)

explanation <- explain(mydb_dummy %>% select(all_of(feature_vars_dummy)) %>% head(),
                       explainer,
                       n_labels = 2, # NOTE: I added this value; not sure if it should be 1 or 2 for binary classification.
                       n_features = 2)

plot_features(explanation)
```

The code above models insurance claims, which is a binary classification problem: claim or no claim.

Questions:

What does `n_labels` do? My own problem is a binary classification, so would `n_labels` correspond to the classes 0 and 1?
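For reference, the lime documentation says that for a classifier you pass either `labels` (the specific classes to explain) or `n_labels` (explain the top n most probable classes for each case). As far as I understand it, lime's wrapper for an xgboost `binary:logistic` booster turns the single predicted probability into two columns named "0" and "1", so `n_labels = 1` would explain whichever class the model finds most likely for each case and `n_labels = 2` would explain both. The base-R sketch below only mimics that selection rule with made-up probabilities; `top_labels()` is a hypothetical helper, not part of lime.

```r
# Hypothetical predicted probabilities of class 1 for three cases.
p <- c(0.20, 0.65, 0.10)

# Assumed layout (as I understand lime's xgboost wrapper for binary:logistic):
# column "0" holds 1 - p and column "1" holds p.
probs <- data.frame(`0` = 1 - p, `1` = p, check.names = FALSE)

# Hypothetical helper: for every row, return the n most probable class names.
top_labels <- function(probs, n_labels) {
  lapply(seq_len(nrow(probs)), function(i) {
    row <- unlist(probs[i, ])  # named numeric vector: c("0" = ..., "1" = ...)
    names(sort(row, decreasing = TRUE))[seq_len(n_labels)]
  })
}

one <- top_labels(probs, n_labels = 1)  # one label per case: the predicted class
two <- top_labels(probs, n_labels = 2)  # both labels, most probable first

print(unlist(one))  # expected: "0" "1" "0"
```

Under this reading, `n_labels = 1` shows one panel per case for the predicted class, while `n_labels = 2` shows a panel for each of 0 and 1.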

In the example here, the author talks about malignant and benign as the labels. However, when I run the code on my own classification problem (making sure that the data I pass to `plot_features(explanation)` contains both 0 and 1 observations), the labels do not match the true values of those observations.

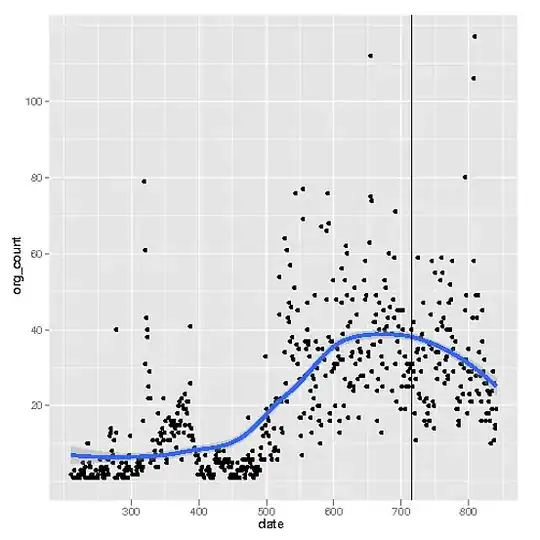

For my own problem I have the following:


I set `n_labels = 1`. (This plot comes from a different dataset than the code above, but it is still a classification problem.)

Here, case 2 shows a result of 1 under the label header. Can I assume this is the model's binary classification prediction? However, when I output the true values of the binary outcome I get 1 0 1 0 1 0. I read this as follows: the model classified case 1 as 0 when it was actually 1; case 2 was predicted as 1 and was actually 0; case 3 was predicted as 0 and was actually 1; case 4 was predicted as 0 and was actually 0; and so on. Is this reading incorrect? For reference, I used an xgboost model to make the predictions.
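If I understand lime correctly, with `n_labels = 1` the label in each panel is the class the model considers most probable (for a binary xgboost model, effectively predicted probability above 0.5), not the true class. Under that assumption, the mismatches described above are errors of the xgboost model rather than of lime. A small base-R check using the labels from my plot (case 2 labelled 1, the others 0) and the true outcomes listed above:

```r
# Labels shown in the lime plot with n_labels = 1: case 2 is "1", the rest "0".
lime_label <- c(0, 1, 0, 0, 0, 0)
# True outcomes for the same six cases.
truth <- c(1, 0, 1, 0, 1, 0)

# Assumption: with n_labels = 1 the label is the model's predicted class,
# so this comparison evaluates the model, not the explanation.
comparison <- data.frame(case = 1:6, predicted = lime_label, actual = truth,
                         correct = lime_label == truth)
print(comparison)

# Cross-tabulate predicted class against true class.
conf_mat <- table(predicted = lime_label, actual = truth)
print(conf_mat)

accuracy <- mean(lime_label == truth)  # share of cases where the model was right
```

On these six cases the model gets only cases 4 and 6 right, which matches the reading in the question.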

Secondly, all the 0 cases in the plot (cases 1, 3, 4, 5 and 6) have similar characteristics, whereas case 2 is different and has other variables/features that affect the model. (I only plot 4 variables from the model, and again I do not know whether they are chosen randomly or by some importance score.)
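On the feature question: the lime documentation describes the default `feature_select = "auto"` as forward selection when `n_features` is 6 or fewer and highest weights otherwise, so with 4 features the variables should be chosen per case rather than at random. Roughly, forward selection greedily adds whichever feature most improves the fit of the locally weighted surrogate model. The sketch below illustrates only that greedy idea, using plain weighted `lm()` on simulated data; it is a simplification, not lime's implementation, and `forward_select()` is a hypothetical helper.

```r
# Simplified greedy forward selection for a locally weighted linear surrogate.
# NOT lime's code; it only illustrates how features get picked by fit quality.
forward_select <- function(x, y, w, n_features) {
  chosen <- character(0)
  for (k in seq_len(n_features)) {
    candidates <- setdiff(colnames(x), chosen)
    # Weighted R^2 of the surrogate after adding each remaining candidate
    r2 <- sapply(candidates, function(f) {
      df <- data.frame(y = y, x[, c(chosen, f), drop = FALSE])
      summary(lm(y ~ ., data = df, weights = w))$r.squared
    })
    chosen <- c(chosen, candidates[which.max(r2)])
  }
  chosen
}

# Simulated data: the response depends strongly on "b" and weakly on "d".
set.seed(42)
n <- 200
x <- matrix(rnorm(n * 5), ncol = 5,
            dimnames = list(NULL, c("a", "b", "c", "d", "e")))
y <- 3 * x[, "b"] + x[, "d"] + rnorm(n, sd = 0.1)
w <- runif(n, 0.5, 1)  # stand-in for lime's proximity weights (assumption)

selected <- forward_select(x, y, w, n_features = 2)
print(selected)  # expected: "b" "d"
```

Because selection runs separately for each case, case 2 can end up with a different set of plotted features than cases 1, 3, 4, 5 and 6.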

Here is the kind of analysis I am trying to reproduce, quoted from "Understanding lime":

> In this overview it is clear to see how case 195 and 416 behave alike, while the third benign case (7) has an unusual large bare nuclei which are detracting from its status as benign without affecting the final prediction (indicating that the values of its other features are making up for this odd one). To no surprise it is clear that high values in the measurements are indicative of a malignant tumor.
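As I understand `plot_features()`, bars with a positive `feature_weight` are drawn as supporting the label and the others as contradicting it. If I read lime's output correctly, the surrogate's `model_prediction` for the explained case is its `model_intercept` plus the sum of the selected weights, since each selected feature is a 0/1 indicator that equals 1 for that case. That would be how one feature can detract from a label without changing the final call. A base-R sketch with invented numbers for a single case (none of these values come from a real model):

```r
# Invented explanation rows for ONE case (illustration only, not real lime output).
expl <- data.frame(
  feature_desc   = c("cell_size <= 2", "bare_nuclei > 5",
                     "cell_shape <= 2", "thickness <= 4"),
  feature_weight = c(0.30, -0.15, 0.20, 0.10)
)
model_intercept <- 0.45  # invented intercept of the local surrogate

# Mirror plot_features(): positive weights support the label, others contradict it.
expl$effect <- ifelse(expl$feature_weight > 0, "Supports", "Contradicts")

# Assumption: each selected feature is an indicator equal to 1 for this case,
# so the surrogate's prediction is the intercept plus the sum of the weights.
surrogate_prediction <- model_intercept + sum(expl$feature_weight)

print(expl)
print(surrogate_prediction)
```

Here the negative "bare_nuclei > 5" weight pulls the surrogate down, but the three supporting features outweigh it, which is the situation the quote describes for case 7.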

If somebody could give me some intuition about, or an analysis of, the plot above, it would be a great step in the right direction for me.