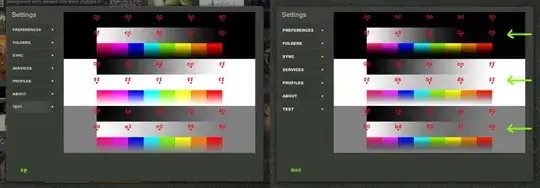

There are 3 backgrounds in the image above: black, white, and grey.

There are 3 bars on each one: black -> transparent, white -> transparent, and colors -> transparent

I am using glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA); and all my vertex colors are 1,1,1,0.

The defect is really visible in the white->transparent on white background.

On Windows XP (and other Windows flavors), it works perfectly, and I get fully white. On the Mac, however, I get grey in the middle!

What would cause this, and why would it get darker when I'm blending white on white?

Screenshot full size is @ http://dl.dropbox.com/u/9410632/mac-colorbad.png

Updated info:

On Windows, the OpenGL version doesn't seem to matter: 2.0 to 3.2 all work. The Mac I have in front of me now is on 2.1.

The gradients were held in textures, and all the vertexes are colored 1,1,1,1 (white rgb, full alpha). The backgrounds are just 1x1 pixel textures (atlased with the gradients) and the vertexes are colored as needed, with full alpha.

The atlas is created with glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, w, h, 0, GL_BGRA, GL_UNSIGNED_BYTE, data); the data comes from an ARGB DDS file that I composed myself.

I should also note that everything is drawn using a trivially simple shader:

```glsl
uniform sampler2D tex1;
uniform float alpha;

void main() {
    gl_FragColor = gl_Color * texture2D(tex1, gl_TexCoord[0].st) * vec4(1.0, 1.0, 1.0, alpha);
}
```

The alpha uniform is set to 1.0.

Now, I did try to change it so the white gradient was not a texture, but just 4 vertexes where the left ones were solid white and opaque, and the right ones were 1,1,1,0, and that worked!

I have triple checked the texture now, and it is only white, with varying alpha 1.0->0.0.

I'm thinking this may be a defaults issue. The version of OpenGL or the driver may initialize things differently.

For example, I recently found that everyone has GL_TEXTURE_2D glEnabled by default, but not the Intel GME965.

SOLUTION FOUND

First, a bit more background. This program is actually written in .NET (using Mono on OS X), and the DDS file I'm writing is an atlas automatically generated by compacting a directory of 24 bit PNG files into the smallest texture it can. I am loading those PNGs using System.Drawing.Bitmap and rendering them into a larger Bitmap after determining the layout. That post-layout Bitmap is then locked (to get at its bytes), and those bytes are written out to a DDS by code I wrote.

Upon reading Bahbar's advice, I checked out the textures in memory and they were indeed different! My DDS loader seemed to be the culprit, not any OpenGL settings. On a hunch today, I checked the DDS file itself on the two platforms (using a byte-for-byte comparison), and indeed, the files were different! When I loaded the DDS files in WTV ( http://developer.nvidia.com/object/windows_texture_viewer.html ), they looked identical. However, WTV lets you turn off each channel (R G B A) individually. When I toggled off the alpha channel, the Windows DDS looked really bad. No alpha means no antialiased edges, so of course it would look horrible. When I turned off the alpha channel on the OS X DDS, it looked fine!
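The channel check I did by eye in WTV can also be approximated in code. This is only a rough sketch: `looks_premultiplied` is a name I made up for illustration, and it assumes the pixels are already decoded into (r, g, b, a) tuples in the 0..255 range. In premultiplied data no colour channel can exceed alpha, so a white gradient stored with straight alpha fails the test immediately. It is a heuristic only: a fully opaque image, or a dark straight-alpha one, passes it as well.

```python
def looks_premultiplied(pixels):
    """Heuristic: True if no colour channel exceeds alpha in any pixel.

    pixels: iterable of (r, g, b, a) tuples with values in 0..255.
    Passing does not prove premultiplication; failing rules it out.
    """
    return all(max(r, g, b) <= a for r, g, b, a in pixels)


# White gradient with straight alpha (what I expected to be in the file).
straight_white = [(255, 255, 255, 255), (255, 255, 255, 128), (255, 255, 255, 0)]
# The same gradient after a premultiplying loader has touched it.
premultiplied_white = [(255, 255, 255, 255), (128, 128, 128, 128), (0, 0, 0, 0)]

print(looks_premultiplied(straight_white))       # False
print(looks_premultiplied(premultiplied_white))  # True
```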

The PNG loader in Mono is premultiplying, causing all my issues. I entered a ticket for them ( https://bugzilla.novell.com/show_bug.cgi?id=679242 ) and have switched to directly using libpng.
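To see why premultiplied data turns white-on-white grey, here is the blend arithmetic for a single colour channel, as a small sketch with values in 0..1. The function names are mine, for illustration only. `blend_src_alpha` models glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA), the blend function I was using; `blend_one` models glBlendFunc(GL_ONE, GL_ONE_MINUS_SRC_ALPHA), which is the usual choice when the texture really is premultiplied.

```python
def blend_src_alpha(src, src_a, dst):
    """One channel of GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA blending."""
    return src * src_a + dst * (1.0 - src_a)


def blend_one(src, src_a, dst):
    """One channel of GL_ONE, GL_ONE_MINUS_SRC_ALPHA blending."""
    return src + dst * (1.0 - src_a)


white = 1.0       # source colour before any premultiplication
background = 1.0  # white background

for a in (1.0, 0.75, 0.5, 0.25, 0.0):
    straight = blend_src_alpha(white, a, background)
    # A premultiplying loader stores white * a in the colour channel,
    # and the blend function then multiplies by a a second time.
    double_multiplied = blend_src_alpha(white * a, a, background)
    fixed = blend_one(white * a, a, background)
    print(f"alpha={a:.2f}  straight={straight:.4f}  "
          f"premultiplied={double_multiplied:.4f}  with GL_ONE={fixed:.4f}")
```

With straight alpha the result is 1.0 at every alpha. With premultiplied data and my blend function the result is a*a + (1 - a), which bottoms out at 0.75 when alpha is 0.5: exactly the grey band in the middle of the gradient.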

Thanks everyone!