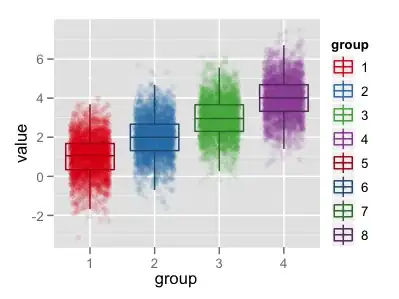

I am currently using sklearn's `LogisticRegression` to work on a synthetic 2D problem. The dataset is shown below:

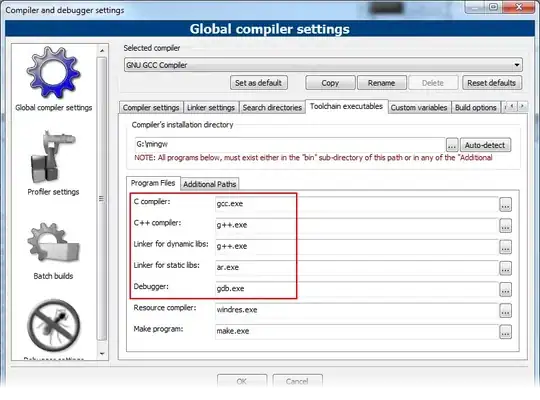

I'm basically plugging the data into sklearn's model, and this is what I'm getting (the light green; disregard the dark green):

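The code for this is only two lines: `model = LogisticRegression()` followed by `model.fit(tr_data, tr_labels)`. I've checked the plotting function, and that's fine as well. I haven't set a regularizer myself, although `LogisticRegression()` applies an L2 penalty with `C=1.0` by default (should that affect it?)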
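For reference, here is a minimal sketch of what that two-line setup actually configures. The data is a hypothetical stand-in for `tr_data`/`tr_labels` (two overlapping Gaussian clusters, top-right and bottom-left), not the dataset from the plots. It compares the default model (L2 penalty, `C=1.0`) with one using a very large `C`, which approximates an unregularized fit, and reads the boundary line off `coef_` and `intercept_`.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

# Hypothetical stand-in for tr_data / tr_labels: class 1 around (2, 2)
# (top-right) and class 0 around (-2, -2) (bottom-left), with some overlap.
rng = np.random.default_rng(0)
tr_data = np.vstack([
    rng.normal(loc=(2.0, 2.0), scale=1.5, size=(100, 2)),
    rng.normal(loc=(-2.0, -2.0), scale=1.5, size=(100, 2)),
])
tr_labels = np.array([1] * 100 + [0] * 100)

# LogisticRegression() is not unregularized: it uses an L2 penalty with C=1.0.
default_model = LogisticRegression()
# A very large C makes the penalty negligible
# (scikit-learn 1.2 and later also accept penalty=None).
weak_reg_model = LogisticRegression(C=1e6, max_iter=1000)

results = {}
for name, model in [("default (C=1.0)", default_model), ("C=1e6", weak_reg_model)]:
    model.fit(tr_data, tr_labels)
    w1, w2 = model.coef_[0]
    b = model.intercept_[0]
    # Binary boundary: w1*x1 + w2*x2 + b = 0, i.e. x2 = -(w1/w2)*x1 - b/w2
    results[name] = {
        "slope": -w1 / w2,
        "offset": -b / w2,
        "coef_norm": float(np.linalg.norm(model.coef_)),
        "train_acc": model.score(tr_data, tr_labels),
    }
    print(name, results[name])
```

Comparing `coef_norm` between the two runs shows how much the default penalty shrinks the weights on a given dataset.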

It seems really strange to me that the boundaries behave this way. Intuitively, I'd expect them to be more diagonal, since the data is (mostly) located top-right and bottom-left. From testing a few things, it seems that a few stray data points are what's causing the boundaries to behave like this.
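One way to check whether the plotted regions match what the model learned is to read the boundary straight off the fitted weights: classes i and j tie where (w_i - w_j)·x + (b_i - b_j) = 0. The helper below is a hypothetical utility (not part of scikit-learn) that puts that line into slope/offset form for 2D data; a diagonal boundary separating top-right from bottom-left would show up as a negative slope.

```python
def pairwise_boundary(coef, intercept, i, j):
    """Return (slope, offset) of the line x2 = slope * x1 + offset on which
    classes i and j get equal linear scores in a 2-feature linear classifier.

    coef:      per-class weight pairs, e.g. model.coef_ (n_classes x 2)
    intercept: per-class biases, e.g. model.intercept_
    """
    dw1 = coef[i][0] - coef[j][0]
    dw2 = coef[i][1] - coef[j][1]
    db = intercept[i] - intercept[j]
    if dw1 == 0 and dw2 == 0:
        raise ValueError("classes i and j have identical weights; no boundary line")
    if dw2 == 0:
        # The tie line is vertical, so it has no slope/offset form.
        raise ValueError(f"boundary is vertical at x1 = {-db / dw1}")
    return -dw1 / dw2, -db / dw2


# Toy weights (hypothetical): class 0 scores higher toward the top-right,
# class 1 is a zero baseline.
slope, offset = pairwise_boundary([(1.0, 1.0), (0.0, 0.0)], [0.5, 0.0], 0, 1)
print(slope, offset)  # -1.0 -0.5
```

For a binary scikit-learn model, `coef_` has a single row, so passing `[model.coef_[0], (0.0, 0.0)]` and `[model.intercept_[0], 0.0]` gives the one boundary line. In a multiclass model the pairwise line is only the visible border where classes i and j are the two highest-scoring classes, since `predict` picks the class with the largest decision score.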

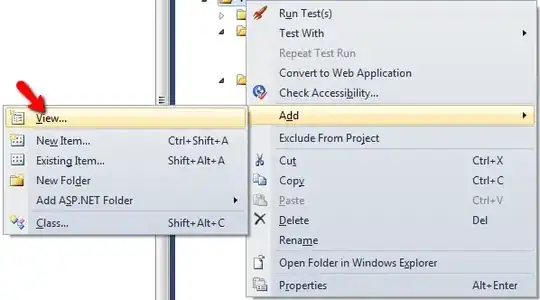

For example, here's another dataset and its boundaries.

Does anyone know what might be causing this? My understanding was that logistic regression shouldn't be this sensitive to outliers.
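A possible angle on the outlier question, offered as a hedged sketch: the gradient of the log-loss for a single point is (p - y)·x, so a badly misclassified point pulls on the weights with a force of up to ||x||, which grows the farther out it sits. Logistic regression is less sensitive than least squares because |p - y| is capped at 1, but distant stray points can still rotate the boundary. The experiment below uses hypothetical synthetic data (not the data from the plots) and adds five class-1 points in the top-left corner to see how far the boundary slope moves.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(0)
# Hypothetical clean data: class 1 top-right, class 0 bottom-left.
clean_X = np.vstack([
    rng.normal(loc=(2.0, 2.0), scale=1.5, size=(100, 2)),
    rng.normal(loc=(-2.0, -2.0), scale=1.5, size=(100, 2)),
])
clean_y = np.array([1] * 100 + [0] * 100)

# Hypothetical stray points: five class-1 points far out in the top-left corner.
stray_X = np.array([[-10.0, 10.0]] * 5) + rng.normal(scale=0.5, size=(5, 2))
stray_y = np.ones(5, dtype=int)


def boundary_slope(model):
    # Binary boundary w1*x1 + w2*x2 + b = 0 has slope -w1/w2.
    w1, w2 = model.coef_[0]
    return -w1 / w2


# Default penalty (L2, C=1.0); max_iter raised only to avoid convergence warnings.
clean_model = LogisticRegression(max_iter=1000).fit(clean_X, clean_y)
stray_model = LogisticRegression(max_iter=1000).fit(
    np.vstack([clean_X, stray_X]), np.concatenate([clean_y, stray_y])
)

slope_clean = boundary_slope(clean_model)
slope_stray = boundary_slope(stray_model)
print(f"slope without strays: {slope_clean:.3f}")
print(f"slope with 5 strays:  {slope_stray:.3f}")
# Accuracy of each model on the clean points only.
print(clean_model.score(clean_X, clean_y), stray_model.score(clean_X, clean_y))
```

Moving the stray points closer to the clusters (e.g. replacing `-10.0, 10.0` with smaller coordinates) is a quick way to see how the pull depends on distance.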