Context: I'm using a fully convolutional network to perform image segmentation. Typically, the input is an RGB image shape = [512, 256] and the target is a 2 channels binary mask defining the annotated regions (2nd channel is the opposite of the fist channel).

Question: I have the same CNN implementation using Tensorflow and Keras. But the Tensorflow model doesn't start learning. Actually, the loss even grows with the number of epochs! What is wrong in this Tensorflow implementation that prevents it from learning?

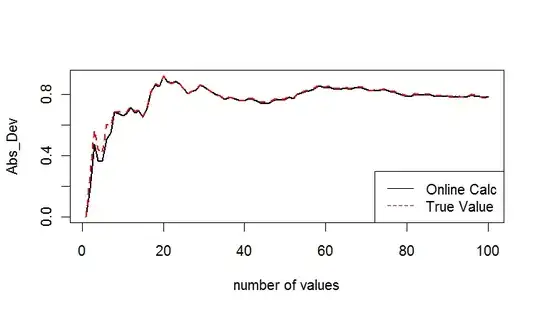

Setup: The dataset is split into 3 subsets: training (78%), testing (8%) and validation (14%) sets which are fed to the network by batches of 8 images. The graphs show the evolution of the loss for each subsets. The images show the prediction after 10 epoch for two different images.

Tensorflow implementation and results

import tensorflow as tf

tf.reset_default_graph()

x = inputs = tf.placeholder(tf.float32, shape=[None, shape[1], shape[0], 3])

targets = tf.placeholder(tf.float32, shape=[None, shape[1], shape[0], 2])

for d in range(4):

x = tf.layers.conv2d(x, filters=np.exp2(d+4), kernel_size=[3,3], strides=[1,1], padding="SAME", activation=tf.nn.relu)

x = tf.layers.max_pooling2d(x, strides=[2,2], pool_size=[2,2], padding="SAME")

x = tf.layers.conv2d(x, filters=2, kernel_size=[1,1])

logits = tf.image.resize_images(x, [shape[1], shape[0]], align_corners=True)

prediction = tf.nn.softmax(logits)

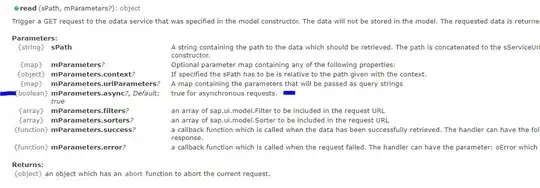

loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=targets, logits=logits))

optimizer = tf.train.RMSPropOptimizer(learning_rate=0.001).minimize(loss)

sess = tf.Session()

sess.run(tf.global_variables_initializer())

def run(mode, x_batch, y_batch):

if mode == 'TRAIN':

return sess.run([loss, optimizer], feed_dict={inputs: x_batch, targets: y_batch})

else:

return sess.run([loss, prediction], feed_dict={inputs: x_batch, targets: y_batch})

Keras implementation and reslults

import keras as ke

ke.backend.clear_session()

x = inputs = ke.layers.Input(shape=[shape[1], shape[0], 3])

for d in range(4):

x = ke.layers.Conv2D(int(np.exp2(d+4)), [3,3], padding="SAME", activation="relu")(x)

x = ke.layers.MaxPool2D(padding="SAME")(x)

x = ke.layers.Conv2D(2, [1,1], padding="SAME")(x)

logits = ke.layers.Lambda(lambda x: ke.backend.tf.image.resize_images(x, [shape[1], shape[0]], align_corners=True))(x)

prediction = ke.layers.Activation('softmax')(logits)

model = ke.models.Model(inputs=inputs, outputs=prediction)

model.compile(optimizer="rmsprop", loss="categorical_crossentropy")

def run(mode, x_batch, y_batch):

if mode == 'TRAIN':

loss = model.train_on_batch(x=x_batch, y=y_batch)

return loss, None

else:

loss = model.evaluate(x=x_batch, y=y_batch, batch_size=None, verbose=0)

prediction = model.predict(x=x_batch, batch_size=None)

return loss, prediction

There must be a difference between the two but my understanding of the documentation lead me nowhere. I would be really interested to know where the difference lies. Thanks in advance!