Oracle gurus,

We are at a point to decide the best approach to design a table with 500 columns wide vs a table with 8 columns wide but 4 billion rows deep. The table is going to be updated once a week, every Sunday with addition of a new week's (latest week in the past) data to the table. Since the data varies by the week number (fiscal), we have two groups of thoughts for the pros and cons on the above design -

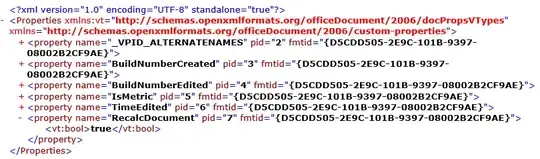

For the wide table - the thought is to design a table containing a 3-attribute columns for each week number all the way back for 160 weeks in the past. So that gives us a 160 x 3 = 480 columns wide. The idea is that every week when we add the data for the last week to the table, we will drop the oldest week columns from the table and will add the latest week columns to the table. This table will have approximately 40 million rows in it based on keys defined on ColA - ColD (pls refer to the picture below). Here's the example -

For the deep table - the ColA - ColD fields remain the same, except the fact that there is a new week column that varies by the key defined on ColA-ColD. When we build this table the idea is to stick only the latest week to the table with the appropriate week number and to have a separate purge (maintenance) process to remove the oldest week rows from the table. This table will have approximately 4 billion rows and 8 columns wide. Here's a sample on how it could look like -

We absolutely do understand the need to do partition here by the weeknumbers to either of the tables, whichever ones we pick. Use of the table - The table is going to be queried multiple times by concurrent users for a matching week number and ColA values for past 52 weeks and the expectation is to create a report out of it in less than 5 mins. I'am seeking advice from the Oracle Gurus here, whether you have in your experience seen a table as wide as having nearly 500 columns with the dropping or adding of columns every week as we build data to the table and how does it impact the performance for a highly concurrent report generation tool. Conversely, if you have worked with a table as deep as having 4 billion rows (but the columns do not change on a weekly basis) and what are the performance implications of a concurrent reporting process using this table.

Thank You and much appreciate for your time!! Brendon