- I downloaded and started the authoring environment (crafter-cms-authoring.zip)

- Created a site backed by a remote git repo, as described in: Create site based on a blueprint then push to remote bare git repository

- Created a content type and a new page.

- Published everything

Now I would expect to see my changes in the remote repo, but all I can see are the initial commits from step 2 above: no new content type, no new page, no "live" branch. (The content items are, however, visible in the local repo.)

What is missing?

Edit: Since Crafter can be set up in many ways, I am adding a deployment diagram and a short description to clarify my deployment scenario.

There are 3 hosts - one for each environment + shared git repo.

Authoring

This is where Studio runs and content authors make changes. Each change is saved to the local sandbox git repository. When content is published, the changes are pulled into the local published git repository. These two local repos are not accessible from other hosts.

Delivery

This is what provides published content to the end user/application.

The Deployer is responsible for getting newly published content to the delivery instance. It does so by polling (periodically pulling from) a specific git repository. When it pulls new changes, it updates the local site git repository and the Solr indexes.
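To make the polling behaviour concrete, here is a minimal sketch of one polling cycle in Python. It is not Crafter's Deployer code: the function names (`head_commit`, `pull`, `poll_once`) and the `on_change` callback (standing in for whatever reindexing happens after a pull) are hypothetical, and it assumes the plain `git` CLI is available on the host.

```python
import subprocess
from typing import Callable


def head_commit(repo_path: str) -> str:
    """Return the commit id that HEAD points to in the given repository."""
    result = subprocess.run(
        ["git", "rev-parse", "HEAD"],
        cwd=repo_path, check=True, capture_output=True, text=True,
    )
    return result.stdout.strip()


def pull(repo_path: str) -> None:
    """Fast-forward the local repository from its configured remote."""
    subprocess.run(["git", "pull", "--ff-only"], cwd=repo_path, check=True)


def poll_once(
    get_head: Callable[[], str],
    do_pull: Callable[[], None],
    on_change: Callable[[str, str], None],
) -> bool:
    """Pull once; call on_change(old, new) only if HEAD moved. Returns True on change."""
    before = get_head()
    do_pull()
    after = get_head()
    if after != before:
        on_change(before, after)  # hypothetical hook, e.g. trigger reindexing
        return True
    return False
```

A scheduler would call `poll_once` at a fixed interval. The point for this question is that the cycle only ever sees commits that already exist in the polled repository, so nothing reaches Delivery unless something pushes there first.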

Gitlab

This hosts the site git repository. It is accessible from both the Authoring and Delivery hosts. After its creation, the new site is pushed to this repo. The repo is also polled for new changes by the Deployers of the Delivery instances.

In order for this setup to work, the published changes must somehow end up in Gitlab's site repo, but they do not (this is the red communication path from the Authoring Deployer to Gitlab's site repo in the diagram).
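In git terms, the missing path is a push from the local published repository to the remote. A minimal sketch of that step in Python, assuming the plain `git` CLI is available on the Authoring host and that the published branch is named `live` (an assumption based on the branch I expected to see). The helper names `build_push_command` and `push_published` are hypothetical, and this is not how Crafter implements it internally.

```python
import subprocess
from typing import List


def build_push_command(remote_url: str, branch: str) -> List[str]:
    """Return the git argv that pushes a local branch to the same-named remote branch."""
    return ["git", "push", remote_url, f"{branch}:{branch}"]


def push_published(repo_path: str, remote_url: str, branch: str = "live") -> None:
    """Run the push from inside the published repository.

    Raises subprocess.CalledProcessError if git exits with a non-zero status.
    """
    subprocess.run(build_push_command(remote_url, branch), cwd=repo_path, check=True)
```

For example, `push_published("/opt/crafter-cms-authoring/data/repos/sites/mysite/published", "ssh://path/to/gitlab/site/mysite")` would attempt the push that never happens in my current setup (the URL is a placeholder, not a real address).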

Solution based on @summerz's answer

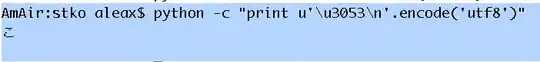

I implemented a GitPushProcessor and configured a new deployment target in the authoring Deployer by adding mysite-live.yaml to /opt/crafter-cms-authoring/data/deployer/target/:

    target:
      env: live
      siteName: codelists
      engineUrl: http://localhost:9080
      localRepoPath: /opt/crafter-cms-authoring/data/repos/sites/mysite/published
      deployment:
        pipeline:
          - processorName: gitPushProcessor
            remoteRepo:
              url: ssh://path/to/gitlab/site/mysite