I'm trying to train my own Haar cascade classifier for apples, following this article. I collected 1000 positive images and 500 negative images from the internet. The images have different sizes, and I cropped them to create the "info.txt" file. When I create the samples like this,

    createsamples.exe -info positive/info.txt -vec vector/applevector.vec -num 1000 -w 24 -h 24

there are the parameters -w and -h. What do they mean? Should I resize all my positive and negative images? I trained my classifier with the default parameters (-w 24 and -h 24), but its accuracy is very poor. Could that be related to these parameters? Thank you for any advice.

UPDATE

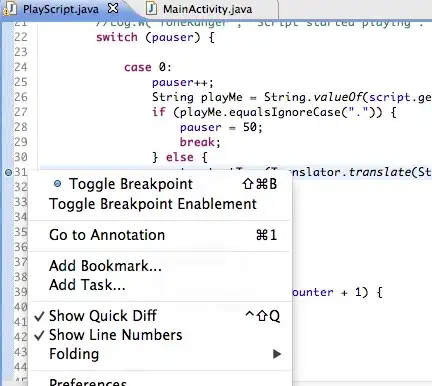

Here are some examples of my positive images. I collected them from the internet.