This seems like it should be simple, but I can't get it to work. I have two 100-dimensional vector spaces, and I have several matched vectors in each space. I want to find the transformation matrix (W) such that:

a_vector[0] (in vector space A) x W = b_vector[0] (in vector space B), or a close approximation.

So a paper mentions the formula for this.

So there is no bias term and no activation function of the kind we typically see.
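The paper's formula is not reproduced here. As a sketch only, assuming the goal is an ordinary least-squares fit with no bias and no activation, the problem could be written as follows. The notation is an assumption for illustration: the matched vectors are stacked as rows $a_i$ of a matrix $A$ and rows $b_i$ of a matrix $B$.

$$
\min_{W} \; \sum_{i} \lVert a_i W - b_i \rVert^2 \;=\; \min_{W} \; \lVert A W - B \rVert_F^2
$$

Under that assumption the gradient with respect to $W$ is $2A^\top(AW - B)$, and when $A^\top A$ is invertible the minimizer is $W = (A^\top A)^{-1} A^\top B$.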

I've tried using sklearn's LinearRegression without much success.

    from sklearn.linear_model import LinearRegression
    from sklearn.metrics import mean_squared_error

    # note: fit_intercept=True adds a bias term, even though the target mapping has none
    regression_model = LinearRegression(fit_intercept=True)
    regression_model.fit(X_train, y_train)

    regression_model.score(X_test, y_test)
    # > -1451478.4589335269 (!!???)

    y_predict = regression_model.predict(X_test)
    regression_model_mse = mean_squared_error(y_test, y_predict)
    # regression_model_mse = 524580.06

I also tried TensorFlow without much success. I don't care about the tool (TensorFlow, sklearn, anything else); I'm just looking for help with a solution.

Thanks.

EDIT

So I hand-rolled the code below, maximizing cosine similarity (which represents how close the predicted points are to the real points; 1.00 = perfect match), but it is VERY SLOW.

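    import sys
    from random import shuffle

    import numpy as np
    import scipy.spatial.distance

    shape = (100, 100)
    W1 = np.random.randn(*shape).astype(np.float64) / np.sqrt(sum(shape))
    avgs = []

    # endevec is assumed to be a list of matched (source_vector, target_vector) pairs
    for epoch in range(1000):
        shuffle(endevec)
        distance = []
        for src, tgt in endevec:
            pred1 = src.dot(W1)
            cosine = 1 - scipy.spatial.distance.cosine(pred1, tgt)
            distance.append(cosine)
            # error against the target vector, not the source vector
            diff = pred1 - tgt
            # gradient of the squared error w.r.t. W1 is the outer product of src and diff
            gradient = np.outer(src, diff) / W1.shape[0]
            W1 += -gradient * .0001
        avgs.append(np.mean(distance))
        # overwrite the same console line with the latest average cosine similarity
        sys.stdout.write('\r')
        sys.stdout.write(str(avgs[-1]))
        sys.stdout.flush()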
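Much of the slowness in a loop like this comes from updating W one pair at a time in Python. A minimal sketch of the same squared-error gradient descent done on all pairs at once is shown here. The data is synthetic, and the names `A`, `B`, `W_true`, `lr` and `mean_cosine` are assumptions for illustration, not part of the original code.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for the matched pairs (hypothetical data)
n, d = 500, 100
A = rng.standard_normal((n, d))                    # source vectors, one per row
W_true = rng.standard_normal((d, d)) / np.sqrt(d)  # mapping we try to recover
B = A @ W_true                                     # matched target vectors

W = rng.standard_normal((d, d)) / np.sqrt(2 * d)   # random initial guess
lr = 0.5                                           # step size chosen for this toy data

for step in range(200):
    diff = A @ W - B          # residuals for every pair at once, shape (n, d)
    grad = A.T @ diff / n     # gradient of the mean squared error (up to a factor of 2)
    W -= lr * grad

def mean_cosine(P, Q):
    """Average row-wise cosine similarity between two matrices."""
    num = np.sum(P * Q, axis=1)
    den = np.linalg.norm(P, axis=1) * np.linalg.norm(Q, axis=1)
    return float(np.mean(num / den))

final_cos = mean_cosine(A @ W, B)
print(final_cos)
```

The step size that works depends on the scale of the real vectors, so `lr` would probably need tuning on actual data.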

EDIT 2

Jeanne Dark below had a great answer for finding the transformation matrix using: M=np.linalg.lstsq(source_mtrx[:n],target_mtrx[:n])[0]

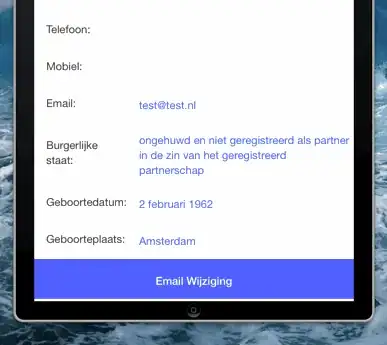
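For anyone who wants to try the `np.linalg.lstsq` approach end to end, here is a minimal sketch on synthetic data. Only `source_mtrx`, `target_mtrx` and the `lstsq` call come from the answer; the generated data, `true_M`, `n_train` and the noise level are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic matched vectors (hypothetical data): target = source @ true_M + small noise
n, d = 1000, 100
source_mtrx = rng.standard_normal((n, d))
true_M = rng.standard_normal((d, d))
target_mtrx = source_mtrx @ true_M + 0.01 * rng.standard_normal((n, d))

# Fit on the first n_train pairs; lstsq solves min ||source @ M - target||^2
n_train = 800
M = np.linalg.lstsq(source_mtrx[:n_train], target_mtrx[:n_train], rcond=None)[0]

# Evaluate on the held-out pairs with row-wise cosine similarity
pred = source_mtrx[n_train:] @ M
held_out = target_mtrx[n_train:]
cos = np.sum(pred * held_out, axis=1) / (
    np.linalg.norm(pred, axis=1) * np.linalg.norm(held_out, axis=1)
)

print("minmax:", (cos.min(), cos.max()))
print("mean:", cos.mean())
print("variance:", cos.var(ddof=1))
```

Because `lstsq` solves the least-squares problem directly, there is no learning rate or epoch count to tune.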

On my dataset of matched vectors, the cosine similarity between the vectors predicted with the transformation matrix found by this method and the real target vectors was:

minmax=(-0.09405095875263214, 0.9940633773803711)

mean=0.972490919224675 (1.0 being a perfect match)

variance=0.0011325349465895844

skewness=-18.317443753033665

kurtosis=516.5701661370497

There was a tiny number of really big outliers.

The plot of cosine similarity was: