Do you have any suggestions for this?

I am following the steps in a research paper to re-implement it for my specific problem. I am not a professional programmer, and I have been struggling with this for more than a month. When I reach the scoring step with the generalized Hough transform, I do not get any result: the output is a blank image and the object center is not found. What I did includes the following steps:

I define a spatially constrained area in a training image and extract SIFT features within that area. The red point in the center marks the object center in the template (training) image.

These are the interest points extracted by SIFT in the query image:

Keypoints are matched under two conditions: 1) they must be quantized to the same visual word, and 2) they must be spatially consistent. After applying these conditions I get the following points:

- I have 15 and 14 points for the template and query images, respectively. I pass these points, along with the coordinates of the object center in the template image, to a generalized Hough transform (code that I found on GitHub). The code works properly on its default images. However, with the few points that my algorithm produces, I do not know what I am doing wrong.
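For reference, this is a minimal sketch of the center voting step as I understand it, not the GitHub code. It assumes translation only (no rotation or scale) and one query point per template point, which is an assumption since my two point sets have different sizes. The names `vote_center` and `cell` are hypothetical.

```python
import numpy as np

def vote_center(template_pts, query_pts, template_center, image_shape, cell=4):
    """Translation-only Hough voting from matched keypoint pairs given as (x, y)."""
    template_pts = np.asarray(template_pts, dtype=float)
    query_pts = np.asarray(query_pts, dtype=float)
    if template_pts.shape != query_pts.shape:
        raise ValueError("expected one query point per template point")
    # Displacement from each template keypoint to the template object center.
    offsets = np.asarray(template_center, dtype=float) - template_pts
    # Each matched query keypoint predicts a center by applying the same offset.
    votes = query_pts + offsets
    h, w = image_shape
    # Coarse accumulator: one counter per cell x cell block of pixels.
    acc = np.zeros((h // cell + 1, w // cell + 1), dtype=int)
    for x, y in votes:
        if 0 <= x < w and 0 <= y < h:
            acc[int(y) // cell, int(x) // cell] += 1
    row, col = np.unravel_index(np.argmax(acc), acc.shape)
    # Return the middle of the winning cell and the number of votes it received.
    return (col * cell + cell / 2, row * cell + cell / 2), int(acc[row, col])
```

With only about 15 votes, a fine accumulator can leave every cell with a single vote, so the peak may not stand out; the `cell` size controls that trade-off in this sketch.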

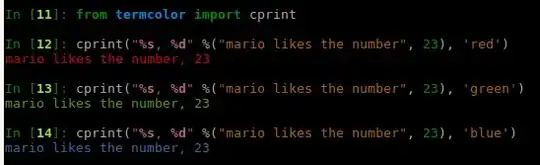

I thought the theta calculation might be the cause, so I changed this line to return the absolute values of the y and x differences, but it did not help.
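To make that change concrete, here is a minimal sketch comparing the two ways of computing theta. It is not the GitHub code: `theta_signed` and `theta_abs` are hypothetical names, and I am assuming the angle is computed with `atan2` on the y and x differences.

```python
import math

def theta_signed(dx, dy):
    """Angle in degrees in [0, 360), keeping the signs of dx and dy."""
    return math.degrees(math.atan2(dy, dx)) % 360.0

def theta_abs(dx, dy):
    """Angle in degrees after taking absolute values, as in my change."""
    return math.degrees(math.atan2(abs(dy), abs(dx)))

# Two different directions that the abs() version can no longer tell apart.
print(theta_signed(-1.0, 1.0), theta_abs(-1.0, 1.0))
print(theta_signed(1.0, 1.0), theta_abs(1.0, 1.0))
```

Taking absolute values folds every direction into the range 0 to 90 degrees, so directions from different quadrants end up with the same theta.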

In line 20 they only consider 90 degrees for binning. What is the reason for this, and how can I define the binning for my problem and for the range of rotation angles around the center of the object?

- Does the binning range affect the center calculation?
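To show what I mean by defining my own binning, here is a minimal sketch of an angle-to-bin mapping. The function `angle_bin` and its parameters `n_bins` and `angle_range` are hypothetical and are not taken from the GitHub code.

```python
def angle_bin(theta_deg, n_bins=36, angle_range=360.0):
    """Map an angle in degrees to a bin index in [0, n_bins)."""
    width = angle_range / n_bins
    # Wrap the angle into [0, angle_range) before dividing into bins.
    index = int((theta_deg % angle_range) // width)
    # Guard against floating-point edge cases that land exactly on the upper bound.
    return min(index, n_bins - 1)

# The same angle falls into different bins depending on the chosen range.
print(angle_bin(95.0, n_bins=36, angle_range=360.0))
print(angle_bin(95.0, n_bins=9, angle_range=90.0))
```

In the classic generalized Hough transform the R-table is indexed by this bin, so if a template angle and the corresponding query angle land in different bins, the stored displacements are looked up under the wrong key and the votes do not accumulate at one center.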

I would really appreciate it if you could let me know what I am doing wrong here.