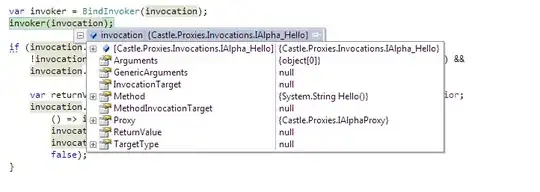

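```python
def mystery11(n):
    if n < 1:
        return n

    def mystery12(n):
        # Doubles i until it reaches n: about log2(n) iterations
        i = 1
        while i < n:
            i *= 2
        return i

    # Two recursive calls on n/2, plus one call to the helper on n-2
    return mystery11(n/2) + mystery11(n/2) + mystery12(n-2)
```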

I have a question about the code above. I completely understand that without the final call to mystery12, the runtime of mystery11 would be Θ(n). But I don't believe that Θ(log(n)) work is being done at each level.

At the first level we do log(n) work, at the next level 2·log(n/2), then 4·log(n/4), and so on. That doesn't look like log(n) per level; it feels closer to 2·log(n) at the second level, 4·log(n) at the third level, etc.
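To check the per-level totals empirically, here is a minimal sketch that replays mystery11's call tree (ignoring its return value) and counts how many loop iterations mystery12 performs at each recursion depth. The helper names `mystery12_steps` and `work_per_level` are hypothetical, introduced only for this illustration, and the count assumes the same float halving (`n/2`) as the original code.

```python
from collections import defaultdict


def mystery12_steps(n):
    """Count the loop iterations mystery12(n) performs (i doubles until i >= n)."""
    i, steps = 1, 0
    while i < n:
        i *= 2
        steps += 1
    return steps


def work_per_level(n):
    """Hypothetical helper: replay mystery11's call tree and total the
    mystery12 loop iterations at each recursion depth (0 = top-level call)."""
    totals = defaultdict(int)

    def walk(m, depth):
        if m < 1:  # base case of mystery11: mystery12 is never called
            return
        walk(m / 2, depth + 1)  # first recursive call
        walk(m / 2, depth + 1)  # second recursive call
        totals[depth] += mystery12_steps(m - 2)  # the mystery12(n-2) call

    walk(n, 0)
    return dict(sorted(totals.items()))


if __name__ == "__main__":
    for n in (2**4, 2**8, 2**12):
        levels = work_per_level(n)
        print(n, levels, "total:", sum(levels.values()))
```

For example, `work_per_level(16)` reports 4 iterations at depth 0, 6 at depth 1 (two calls of 3), and 4 at depth 2 (four calls of 1), with 0 at the deeper levels. Comparing these totals across larger powers of two shows how the per-level work actually grows.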

I've also tried Wolfram Alpha, but it just reports that no solutions exist. It works fine without the log(n) term, though.
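For reference, a sketch of the recurrence the code seems to correspond to, assuming each mystery12(n-2) call costs Θ(log n) and treating n/2 as exact halving:

$$
T(n) = 2\,T\!\left(\tfrac{n}{2}\right) + \Theta(\log n), \qquad T(n) = \Theta(1) \ \text{for } n < 1
$$

Summing only the mystery12 work level by level (up to constant factors), as in the per-level breakdown above, gives

$$
\sum_{k=0}^{\lfloor \log_2 n \rfloor} 2^k \log\frac{n}{2^k} = \log n + 2\log\frac{n}{2} + 4\log\frac{n}{4} + \cdots
$$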

So, is this solution, Θ(n log(n)), correct? And if not, what is the actual solution?

P.S. Apologies if anything in my post breaks etiquette; this is my second time posting on Stack Overflow. Leave a comment and I will fix it.