I already have a working facial landmark detector and can save each detected face part as an image using OpenCV and dlib with the code below:

    # import the necessary packages
    from imutils import face_utils
    import numpy as np
    import argparse
    import imutils
    import dlib
    import cv2

    # construct the argument parser and parse the arguments
    ap = argparse.ArgumentParser()
    ap.add_argument("-p", "--shape-predictor", required=True, help="Path to facial landmark predictor")
    ap.add_argument("-i", "--image", required=True, help="Path to input image")
    args = vars(ap.parse_args())

    # initialize dlib's face detector (HOG-based) and then create the facial landmark predictor
    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(args["shape_predictor"])

    # load the input image, resize it, and convert it to grayscale
    image = cv2.imread(args["image"])
    image = imutils.resize(image, width=500)
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # detect faces in the grayscale image
    rects = detector(gray, 1)

    for (i, rect) in enumerate(rects):
        # determine the facial landmarks for the face region, then
        # convert the landmark (x, y)-coordinates to a NumPy array
        shape = predictor(gray, rect)
        shape = face_utils.shape_to_np(shape)

        # loop over the face parts individually
        print(face_utils.FACIAL_LANDMARKS_IDXS.items())
        for (name, (i, j)) in face_utils.FACIAL_LANDMARKS_IDXS.items():
            print(" i = ", i, " j = ", j)

            # clone the original image so we can draw on it, then
            # display the name of the face part on the image
            clone = image.copy()
            cv2.putText(clone, name, (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

            # loop over the subset of facial landmarks, drawing the
            # specific face part using red dots
            for (x, y) in shape[i:j]:
                cv2.circle(clone, (x, y), 1, (0, 0, 255), -1)

            # extract the ROI of the face region as a separate image
            (x, y, w, h) = cv2.boundingRect(np.array([shape[i:j]]))
            roi = image[y:y+h, x:x+w]
            roi = imutils.resize(roi, width=250, inter=cv2.INTER_CUBIC)

            # show the particular face part and save it to disk
            cv2.imshow("ROI", roi)
            cv2.imwrite(name + '.jpg', roi)
            cv2.imshow("Image", clone)
            cv2.waitKey(0)

        # visualize all facial landmarks with a transparent overlay
        output = face_utils.visualize_facial_landmarks(image, shape)
        cv2.imshow("Image", output)
        cv2.waitKey(0)

As a test, I ran this on a photo of Arnold's face and saved one part of it with OpenCV's `cv2.imwrite`:

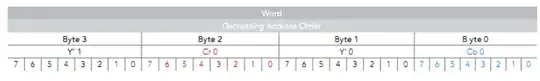

What I'm trying to achieve is to get the image of the jaw only and I don't want to save the neck part. See the image below:

Does anyone have an idea how I can remove everything except the jaw region detected by dlib?