I have the following likelihood function which I used in a rather complex model (in practice on a log scale):

library(plyr)

dcustom=function(x,sd,L,R){

R. = (log(R) - log(x))/sd

L. = (log(L) - log(x))/sd

ll = pnorm(R.) - pnorm(L.)

return(ll)

}

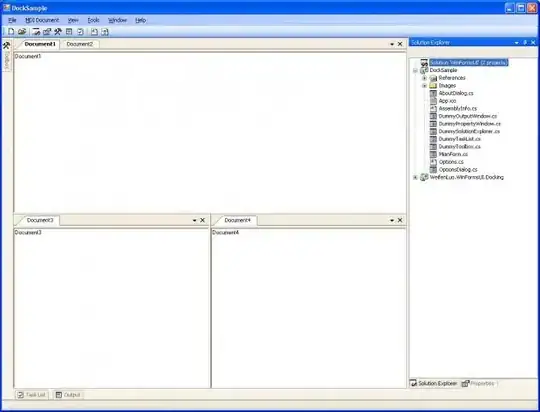

df=data.frame(Range=seq(100,500),sd=rep(0.1,401),L=200,U=400)

df=mutate(df, Likelihood = dcustom(Range, sd,L,U))

with(df,plot(Range,Likelihood,type='l'))

abline(v=200)

abline(v=400)

In this function, the sd is predetermined and L and R are "observations" (very much like the endpoints of a uniform distribution), so all 3 of them are given. The above function provides a large likelihood (1) if the model estimate x (derived parameter) is in between the L-R range, a smooth likelihood decrease (between 0 and 1) near the bounds (of which the sharpness is dependent on the sd), and 0 if it is too much outside.

This function works very well to obtain estimates of x, but now I would like to do the inverse: draw a random x from the above function. If I would do this many times, I would generate a histogram that follows the shape of the curve plotted above.

The ultimate goal is to do this in C++, but I think it would be easier for me if I could first figure out how to do this in R.

There's some useful information online that helps me start (http://matlabtricks.com/post-44/generate-random-numbers-with-a-given-distribution, https://stats.stackexchange.com/questions/88697/sample-from-a-custom-continuous-distribution-in-r) but I'm still not entirely sure how to do it and how to code it.

I presume (not sure at all!) the steps are:

- transform likelihood function into probability distribution

- calculate the cumulative distribution function

- inverse transform sampling

Is this correct and if so, how do I code this? Thank you.