I am working on a descriptive (NOT predictive) analysis in which I wish to compare the magnitudes of the coefficients, including the intercept, from a logistic regression. As each variable needs to be described, I have tried a standard glm logit regression; knowing that many variables are at least partially correlated, I am also trying ridge regression to see how the results differ.

The issue I have is that every guide I've seen recommends reading the coefficients at lambda.min or lambda.1se; for me, however, the coefficients at these values of lambda are all zero. I can arbitrarily pick a lambda that returns non-zero values, but I don't know whether that is valid.

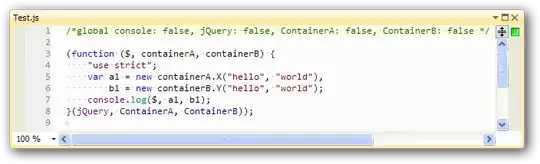

library(glmnet)

CT.base <- readRDS('CTBaseObj.rds')  # load the data object

# Standard (unpenalised) logistic regression
regular <- glm(Flag ~ . - Occurrences, family = binomial(link = "logit"),
               data = CT.base, weights = Occurrences, maxit = 50)

# Ridge
x <- model.matrix(Flag ~ . - Occurrences, CT.base)
x <- x[, !colnames(x) %in% '(Intercept)']  # glmnet fits its own intercept
y <- CT.base$Flag
w <- CT.base$Occurrences

# parallel = TRUE only helps if a foreach backend (e.g. doParallel) is registered
CT.cv <- cv.glmnet(x, y, family = "binomial",
                   weights = w, alpha = 0.0, parallel = TRUE, type.measure = "class")
plot(CT.cv)

#CT.reg <- coef(CT.cv, s = CT.cv$lambda.1se)  # coefficients here are zero
# s is lambda itself, not log(lambda); assuming -3 was read off the plot's log(lambda) axis
CT.reg <- coef(CT.cv, s = exp(-3))  # Looks like an interesting value!?
CT.reg <- data.frame(name = CT.reg@Dimnames[[1]][CT.reg@i + 1], coefficient = CT.reg@x)
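
For anyone without access to the data, here is a minimal sketch of the same coefficient lookup on simulated data. The names `x_sim`, `y_sim` and `cv_sim` are made up for illustration, and the simulated model has nothing to do with my real data set. It only illustrates that `s` in `coef()` is on the lambda scale, whereas the x-axis of the plot is assumed to be log(lambda), as it is labelled.

```r
library(glmnet)

set.seed(1)
n <- 200
p <- 5

# Hypothetical data: 5 standard-normal predictors, binary outcome driven by the first two
x_sim <- matrix(rnorm(n * p), nrow = n, ncol = p)
y_sim <- rbinom(n, size = 1, prob = plogis(x_sim[, 1] - 0.5 * x_sim[, 2]))

# Ridge (alpha = 0) logistic regression with cross-validation, default loss (deviance)
cv_sim <- cv.glmnet(x_sim, y_sim, family = "binomial", alpha = 0)

# The selected lambdas on the log scale, for comparison with the plot axis
log_lambdas <- log(c(min = cv_sim$lambda.min, `1se` = cv_sim$lambda.1se))
print(log_lambdas)

# Coefficients (intercept included) at each selected lambda, as dense columns;
# s is passed on the lambda scale, not the log scale
coefs <- cbind(
  lambda.min = as.matrix(coef(cv_sim, s = cv_sim$lambda.min))[, 1],
  lambda.1se = as.matrix(coef(cv_sim, s = cv_sim$lambda.1se))[, 1]
)
print(coefs)
```

Converting with `as.matrix()` keeps every row, including coefficients that are exactly zero, which the `@i`/`@x` extraction above would silently drop.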

I've linked the data set behind this for reproducibility (https://drive.google.com/open?id=1YMkY-WWtKSwRREqGPkSVfsURaImItEiO), though it may not be necessary. Any advice gladly received.

Thanks.