As stated in RFC 2435, section 3.1.5, the dimensions of a JPEG image are stored in the RTP/JPEG header as multiples of 8 pixels; the actual JPEG file, however, has no such restriction.
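To make the constraint concrete, here is a minimal sketch of packing the 8-byte RTP/JPEG main header from RFC 2435, section 3.1 (Type-specific, 24-bit Fragment Offset, Type, Q, Width, Height), with the pixel dimensions divided by 8. The function name `pack_rtp_jpeg_header` and the example values (Type 1, Q 80) are hypothetical, and the sketch deliberately rejects sizes that are not multiples of 8, since that case is what this question is about.

```python
def pack_rtp_jpeg_header(frag_offset: int, jpeg_type: int, q: int,
                         width_px: int, height_px: int,
                         type_specific: int = 0) -> bytes:
    """Return the 8-byte RTP/JPEG main header (RFC 2435, section 3.1).

    Hypothetical helper: it only accepts dimensions that are exact
    multiples of 8, because Width/Height are sent in 8-pixel units.
    """
    if width_px % 8 or height_px % 8:
        raise ValueError("width and height must be multiples of 8 here")
    width_field, height_field = width_px // 8, height_px // 8
    # Each field is a single byte, so 255 * 8 = 2040 pixels is the maximum.
    if not (1 <= width_field <= 255 and 1 <= height_field <= 255):
        raise ValueError("dimensions must be between 8 and 2040 pixels")
    return (bytes([type_specific])
            + frag_offset.to_bytes(3, "big")  # 24-bit fragment offset
            + bytes([jpeg_type, q, width_field, height_field]))


# A 320x240 frame is sent with Width = 40 and Height = 30.
print(pack_rtp_jpeg_header(0, 1, 80, 320, 240).hex())  # → 000000000150281e
```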

How should I accommodate images whose dimensions are not a multiple of 8? Should the image be resized to the nearest multiple, or should I increase the stored value by one?

The latter option seems the most reasonable for streaming the original image accurately, but could it lead to segment dislocation or a stretched image, or just a strip of garbage pixels along the edge?
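The two options can be compared with a little arithmetic. The sketch below assumes that a receiver derives its MCU grid purely from the header's Width field, and that MCUs are 16 pixels wide (horizontally subsampled chroma, as in RFC 2435 types 0 and 1); the helper names are hypothetical. It only shows how many MCUs per row each choice implies versus how many the JPEG scan actually contains; it does not claim to explain the screenshots.

```python
def field_from_pixels(pixels: int, round_up: bool) -> int:
    """Convert a pixel dimension to an 8-pixel header field (hypothetical)."""
    # Floor division drops the leftover pixels; ceiling division
    # ("store one more") covers them with a partially filled block.
    return -(-pixels // 8) if round_up else pixels // 8


def mcu_count(pixels: int, mcu_size: int) -> int:
    """MCUs needed to cover `pixels`; JPEG pads a partial last MCU."""
    return -(-pixels // mcu_size)


def mcu_row_check(width_px: int, round_up: bool,
                  mcu_width: int = 16) -> tuple[int, int]:
    """Return (MCUs per row implied by the header, MCUs per row in the scan)."""
    field = field_from_pixels(width_px, round_up)
    return mcu_count(field * 8, mcu_width), mcu_count(width_px, mcu_width)


for round_up in (False, True):
    implied, actual = mcu_row_check(321, round_up)
    print(f"round_up={round_up}: header implies {implied} MCUs/row, scan has {actual}")
# round_up=False: header implies 20 MCUs/row, scan has 21
# round_up=True: header implies 21 MCUs/row, scan has 21
```

Under these assumptions, rounding down makes the implied row length one MCU short, while rounding up keeps it in step with the scan; the cost is that the receiver decodes a frame up to 7 pixels wider or taller than the source, and those extra pixels presumably hold whatever padding the encoder used. The same arithmetic applies to height.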

Edit:

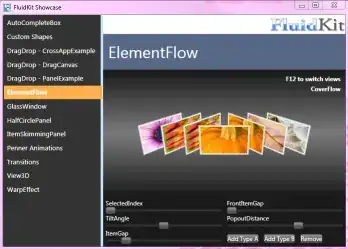

Here is the original image:

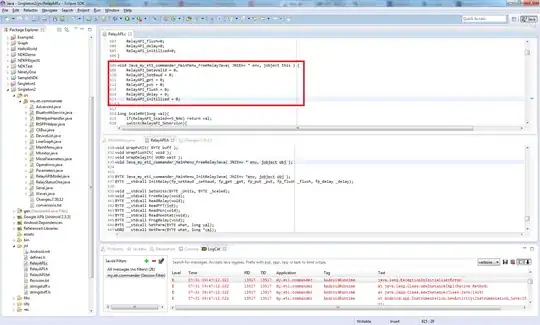

Here is a screenshot from VLC without increasing the dimension values by one:

Here is a screenshot after increasing the dimension values to account for excess pixels:

As you can see, both show segment dislocation, although it manifests differently in each. On top of this, there is clearly a problem with the quantization tables, although that may be a side effect of the segment dislocation.
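Since the quantization tables are in question, here is a minimal sketch of the Quantization Table header that RFC 2435 (section 3.1.8) places after the main header in the first packet of a frame when Q is 128 or higher: an MBZ byte, a Precision byte, a 16-bit Length, then the table data. The function name is hypothetical, and the sketch assumes 8-bit tables (Precision = 0) passed in as the 64 raw bytes taken from the file's DQT segment; whether that byte order matches what the receiver expects is worth checking against the RFC.

```python
def pack_qtable_header(tables: list[bytes]) -> bytes:
    """Build an RFC 2435 Quantization Table header plus table data.

    Hypothetical helper: assumes 8-bit tables of exactly 64 bytes each.
    """
    for table in tables:
        if len(table) != 64:
            raise ValueError("this sketch only handles 8-bit, 64-byte tables")
    data = b"".join(tables)
    mbz = 0        # must be zero
    precision = 0  # bit i set would mean table i uses 16-bit entries
    return bytes([mbz, precision]) + len(data).to_bytes(2, "big") + data


# Luma + chroma tables: Length = 128, so the header starts 00 00 00 80.
print(pack_qtable_header([bytes(64), bytes(64)])[:4].hex())  # → 00000080
```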

What is a proper solution for dealing with the excess pixels? What is the cause of the segment dislocation?