I am trying some marketing analytics using R and SQL.

I have these two datasets:

                               user_id install_date app_version
    1 000a0efdaf94f2a5a09ab0d03f92f5bf   2014-12-25          v1
    2 000a0efdaf94f2a5a09ab0d03f92f5bf   2014-12-25          v1
    3 000a0efdaf94f2a5a09ab0d03f92f5bf   2014-12-25          v1
    4 000a0efdaf94f2a5a09ab0d03f92f5bf   2014-12-25          v1
    5 000a0efdaf94f2a5a09ab0d03f92f5bf   2014-12-25          v1
    6 002a9119a4b3dfb05e0159eee40576b6   2015-12-29          v2

                       user_session_id     event_timestamp   app time_seconds
    1 f3501a97f8caae8e93764ff7a0a75a76 2015-06-20 10:59:22  draw          682
    2 d1fdd0d46f2aba7d216c3e1bfeabf0d8 2015-05-04 18:06:54 build         1469
    3 b6b9813985db55a4ccd08f9bc8cd6b4e 2016-01-31 19:27:12 build          261
    4 ce644b02c1d0ab9589ccfa5031a40c98 2016-01-31 18:44:01  draw          195
    5 7692f450607a0a518d564c0a1a15b805 2015-06-18 15:39:50  draw          220
    6 4403b5bc0b3641939dc17d3694403773 2016-03-17 21:45:12 build          644

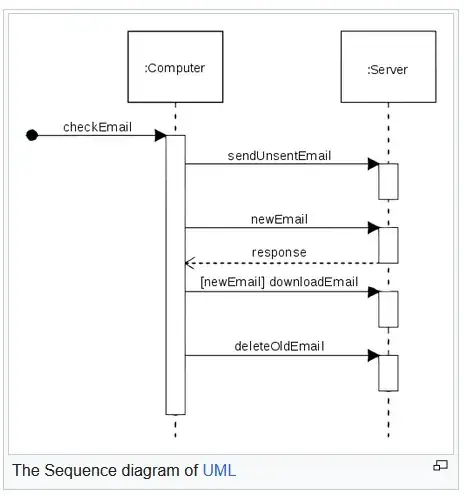

I want to create a plot that looks like this:

but showcases it per version like this (just focus on the versions part of this graph - so like the above picture but 1 time per version like the one below):

Basically, it should show the percentage of retention and churn by month for each version. This is what I have done so far:

    library(sqldf)

    # Events per user, month and app version, keeping groups with more than one event
    ee <- sqldf("select user_id, count(user_id) as n,
                        strftime('%Y-%m', event_timestamp) as dt, app_version
                 from w
                 group by user_id, strftime('%Y-%m', event_timestamp), app_version
                 having count(*) > 1
                 order by n desc")

    ee

                               user_id   n      dt app_version
    1 fab9612cea12e2fcefab8080afa10553 238 2015-11          v2
    2 fab9612cea12e2fcefab8080afa10553 204 2015-12          v2
    3 121d81e4b067e72951e76b7ed8858f4e 173 2016-01          v2
    4 121d81e4b067e72951e76b7ed8858f4e 169 2016-02          v2
    5 fab9612cea12e2fcefab8080afa10553  98 2015-10          v2

The above shows, per month and version, the users who used the app more than once. This is the population that the retention rate analysis refers to.

What I am having difficulty with is summarizing each user_id's events over time (the event_timestamp column) to find the retention / churn outcome shown in the first image I mentioned.
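To make the goal concrete, here is a minimal base R sketch of the kind of summary I am after. It rests on several assumptions: the toy data frame stands in for my table `w` (the values are made up, only the column names match), `event_timestamp` is stored as text in `YYYY-MM-DD HH:MM:SS` form, and "retained" means a user who was active in a given month and also in the month before it. The helper names `month_index` and `retention_by_version` are hypothetical, and this is only one possible definition of retention, not necessarily the one behind the image.

```r
# Toy stand-in for `w` (hypothetical values, same column names as in my query)
w <- data.frame(
  user_id = c("a", "a", "a", "b", "b", "c", "c", "c", "d", "d"),
  event_timestamp = c(
    "2015-10-03 10:00:00", "2015-11-05 09:30:00", "2015-12-01 08:00:00",
    "2015-10-10 12:00:00", "2015-11-11 13:00:00",
    "2015-10-02 07:00:00", "2015-10-20 07:00:00", "2015-12-15 18:00:00",
    "2015-10-07 20:00:00", "2015-11-09 21:00:00"),
  app_version = c("v1", "v1", "v1", "v1", "v1", "v2", "v2", "v2", "v2", "v2"),
  stringsAsFactors = FALSE
)

# One row per user, version and calendar month in which the user was active
w$month <- substr(w$event_timestamp, 1, 7)
active <- unique(w[, c("user_id", "app_version", "month")])

# Map "YYYY-MM" to a running integer so consecutive months differ by exactly 1
month_index <- function(m) {
  as.integer(substr(m, 1, 4)) * 12L + as.integer(substr(m, 6, 7))
}

# Month-over-month retention and churn per version (assumed definition, see above)
retention_by_version <- function(active) {
  per_version <- lapply(split(active, active$app_version), function(v) {
    idx <- month_index(v$month)
    if (min(idx) == max(idx)) return(NULL)  # a single month has nothing to compare
    rows <- lapply(seq(min(idx) + 1L, max(idx)), function(m) {
      prev <- v$user_id[idx == m - 1L]      # users active in the previous month
      curr <- v$user_id[idx == m]           # users active in this month
      retained <- length(intersect(prev, curr))
      pct <- if (length(prev) > 0) 100 * retained / length(prev) else NA_real_
      data.frame(
        app_version = v$app_version[1],
        month = sprintf("%04d-%02d", (m - 1L) %/% 12L, (m - 1L) %% 12L + 1L),
        prev_active = length(prev),
        retained = retained,
        retention_pct = pct,
        churn_pct = 100 - pct,
        stringsAsFactors = FALSE
      )
    })
    do.call(rbind, rows)
  })
  out <- do.call(rbind, per_version)
  rownames(out) <- NULL
  out
}

retention <- retention_by_version(active)
retention
```

The result has one row per version and month, so it could be drawn once per version, for example with ggplot2 and `facet_wrap(~ app_version)`. What I cannot work out is how to get from my real events to this shape reliably.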