I'm trying to make thousands of GET requests in as little time as possible, and I need to do it in a scalable way: for a fixed number of URLs, doubling the number of servers making the requests should halve the time to complete.
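Stated as a formula (my own notation, not taken from Celery or any library): if $T(n)$ is the wall-clock time to fetch the fixed set of URLs using $n$ worker servers, the goal is linear scaling, and the parallel efficiency $E(n)$ measures how close a run comes to it:

$$
T(n) = \frac{T(1)}{n}, \qquad E(n) = \frac{T(1)}{n \, T(n)}
$$

Perfect scaling gives $E(n) = 1$. If adding servers leaves the time unchanged, then $T(n) \approx T(1)$ and the efficiency falls to roughly $1/n$.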

I'm using Celery with the eventlet pool and RabbitMQ as the broker. I run one worker process on each worker server with `--concurrency 100`, and a dedicated master server issues the tasks (code below). I'm not getting the results I expect: doubling the number of worker servers does not reduce the time to complete at all.

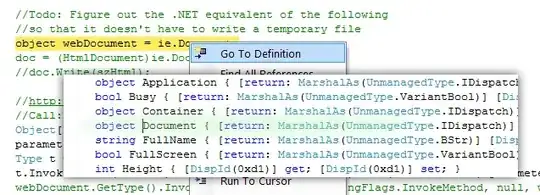

It appears that as I add more worker servers, the utilization of each worker goes down (as reported by Flower). For example, with 2 workers the number of active threads per worker hovers in the 80 to 90 range throughout execution (as expected, since concurrency is 100). With 6 workers, however, it hovers in the 10 to 20 range.
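Multiplying the per-worker figures by the number of workers makes the problem sharper: the cluster as a whole appears to keep fewer requests in flight with 6 workers than with 2. The sketch below only does that arithmetic on the ranges reported by Flower; the helper name `cluster_active` is my own.

```python
# Per-worker active-thread ranges reported by Flower (from the observations above).
observations = {
    2: (80, 90),  # 2 workers: 80-90 active threads each
    6: (10, 20),  # 6 workers: 10-20 active threads each
}
CONCURRENCY = 100  # --concurrency 100 on every worker


def cluster_active(workers, low, high):
    """Return the (min, max) total active threads across all workers."""
    return workers * low, workers * high


for workers, (low, high) in sorted(observations.items()):
    total_low, total_high = cluster_active(workers, low, high)
    capacity = workers * CONCURRENCY  # total green-thread slots in the cluster
    print(f"{workers} workers: {total_low}-{total_high} active of {capacity} slots "
          f"({100 * total_low / capacity:.0f}-{100 * total_high / capacity:.0f}% utilized)")
```

This prints `2 workers: 160-180 active of 200 slots (80-90% utilized)` and `6 workers: 60-120 active of 600 slots (10-20% utilized)`, so tripling the worker count did not raise the total number of concurrent requests at all.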

It's almost as if the queue were too small, or the worker servers can't pull tasks off the queue fast enough to stay fully utilized, and the more workers I add, the harder it becomes for each of them to pull tasks off quickly.
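One way to make this hypothesis concrete is a simple two-stage bottleneck model. This is an assumption, not something measured here: if tasks can reach the workers at no more than some fixed rate (limited, say, by the single master publishing them or by the broker delivering them), cluster throughput is the smaller of that rate and the workers' combined capacity. The function names, `request_latency`, `dispatch_rate`, and the numbers in the usage note are all hypothetical.

```python
def throughput(workers, concurrency, request_latency, dispatch_rate):
    """Tasks completed per second under a simple two-stage bottleneck model.

    workers:         number of worker servers
    concurrency:     green threads per worker (e.g. --concurrency 100)
    request_latency: seconds per GET request (hypothetical, assumed constant)
    dispatch_rate:   max tasks/second the master and broker can deliver (hypothetical)
    """
    worker_capacity = workers * concurrency / request_latency
    return min(worker_capacity, dispatch_rate)


def busy_threads_per_worker(workers, concurrency, request_latency, dispatch_rate):
    """Average in-flight requests per worker, via Little's law (L = throughput * latency)."""
    rate = throughput(workers, concurrency, request_latency, dispatch_rate)
    return rate * request_latency / workers
```

With, for example, `concurrency=100`, `request_latency=0.5` and `dispatch_rate=400`, throughput doubles from 1 to 2 workers (200 to 400 tasks/s) and then stays at 400 however many workers are added, while the average number of busy threads per worker drops from 100 at 2 workers to about 33 at 6. That matches the shape of the Flower numbers, but the model cannot say where the real cap comes from.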

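```python
import time

from celery import group

from tasks import fetch_url  # hypothetical module name: wherever the fetch_url task is defined

urls = ["https://...", ..., "https://..."]  # full URL list elided

# One task signature per URL; num is a 1-based index passed along with each URL.
tasks = [fetch_url.s(num, url) for num, url in enumerate(urls, start=1)]
job = group(tasks)

start = time.time()
res = job.apply_async()  # publish every task to the broker (RabbitMQ)
res.join()               # block until all results are in (requires a result backend)
print(time.time() - start)
```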

Update: I have attached a graph of succeeded tasks vs. time for 1, 2, and so on up to 5 worker servers. As you can see, the task completion rate doubles going from 1 worker server to 2, but as I add more servers, the completion rate begins to level off.
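To put a number on "begins to level off", each succeeded-tasks-vs-time curve can be reduced to a single completion rate (for example, the slope of its roughly linear part) and compared against the 1-server rate. The helper below is a hypothetical sketch: you supply the measured rates, and it reports speedup and parallel efficiency, where 1.0 means perfect linear scaling.

```python
def scaling_report(rates):
    """Given {worker_servers: tasks_per_second}, return {n: (speedup, efficiency)}.

    speedup    = rate(n) / rate(1)
    efficiency = speedup / n   (1.0 means perfectly linear scaling)
    """
    base = rates[1]  # the 1-server rate is the reference point
    return {n: (r / base, r / base / n) for n, r in sorted(rates.items())}
```

A doubling from 1 to 2 servers shows up as an efficiency near 1.0 at `n=2`; a plateau after that shows up as efficiency falling roughly like `1/n`.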