I am working with SSIS 2014. I have several dozens of millions of rows coming from Attunity Oracle Source component, which its source query has 20 columns and it is time consuming. For some reason, I need to insert 2 columns of the same query in another table which has reference to the first table.

I would like to avoid a second calling to the query in another DataFlowTask, so I was thinking about creating a WAIT till x_01 finishes Script Component task, which will validate when the x_01 OleDB Destination finishes, for then proceed with inserting in the second table.

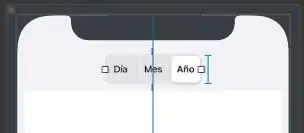

Kindly understand what I want to achieve here:

I was thinking about using a x_01_Finished Package Scope variable and changing its value when PostExecute event is fired from x_01, but unfortunately the PostExecute is not available at x_01 OleDB Destination level.

Question 1:

Is there a way to get the status of some other component, inside a Script Component code?

Something like the following:

public override void PreExecute() {

base.PreExecute();

int sec = 5; //number of seconds to check the status of x_01 Component

int ms = sec * 1000;

bool lbo_x01_Finished = false;

do {

System.Threading.Thread.Sleep(ms);

lbo_x01_Finished = IsComponent_Finished("x01"); //returns true when x01 component finished

} while(!lbo_x01_Finished);

}

Question 2:

Do you think the package performance will be highly affected using this tecnique?

UPDATE 1

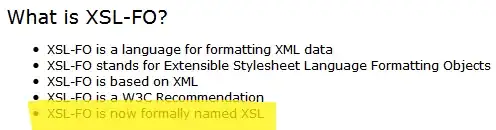

Already tried the Nick.McDermaid's suggestion with a really tiny amount of data. I created 2 temporary tables, one with the PK in the first column and the second table with a FK to this PK of the first table. Even with this small amount of data I got this error: The INSERT statement conflicted with the FOREIGN KEY constraint "FK_tmp_1_tmp_2". You can see the in the following screenshot that insert indeed is really fast, and in the second chunk of data, the x_02 object intended to insert before the first one (and it was not possible due to this FK).

So, my next idea is sleeping some miliseconds while reading just the first row before inserting on the second table. We will see if this can helps.