I've made a cellular automaton (Langton's ant, FYI) in VBA. At each step there is a call to Sleep(delay), where delay is a variable. I've also added DoEvents at the end of the display function to ensure that each step is shown on screen.

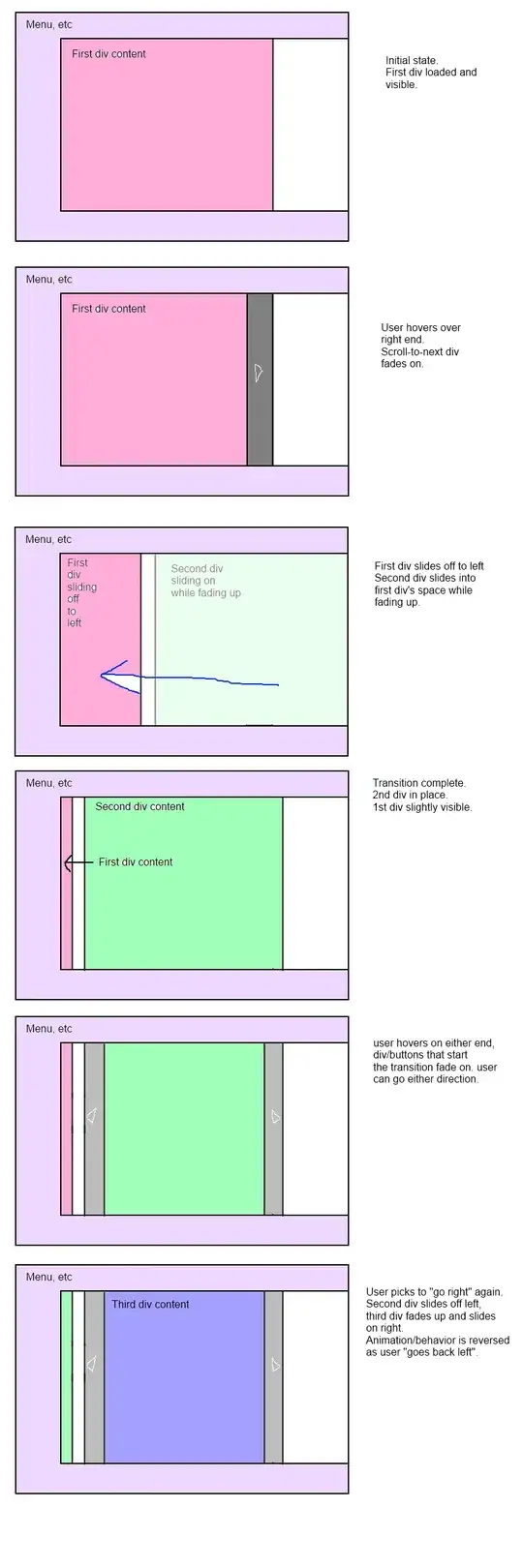

Using Timer, I can measure how long one step takes on average. The result is plotted in the graph (Y-axis: time per step, in ms; X-axis: delay, in ms).
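For reference, here is a minimal sketch of how the loop and the measurement are set up. It is simplified rather than my exact code: `StepAnt`, `DisplayGrid` and `AverageStepTimeMs` are placeholder names, and `Sleep` is assumed to be the Windows API function declared from kernel32.

```vb
Option Explicit

' Sleep is assumed to come from the Windows API (kernel32).
' PtrSafe is required on VBA7 / 64-bit Office.
#If VBA7 Then
    Private Declare PtrSafe Sub Sleep Lib "kernel32" (ByVal dwMilliseconds As Long)
#Else
    Private Declare Sub Sleep Lib "kernel32" (ByVal dwMilliseconds As Long)
#End If

' Placeholder: advance the automaton by one step.
Private Sub StepAnt()
End Sub

' Placeholder: redraw the grid, then let the UI process pending events.
Private Sub DisplayGrid()
    DoEvents
End Sub

' Returns the average wall-clock time per step, in milliseconds.
' Note: Timer counts seconds since midnight, so a run that crosses
' midnight would give a wrong result.
Function AverageStepTimeMs(ByVal delay As Long, ByVal nSteps As Long) As Double
    Dim t0 As Double
    Dim i As Long

    t0 = Timer
    For i = 1 To nSteps
        StepAnt
        DisplayGrid
        Sleep delay
    Next i

    AverageStepTimeMs = (Timer - t0) * 1000# / nSteps
End Function
```

Each point on the graph would then correspond to one call such as `AverageStepTimeMs(delay, 1000)` for a given value of `delay` (the step count of 1000 is only an example).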

Could you explain why it looks like that? In particular, why does it remain steady? IMO, I'm supposed to get (more or less) a straight line. I got these results without doing anything else on my computer during the whole process.

Thank you in advance for your help,