I am very new to AWS Lambda, Step Functions and Redshift, but I think I've pinpointed the problem I was asked to investigate.

The step function invokes a Node.js Lambda function that copies data from S3 into Redshift.

The relevant state definitions are:

"States": {

...

"CopyFiles": {

"Type": "Task",

"Resource": "ARN:activity:CopyFiles",

"ResultPath": "...",

"Retry": [

{

"ErrorEquals": ["Error"],

"MaxAttempts": 0

},

{

"ErrorEquals": [

"States.ALL"

],

"IntervalSeconds": 60,

"BackoffRate": 2.0,

"MaxAttempts": 3

}

],

"Catch": [

{

"ErrorEquals": [

"States.ALL"

],

"ResultPath": "$.errorPath",

"Next": "ErrorStateHandler"

}

],

"Next": "SuccessStep"

},

"SuccessStep": {

"Type": "Task",

"Resource": "ARN....",

"ResultPath": null,

"Retry": [

{

"ErrorEquals": ["Error"],

"MaxAttempts": 0

},

{

"ErrorEquals": [

"States.ALL"

],

"IntervalSeconds": 60,

"BackoffRate": 2.0,

"MaxAttempts": 3

}

],

"End": true

},
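For reference, this is how the two `Retry` entries behave. Step Functions evaluates retriers in order and uses the first whose `ErrorEquals` contains the error name; it then waits `IntervalSeconds × BackoffRate^(n-1)` seconds before retry n, for at most `MaxAttempts` retries (0 means never retry). Below is a minimal TypeScript model, assuming only the fields shown above. The `Retrier` interface and `retryDelays` function are hypothetical, and `States.ALL` is simplified to match every error name.

```typescript
// Hypothetical model of a Step Functions retrier, limited to the fields used above.
interface Retrier {
  ErrorEquals: string[];
  IntervalSeconds?: number; // ASL default: 1
  BackoffRate?: number; // ASL default: 2.0
  MaxAttempts?: number; // ASL default: 3; 0 means "never retry"
}

// Returns the wait (in seconds) before each retry of an error with the given
// name, using the first retrier that matches. An empty array means the error
// is not retried. Simplification: "States.ALL" matches every error name here.
function retryDelays(retriers: Retrier[], errorName: string): number[] {
  for (const r of retriers) {
    const matches = r.ErrorEquals.some(
      (e) => e === errorName || e === "States.ALL",
    );
    if (!matches) continue;
    const interval = r.IntervalSeconds ?? 1;
    const rate = r.BackoffRate ?? 2.0;
    const attempts = r.MaxAttempts ?? 3;
    const delays: number[] = [];
    for (let n = 0; n < attempts; n++) {
      delays.push(interval * Math.pow(rate, n));
    }
    return delays;
  }
  return []; // no retrier matched
}

// The Retry array used by both CopyFiles and SuccessStep.
const retry: Retrier[] = [
  { ErrorEquals: ["Error"], MaxAttempts: 0 },
  { ErrorEquals: ["States.ALL"], IntervalSeconds: 60, BackoffRate: 2.0, MaxAttempts: 3 },
];

console.log(JSON.stringify(retryDelays(retry, "Error"))); // []
console.log(JSON.stringify(retryDelays(retry, "States.Timeout"))); // [60,120,240]
```

So in CopyFiles, a failure reported under the name `Error` skips retries and goes straight to the `Catch` (`ErrorStateHandler`), while any other error is retried after 60, 120 and 240 seconds, adding up to 7 minutes of back-off before the `Catch` applies.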

The SQL statements run by the CopyFiles activity are wrapped in a transaction:

"BEGIN;

CREATE TABLE "tempTable_datetimestamp_here" (LIKE real_table);

COPY tempTable_datetimestamp_here from 's3://bucket/key...' IGNOREHEADER 1 COMPUPDATE OFF STATUPDATE OFF';

DELETE FROM toTable

USING tempTable_datetimestamp_here

WHERE toTable.index = tempTable_datetimestamp_here.index;

INSERT INTO toTable SELECT * FROM tempTable_datetimestamp_here;

END;
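The transaction above is a staging-table upsert: rows whose `index` already exists in `toTable` are deleted and replaced by the freshly copied rows. To illustrate that semantics only, here is an in-memory TypeScript model. `Row` and `deleteThenInsert` are hypothetical names, and no Redshift API is involved.

```typescript
// Illustrative in-memory model of the transaction's DELETE ... USING / INSERT
// pair. Not Redshift code: tables are plain arrays and rows are keyed on `index`.
interface Row {
  index: number;
  [column: string]: unknown;
}

function deleteThenInsert(toTable: Row[], tempTable: Row[]): Row[] {
  // Keys present in the staging table (what the USING ... WHERE join matches on).
  const staged: { [key: string]: boolean } = {};
  for (const row of tempTable) {
    staged[String(row.index)] = true;
  }
  // DELETE FROM toTable USING tempTable WHERE toTable.index = tempTable.index
  const kept = toTable.filter((row) => !staged[String(row.index)]);
  // INSERT INTO toTable SELECT * FROM tempTable
  return kept.concat(tempTable);
}

const before: Row[] = [
  { index: 1, value: "a" },
  { index: 2, value: "b" },
];
const staging: Row[] = [
  { index: 2, value: "B" },
  { index: 3, value: "c" },
];
console.log(JSON.stringify(deleteThenInsert(before, staging)));
// [{"index":1,"value":"a"},{"index":2,"value":"B"},{"index":3,"value":"c"}]
```

Every execution runs this delete-and-insert against the same `toTable`, so 50 executions started together means 50 transactions writing one table at once. Redshift protects concurrent writes to a table with write locks and serializable isolation, so those transactions may queue behind each other or fail with a serializable isolation error, whereas a single file has nothing to contend with.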

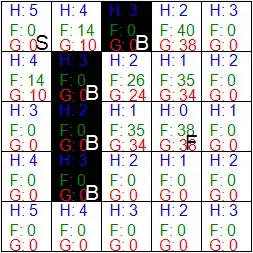

When I put through multiple files (50) at the same time, all the step function executions hang (they keep running until I abort them); see the screenshot above. If I put a single file through, it works fine.


This query, which lists the queries currently running in Redshift, no longer returns anything:

```sql
SELECT pid, TRIM(starttime) AS start,
       duration, TRIM(user_name) AS user,
       query AS querytxt
FROM stv_recents
WHERE status = 'Running';
```

However, the step functions are still showing as "Running".
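One possibility worth checking (not a confirmed diagnosis): `CopyFiles` uses an activity resource (`ARN:activity:CopyFiles`), not a Lambda ARN. For an activity, Step Functions hands a task token to whichever worker polls with GetActivityTask, and the Task state stays Running until that worker calls SendTaskSuccess or SendTaskFailure with the token, or until the state times out. Neither state above sets `TimeoutSeconds` or `HeartbeatSeconds`, so an execution whose token is never answered can stay Running long after Redshift has finished. The TypeScript sketch below shows the report-back pattern; the `ActivityApi` interface and `handleOneTask` function are hypothetical stand-ins for the AWS SDK calls, not the actual worker code.

```typescript
// Hypothetical wrapper around the three Step Functions activity calls
// (GetActivityTask, SendTaskSuccess, SendTaskFailure). In a real worker these
// would be backed by the AWS SDK; the names and shapes here are assumptions.
interface ActivityApi {
  getActivityTask(activityArn: string): Promise<{ taskToken?: string; input?: string }>;
  sendTaskSuccess(taskToken: string, output: string): Promise<void>;
  sendTaskFailure(taskToken: string, error: string, cause: string): Promise<void>;
}

// Polls for one task and always answers its token, success or failure,
// so the Task state can leave "Running".
async function handleOneTask(
  api: ActivityApi,
  activityArn: string,
  work: (input: unknown) => Promise<unknown>,
): Promise<void> {
  const task = await api.getActivityTask(activityArn);
  if (!task.taskToken) return; // the long poll ended with no work available
  try {
    const result = await work(JSON.parse(task.input ?? "{}"));
    await api.sendTaskSuccess(task.taskToken, JSON.stringify(result));
  } catch (err) {
    const e = err instanceof Error ? err : new Error(String(err));
    // A plain `new Error(...)` has name "Error", which the first CopyFiles
    // retrier matches (MaxAttempts 0), so it would go straight to the Catch.
    await api.sendTaskFailure(task.taskToken, e.name, e.message);
  }
}
```

A real worker would call `handleOneTask` in a loop. The key design point is that the `catch` branch reports failure instead of swallowing the error; any path that exits without answering the token leaves the execution Running.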

Can anyone show me what I need to do to get this working? Thanks, Tim