================= UPDATED answer

This can now be solved using the get_subdendrograms from dendextend.

# needed packages:

# install.packages(gplots)

# install.packages(viridis)

# install.packages(devtools)

# devtools::install_github('talgalili/dendextend') # dendextend from github

# define dendrogram object to play with:

dend <- iris[,-5] %>% dist %>% hclust %>% as.dendrogram %>% set("labels_to_character") %>% color_branches(k=5)

dend_list <- get_subdendrograms(dend, 5)

# Plotting the result

par(mfrow = c(2,3))

plot(dend, main = "Original dendrogram")

sapply(dend_list, plot)

This can also be used within a heatmap:

# plot a heatmap of only one of the sub dendrograms

par(mfrow = c(1,1))

library(gplots)

sub_dend <- dend_list[[1]] # get the sub dendrogram

# make sure of the size of the dend

nleaves(sub_dend)

length(order.dendrogram(sub_dend))

# get the subset of the data

subset_iris <- as.matrix(iris[order.dendrogram(sub_dend),-5])

# update the dendrogram's internal order so to not cause an error in heatmap.2

order.dendrogram(sub_dend) <- rank(order.dendrogram(sub_dend))

heatmap.2(subset_iris, Rowv = sub_dend, trace = "none", col = viridis::viridis(100))

================= OLDER answer

I think what can be helpful for you are these two functions:

The first one just iterates through all clusters and extracts substructure. It requires:

- the

dendrogram object from which we want to get the subdendrograms

- the clusters labels (e.g. returned by

cutree)

Returns a list of subdendrograms.

extractDendrograms <- function(dendr, clusters){

lapply(unique(clusters), function(clust.id){

getSubDendrogram(dendr, which(clusters==clust.id))

})

}

The second one performs a depth-first search to determine in which subtree the cluster exists and if it matches the full cluster returns it. Here, we use the assumption that all elements of a cluster are in one subtress. It requires:

- the dendrogram object

- positions of the elements in cluster

Returns a subdendrograms corresponding to the cluster of given elements.

getSubDendrogram<-function(dendr, my.clust){

if(all(unlist(dendr) %in% my.clust))

return(dendr)

if(any(unlist(dendr[[1]]) %in% my.clust ))

return(getSubDendrogram(dendr[[1]], my.clust))

else

return(getSubDendrogram(dendr[[2]], my.clust))

}

Using these two functions we can use the variables you have provided in the question and get the following output. (I think the line clusters <- cutree(dnd, k = 6) should be clusters <- cutree(hc, k = 6) )

my.sub.dendrograms <- extractDendrograms(dnd, clusters)

plotting all six elements from the list gives all subdendrograms

EDIT

As suggested in the comment, I add a function that as an input takes a dendrogram dend and the number of subtrees k, but it still uses the previously defined, recursive function getSubDendrogram:

prune_cutree_to_dendlist <- function(dend, k, order_clusters_as_data=FALSE) {

clusters <- cutree(dend, k, order_clusters_as_data)

lapply(unique(clusters), function(clust.id){

getSubDendrogram(dend, which(clusters==clust.id))

})

}

A test case for 5 substructures:

library(dendextend)

dend <- iris[,-5] %>% dist %>% hclust %>% as.dendrogram %>% set("labels_to_character") %>% color_branches(k=5)

subdend.list <- prune_cutree_to_dendlist(dend, 5)

#plotting

par(mfrow = c(2,3))

plot(dend, main = "original dend")

sapply(prunned_dends, plot)

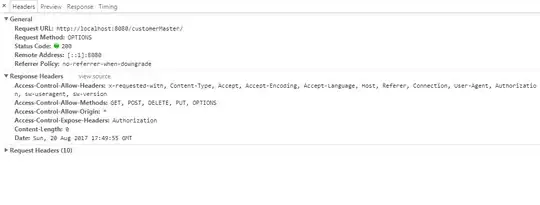

I have performed some benchmark using rbenchmark with the function suggested by Tal Galili (here named prune_cutree_to_dendlist2) and the results are quite promising for the DFS approach from the above:

library(rbenchmark)

benchmark(prune_cutree_to_dendlist(dend, 5),

prune_cutree_to_dendlist2(dend, 5), replications=5)

test replications elapsed relative user.self

1 prune_cutree_to_dendlist(dend, 5) 5 0.02 1 0.020

2 prune_cutree_to_dendlist2(dend, 5) 5 60.82 3041 60.643