I am using the Deep Neural Network toolbox developed by Masayuki Tanaka, available at the following link: https://www.mathworks.com/matlabcentral/fileexchange/42853-deep-neural-network.

I am trying to use a deep belief network (DBN) for function approximation, but the fit is poor. My script is below. Can anyone point out which part could be modified to improve the fitting result?

%% Environment Settings

clear all;

close all;

clc;

rng(42);

addpath DBNLib

%% Define function to be learned and generate test and training data

num_points = 1000;

lower_bound = -2*pi;

upper_bound = 2*pi;

X_all = (upper_bound-lower_bound).*rand(num_points, 1) + lower_bound;

% Target is cos(x) plus Gaussian noise (standard deviation 0.1)

Y_all = cos(X_all) + 0.1*randn(num_points, 1);

train_split = 0.8;

train_size = round(train_split*num_points); % integer count, used as an index below

X_train = X_all(1:train_size);

Y_train = Y_all(1:train_size);

X_test = X_all(train_size+1:end);

Y_test = Y_all(train_size+1:end);

% Plot train and test data

scatter(X_train, Y_train, 'g', 'filled'),hold on

scatter(X_test, Y_test, 'r', 'filled'), grid minor

drawnow;

%% Construct deep belief network model

nodes = [1 64 64 1];

dnn = randDBN( nodes , 'GBDBN' );

nrbm = numel(dnn.rbm);

opts.MaxIter = 200;

opts.BatchSize = train_size/8;

opts.Verbose = true;

opts.StepRatio = 0.01;

opts.DropOutRate = 0.5;

opts.Object = 'Squares';

% opts.Layer = nrbm-1;

dnn = pretrainDBN(dnn, X_train, opts);

dnn = SetLinearMapping(dnn, X_train, Y_train);

opts.Layer = 0;

dnn = trainDBN(dnn, X_train, Y_train, opts);

rmse = CalcRmse(dnn, X_train, Y_train);

rmse

estimate = v2h( dnn, X_all);

scatter(X_all, estimate, 'b', 'filled')

legend('train', 'test', 'DBN Fit')

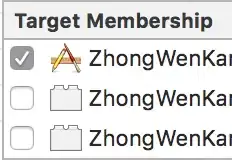

The DBN learning result: