I am trying to run a Spark job on a Google Cloud Dataproc cluster, but I get the following error:

```
Exception in thread "main" java.lang.RuntimeException: java.lang.RuntimeException: class org.apache.hadoop.security.JniBasedUnixGroupsMapping not org.apache.hadoop.security.GroupMappingServiceProvider
    at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2330)
    at org.apache.hadoop.security.Groups.<init>(Groups.java:108)
    at org.apache.hadoop.security.Groups.<init>(Groups.java:102)
    at org.apache.hadoop.security.Groups.getUserToGroupsMappingService(Groups.java:450)
    at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:310)
    at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:277)
    at org.apache.hadoop.security.UserGroupInformation.loginUserFromSubject(UserGroupInformation.java:833)
    at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:803)
    at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:676)
    at org.apache.spark.util.Utils$$anonfun$getCurrentUserName$1.apply(Utils.scala:2430)
    at org.apache.spark.util.Utils$$anonfun$getCurrentUserName$1.apply(Utils.scala:2430)
    at scala.Option.getOrElse(Option.scala:121)
    at org.apache.spark.util.Utils$.getCurrentUserName(Utils.scala:2430)
    at org.apache.spark.SparkContext.<init>(SparkContext.scala:295)
    at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:58)
    at com.my.package.spark.SparkModule.provideJavaSparkContext(SparkModule.java:59)
    at com.my.package.spark.SparkModule$$ModuleAdapter$ProvideJavaSparkContextProvidesAdapter.get(SparkModule$$ModuleAdapter.java:140)
    at com.my.package.spark.SparkModule$$ModuleAdapter$ProvideJavaSparkContextProvidesAdapter.get(SparkModule$$ModuleAdapter.java:101)
    at dagger.internal.Linker$SingletonBinding.get(Linker.java:364)
    at spark.Main$$InjectAdapter.get(Main$$InjectAdapter.java:65)
    at spark.Main$$InjectAdapter.get(Main$$InjectAdapter.java:23)
    at dagger.ObjectGraph$DaggerObjectGraph.get(ObjectGraph.java:272)
    at spark.Main.main(Main.java:45)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:755)
    at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:180)
    at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:205)
    at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:119)
    at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
Caused by: java.lang.RuntimeException: class org.apache.hadoop.security.JniBasedUnixGroupsMapping not org.apache.hadoop.security.GroupMappingServiceProvider
    at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2324)
    ... 31 more
```

Dataproc versions: 1.1.51 and 1.2.15

Job configuration:

- Region: global
- Cluster: my-cluster
- Job type: Spark
- Jar files: gs://bucket/jars/spark-job.jar
- Main class or jar: spark.Main
- Arguments: (none)
- Properties:
  - spark.driver.extraClassPath: /path/to/google-api-client-1.20.0.jar
  - spark.driver.userClassPathFirst: true

The job runs without problems when I submit it this way from the command line:

```
spark-submit \
    --conf "spark.driver.extraClassPath=/path/to/google-api-client-1.20.0.jar" \
    --conf "spark.driver.userClassPathFirst=true" \
    --class spark.Main \
    /path/to/spark-job.jar
```

However, the Dataproc UI/API does not let you specify both the main class and the main jar, so the submitted command looks like this instead:

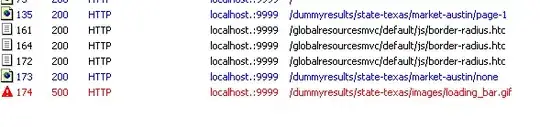

```
spark-submit \
    --conf spark.driver.extraClassPath=/path/to/google-api-client-1.20.0.jar \
    --conf spark.driver.userClassPathFirst=true \
    --class spark.Main \
    --jars /tmp/1f4d5289-37af-4311-9ccc-5eee34acaf62/spark-job.jar \
    /usr/lib/hadoop/hadoop-common.jar
```

I can't figure out whether the problem is the `extraClassPath` setting, or whether `spark-job.jar` and `hadoop-common.jar` are somehow conflicting.
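`Configuration.getClass` raises "class X not Y" when the class it loaded is not assignable to the expected interface. This can happen when the implementation and the interface come from different jars or class loaders. One way to narrow down which jar is involved is to print, at the very start of `spark.Main.main` (before the `JavaSparkContext` is created), which class loader loaded each of the two classes named in the error and which jar it came from. This is a minimal sketch under that assumption: `WhichJar` and `describe` are hypothetical helpers, not part of Spark or Hadoop.

```java
/**
 * Hypothetical diagnostic helper (not part of Spark or Hadoop): reports which
 * class loader loaded a class and, when available, the jar it came from.
 */
public class WhichJar {

    static String describe(String className) {
        try {
            // Resolve through the thread's context class loader; initialize=false
            // avoids running static initializers (e.g. native library loading).
            ClassLoader loader = Thread.currentThread().getContextClassLoader();
            Class<?> cls = Class.forName(className, false, loader);
            java.security.CodeSource source = cls.getProtectionDomain().getCodeSource();
            // Bootstrap classes such as java.lang.String have no CodeSource.
            String location = (source == null || source.getLocation() == null)
                    ? "<bootstrap/unknown>"
                    : source.getLocation().toString();
            return className + " loaded by " + cls.getClassLoader() + " from " + location;
        } catch (ClassNotFoundException e) {
            return className + " not found";
        } catch (LinkageError e) {
            return className + " failed to load: " + e;
        }
    }

    public static void main(String[] args) {
        // The two classes named in the "class X not Y" error.
        String[] names = {
            "org.apache.hadoop.security.GroupMappingServiceProvider",
            "org.apache.hadoop.security.JniBasedUnixGroupsMapping"
        };
        for (String name : names) {
            System.out.println(describe(name));
        }
    }
}
```

Running the same check with and without `spark.driver.userClassPathFirst=true` and comparing the reported locations would show whether the Hadoop security classes are being picked up from `spark-job.jar`, from `hadoop-common.jar`, or from the cluster's own Hadoop installation.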