I am running Pentaho Data Integration (PDI) on an Ubuntu server, with Jenkins orchestrating multiple jobs that run at different times. Sometimes a PDI job never ends: I get no exit code, the log freezes in the middle of the process without any exception, and when I check the server's memory, it is fully allocated. This raises the following questions:

- Shouldn't Pentaho throw a Java `OutOfMemoryError` when the server's memory is fully allocated?

- Why is the Pentaho biserver-ee running when none of my processes starts the server? I only run jobs with kitchen.sh.

- Why is there always a persistent Pentaho process running (see process #2 in the image)? It must be a Pentaho process, since its Java parameters match my spoon.sh configuration, but should it still be running after all the jobs have finished?

- Does Spoon/Kettle/PDI/Pentaho start a persistent process to allocate the memory specified with the Xms parameter?

- Why does this persistent Pentaho process keep one core at 100% all the time?

This makes no sense, as nothing is currently running. How can I identify the problem when the logs simply stop printing? Right now I have no idea where to start.
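One way to start narrowing this down is to map each Java process to its command line and see which of its threads have used the most CPU. The sketch below is a minimal Python example, assuming a Linux `/proc` filesystem and permission to read the JVMs' entries (run it as the same user or as root). It lists every process whose command line contains `java` (which should include Jenkins, the BI server and any kitchen.sh run), its resident memory, and its busiest threads. Thread IDs are also printed in hex because a HotSpot thread dump taken with the JDK's `jstack <pid>` labels native threads as `nid=0x<hex>`, so a hot thread can be matched to a Java stack trace.

```python
from pathlib import Path

# Assumption: Linux /proc layout; run as the JVMs' user (or root) to read their entries.
PROC = Path("/proc")


def read_cmdline(pid_dir: Path) -> str:
    """Return the process command line with NUL separators replaced by spaces."""
    try:
        raw = (pid_dir / "cmdline").read_bytes()
    except OSError:
        return ""
    return raw.replace(b"\0", b" ").decode(errors="replace").strip()


def rss_mib(pid_dir: Path) -> float:
    """Resident set size in MiB, from the VmRSS line of /proc/<pid>/status."""
    try:
        for line in (pid_dir / "status").read_text().splitlines():
            if line.startswith("VmRSS:"):
                return int(line.split()[1]) / 1024  # VmRSS is reported in kB
    except OSError:
        pass
    return 0.0


def thread_cpu_ticks(stat_line: str) -> int:
    """Return utime + stime (in clock ticks) from a /proc/.../stat line."""
    # The comm field is wrapped in parentheses and may contain spaces,
    # so start splitting after the last ')': index 0 is then 'state' (field 3).
    fields = stat_line[stat_line.rindex(")") + 2:].split()
    # utime is field 14 (index 11 here), stime is field 15 (index 12 here).
    return int(fields[11]) + int(fields[12])


def hottest_threads(pid: int, top: int = 5):
    """Return (tid, hex_tid, cpu_ticks) for the busiest threads of a process.

    Ticks are cumulative since each thread started, not a live percentage.
    """
    try:
        tasks = list((PROC / str(pid) / "task").iterdir())
    except OSError:
        return []  # process gone or not readable
    rows = []
    for task in tasks:
        try:
            ticks = thread_cpu_ticks((task / "stat").read_text())
        except (OSError, ValueError, IndexError):
            continue  # thread exited or stat line unreadable
        tid = int(task.name)
        rows.append((tid, hex(tid), ticks))
    return sorted(rows, key=lambda row: row[2], reverse=True)[:top]


def java_processes():
    """Yield (pid, rss_mib, cmdline) for every process whose command line mentions java."""
    for pid_dir in PROC.iterdir():
        if not pid_dir.name.isdigit():
            continue
        cmd = read_cmdline(pid_dir)
        if "java" in cmd:
            yield int(pid_dir.name), rss_mib(pid_dir), cmd


if __name__ == "__main__":
    for pid, rss, cmd in java_processes():
        print(f"PID {pid}  RSS {rss:,.0f} MiB  {cmd[:120]}")
        for tid, hex_tid, ticks in hottest_threads(pid):
            print(f"    thread {tid} ({hex_tid})  cpu ticks {ticks}")
```

Because the tick counts are cumulative, running the script twice a few seconds apart and comparing the numbers shows which thread is busy right now rather than which one has been busy overall.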

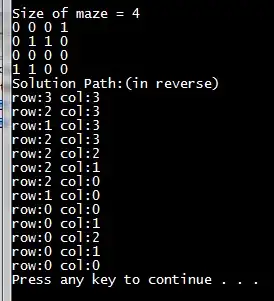

The image above shows the three processes consuming memory on my server (Jenkins, Pentaho BI Server and Spoon). My server, Java and Pentaho setup are as follows.

Server specs (a VMware virtual machine):

- OS: Ubuntu 14.04.4

- RAM: 12GB

- Cores: 4

My Java version is "1.8.0_101"

I changed the memory parameters in spoon.sh as follows:

- Xms: 1024m

- Xmx: 7GB

- XX:MaxPermSize=2GB
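To check the memory budget, the sketch below converts the heap settings above into bytes and counts how many JVMs with that maximum heap fit into the server's RAM. It assumes, as the matching Java parameters of process #2 suggest, that every kitchen.sh run starts its own JVM with the spoon.sh settings. The `reserved` argument is a hypothetical allowance for Jenkins and the BI server, whose footprints are not listed here. It counts heap only and leaves out `MaxPermSize`, which Java 8 ignores because PermGen was replaced by Metaspace. The 7GB value is written as `7g`, the single-letter suffix form that `-Xmx` accepts.

```python
import re

# Multipliers for the size suffixes used with -Xms/-Xmx (matched case-insensitively).
UNITS = {"": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def jvm_size_bytes(value: str) -> int:
    """Parse a JVM-style size such as '1024m' or '7g' into bytes."""
    match = re.fullmatch(r"(\d+)([kmg]?)", value.strip().lower())
    if not match:
        raise ValueError(f"not a JVM size: {value!r}")
    return int(match.group(1)) * UNITS[match.group(2)]


def concurrent_jvm_budget(ram: str, xmx: str, reserved: str = "0") -> int:
    """How many JVMs whose heap can grow to `xmx` fit in `ram` minus `reserved`.

    Heap only: metaspace, thread stacks and other native memory come on top,
    so the real number is lower.
    """
    return (jvm_size_bytes(ram) - jvm_size_bytes(reserved)) // jvm_size_bytes(xmx)


if __name__ == "__main__":
    print(concurrent_jvm_budget("12g", "7g"))
```

With the values above, `concurrent_jvm_budget("12g", "7g")` returns 1, so two overlapping jobs could together grow their heaps to 14 GB on a 12 GB machine, before counting Jenkins or the BI server.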