I would use the following approach to try to find the four lines described in the question.

1. Read the image, and convert it into grayscale

import cv2

import numpy as np

rgb_img = cv2.imread('pipe.jpg')  # OpenCV loads color images in BGR channel order

gray_img = cv2.cvtColor(rgb_img, cv2.COLOR_BGR2GRAY)

height, width = gray_img.shape
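For reference, `cv2.COLOR_BGR2GRAY` computes a weighted sum of the three channels (Y = 0.299 R + 0.587 G + 0.114 B, as given in OpenCV's color-conversion documentation). Below is a NumPy-only sketch of the same formula, assuming a `uint8` BGR array like the one `cv2.imread` returns. `bgr_to_gray` is a hypothetical helper for illustration, and OpenCV's internal fixed-point rounding may differ from it by one gray level on some pixels:

```python
import numpy as np

def bgr_to_gray(bgr):
    """Hypothetical helper: weighted BGR -> gray using the documented luma weights."""
    # OpenCV stores pixels as B, G, R, so channel 0 is blue and channel 2 is red.
    b, g, r = (bgr[..., i].astype(np.float64) for i in range(3))
    gray = 0.299 * r + 0.587 * g + 0.114 * b
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)

# One row of three made-up pixels: pure blue, pure red, white (BGR order).
pixels = np.array([[[255, 0, 0], [0, 0, 255], [255, 255, 255]]], dtype=np.uint8)
print(bgr_to_gray(pixels))
```

The channel order matters: passing an RGB array to this (or to `cv2.COLOR_BGR2GRAY`) would swap the red and blue weights.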

2. Add some white padding to the top of the image (just to have some extra background)

white_padding = np.full((50, width, 3), 255, dtype=np.uint8)  # 50 white rows, kept 8-bit

rgb_img = np.vstack((white_padding, rgb_img))
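One detail worth checking in this step is the dtype of the padding. `np.zeros` defaults to `float64`, and stacking a `float64` array onto a `uint8` image promotes the whole result to `float64`, while functions later in the pipeline such as `cv2.Canny` expect 8-bit input. The sketch below uses a tiny all-black array as a stand-in for the real image:

```python
import numpy as np

img = np.zeros((4, 6, 3), dtype=np.uint8)   # stand-in for a small BGR image

# np.zeros defaults to float64, so stacking it onto a uint8 image upcasts the result.
upcast = np.vstack((np.zeros((2, 6, 3)), img))

# Creating the padding as uint8 (here filled with white) keeps the stacked image 8-bit.
padding = np.full((2, 6, 3), 255, dtype=np.uint8)
padded = np.vstack((padding, img))

print(upcast.dtype, padded.dtype, padded.shape)
```

`np.vstack` is used rather than `np.row_stack`, which is only an alias of `vstack` and is deprecated in NumPy 2.0.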

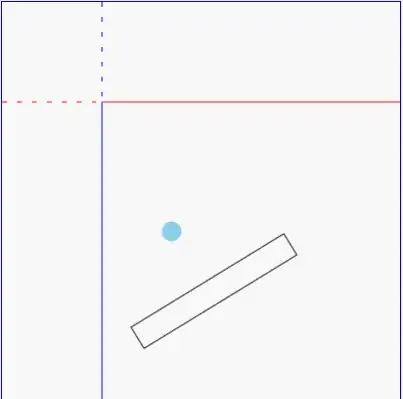

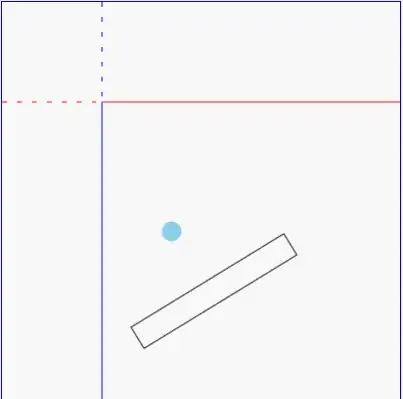

Resultant image -  3. Invert the gray scale image and apply black padding to the top

3. Invert the grayscale image, binarize it, and add black padding to the top

gray_img = 255 - gray_img

gray_img[gray_img > 100] = 255

gray_img[gray_img <= 100] = 0

black_padding = np.zeros((50, width), dtype=np.uint8)  # uint8 so that Canny accepts the result later

gray_img = np.vstack((black_padding, gray_img))
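The inversion plus the two masked assignments amount to a binary threshold at 100: after inverting, anything brighter than 100 becomes white and everything else black. A small NumPy sketch on a made-up 2×2 image shows which values survive. For comparison, `cv2.threshold(gray_img, 100, 255, cv2.THRESH_BINARY)` applies the same "strictly greater than the threshold" rule and returns a `(retval, image)` tuple:

```python
import numpy as np

gray = np.array([[0, 200], [155, 156]], dtype=np.uint8)   # made-up pixel values

inverted = 255 - gray                                      # dark pipe pixels become bright
binary = np.where(inverted > 100, 255, 0).astype(np.uint8)  # > 100 -> 255, otherwise 0

print(inverted)
print(binary)
```

Note that a value of exactly 100 after inversion ends up black, matching the `<= 100` branch above.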

4. Use morphological closing to fill the holes in the image -

kernel = np.ones((30, 30), np.uint8)

closing = cv2.morphologyEx(gray_img, cv2.MORPH_CLOSE, kernel)
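Closing is a dilation followed by an erosion with the same structuring element, so dark gaps smaller than the kernel are filled while the outer shape is roughly preserved. Here is a NumPy-only sketch with a square k×k kernel; it assumes an odd k (the answer uses 30, which is even), NumPy 1.20 or newer for `sliding_window_view`, and the helper names are hypothetical. Padding with background for dilation and with foreground for erosion is, as far as I understand, similar to OpenCV's default border handling for morphology:

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _window_reduce(img, k, pad_value, reducer):
    """Apply `reducer` (max or min) over every k x k neighbourhood; k is assumed odd."""
    r = k // 2
    padded = np.pad(img, r, mode="constant", constant_values=pad_value)
    windows = sliding_window_view(padded, (k, k))   # shape (H, W, k, k)
    return reducer(windows, axis=(-2, -1))

def dilate(img, k):
    return _window_reduce(img, k, 0, np.max)      # pad with background

def erode(img, k):
    return _window_reduce(img, k, 255, np.min)    # pad with foreground

def close(img, k):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(img, k), k)

# Synthetic binary image: a white square with a one-pixel hole in the middle.
square = np.zeros((11, 11), dtype=np.uint8)
square[2:9, 2:9] = 255
holed = square.copy()
holed[5, 5] = 0

closed = close(holed, 3)
print(np.array_equal(closed, square))
```

With a 3×3 kernel the one-pixel hole disappears and the square keeps its original extent; the much larger 30×30 kernel in the answer fills correspondingly larger gaps.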

5. Find edges in the image using Canny edge detection -

edges = cv2.Canny(closing, 100, 200)
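The two numbers passed to `cv2.Canny` are the lower and upper hysteresis thresholds: pixels whose gradient magnitude exceeds the upper threshold are accepted as edges, those below the lower threshold are rejected, and those in between are kept only if they connect to an accepted pixel. The sketch below shows only that hysteresis stage on made-up gradient magnitudes. It skips Canny's smoothing, gradient and non-maximum-suppression steps, and its inclusive `>=` comparisons are a simplification rather than OpenCV's exact rule:

```python
from collections import deque

import numpy as np

def hysteresis(magnitude, low, high):
    """Keep strong pixels, plus weak pixels 8-connected (directly or in a chain) to a strong one."""
    strong = magnitude >= high
    weak = (magnitude >= low) & ~strong
    keep = strong.copy()
    queue = deque(zip(*np.nonzero(strong)))   # breadth-first search from every strong pixel
    rows, cols = magnitude.shape
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < rows and 0 <= nx < cols and weak[ny, nx] and not keep[ny, nx]:
                    keep[ny, nx] = True
                    queue.append((ny, nx))
    return keep

# Made-up gradient magnitudes for one row of pixels, using the same 100/200 thresholds.
magnitude = np.array([[250, 150, 150, 0, 150]])
print(hysteresis(magnitude, 100, 200))
```

The first two weak pixels are kept because they chain back to the strong pixel at 250; the last one is isolated by the zero and is dropped.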

6. Now we can use OpenCV's HoughLinesP function to find line segments in the image -

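minLineLength = 50

maxLineGap = 100

lines = cv2.HoughLinesP(edges, 1, np.pi / 180, 50, minLineLength=minLineLength, maxLineGap=maxLineGap)

all_lines = lines.reshape(-1, 4).tolist() if lines is not None else []  # (N, 1, 4) array -> list of [x1, y1, x2, y2]

for x1, y1, x2, y2 in all_lines:
    cv2.line(rgb_img, (x1, y1), (x2, y2), (0, 0, 255), 2)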
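`HoughLinesP` is a probabilistic variant of the Hough transform. The underlying idea, every edge pixel voting for all lines rho = x cos(theta) + y sin(theta) that pass through it, is easiest to see in the standard (non-probabilistic) transform. The NumPy sketch below uses 1-pixel and 1-degree bins to mirror the `1, np.pi / 180` arguments above; it only builds the accumulator and finds its peak, it does not return segment endpoints the way `HoughLinesP` does, and `hough_accumulator` is a hypothetical name:

```python
import numpy as np

def hough_accumulator(edges):
    """Standard Hough voting over (rho, theta) with 1-pixel / 1-degree bins."""
    h, w = edges.shape
    diag = int(np.ceil(np.hypot(h, w)))          # bound on |rho|
    thetas = np.deg2rad(np.arange(180))          # theta bins over [0, 180) degrees
    rhos = np.arange(-diag, diag + 1)            # rho bins from -diag to +diag
    acc = np.zeros((len(rhos), len(thetas)), dtype=np.int64)
    cols = np.arange(len(thetas))
    cos_t, sin_t = np.cos(thetas), np.sin(thetas)
    ys, xs = np.nonzero(edges)
    for x, y in zip(xs, ys):
        rho = x * cos_t + y * sin_t              # one rho per theta for this pixel
        acc[np.rint(rho).astype(int) + diag, cols] += 1
    return acc, rhos, thetas

# Synthetic edge map: a horizontal segment along row 5 of a 20x20 image.
edges = np.zeros((20, 20), dtype=np.uint8)
edges[5, :] = 255
acc, rhos, thetas = hough_accumulator(edges)
r_i, t_i = np.unravel_index(np.argmax(acc), acc.shape)
print(rhos[r_i], np.rad2deg(thetas[t_i]), acc[r_i, t_i])
```

All 20 pixels vote for rho = 5 at (or within a degree of) theta = 90 degrees, i.e. the horizontal line y = 5. The `threshold=50` argument in the call above is the minimum number of such votes a line needs before it is reported.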

7. Now we have to find the two rightmost horizontal lines and the two bottommost vertical lines. For the horizontal lines, we sort the lines by (x2, x1) in descending order. The first line in this sorted list will be the rightmost vertical line; skipping it, the next two lines will be the rightmost horizontal lines.

all_lines_x_sorted = sorted(all_lines, key=lambda k: (-k[2], -k[0]))

for x1, y1, x2, y2 in all_lines_x_sorted[1:3]:
    cv2.line(rgb_img, (x1, y1), (x2, y2), (0, 0, 255), 2)
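To see what the (x2, x1) sort does, here is the same key applied to a few made-up segments in `(x1, y1, x2, y2)` form (the coordinates are invented, not taken from the question's image). Like the step above, it assumes the rightmost vertical line is the segment whose right end lies furthest to the right:

```python
# Made-up segments in (x1, y1, x2, y2) form.
segments = [
    (0, 60, 80, 60),    # horizontal, right end at x = 80
    (90, 0, 90, 80),    # vertical, furthest right
    (10, 30, 40, 30),   # short horizontal further left
    (0, 20, 85, 20),    # horizontal, right end at x = 85
]

# Descending by x2, ties broken by descending x1 (same key as all_lines_x_sorted).
by_right_end = sorted(segments, key=lambda s: (-s[2], -s[0]))
rightmost_vertical = by_right_end[0]
rightmost_horizontals = by_right_end[1:3]

print(rightmost_vertical)
print(rightmost_horizontals)
```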

8. Similarly, the lines can be sorted by the y1 coordinate in descending order, and the first two lines in the sorted list will be the bottommost vertical lines.

all_lines_y_sorted = sorted(all_lines, key=lambda k: -k[1])

for x1, y1, x2, y2 in all_lines_y_sorted[:2]:
    cv2.line(rgb_img, (x1, y1), (x2, y2), (0, 0, 255), 2)

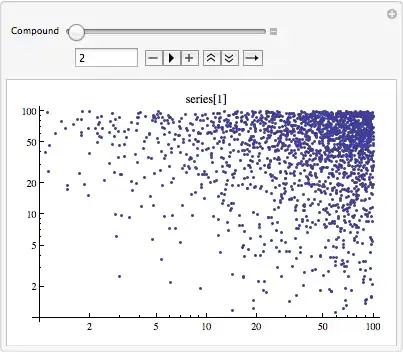
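Sorting by a single endpoint coordinate relies on the segments being laid out as they are in this particular image. A possibly more robust variant (a suggestion on top of the steps above, not something they do) is to classify each segment as horizontal or vertical from its angle first, then pick the extremes within each group. The 10-degree tolerance and the helper names are assumptions; and since I don't believe `HoughLinesP` documents which endpoint comes first, the sketch compares both endpoints with `max`:

```python
import math

def orientation(seg, tol_deg=10.0):
    """Hypothetical helper: label a segment 'h', 'v', or None from its angle."""
    x1, y1, x2, y2 = seg
    angle = abs(math.degrees(math.atan2(y2 - y1, x2 - x1))) % 180   # in [0, 180)
    if angle <= tol_deg or angle >= 180 - tol_deg:
        return "h"
    if abs(angle - 90) <= tol_deg:
        return "v"
    return None

def pick_lines(segments):
    """Hypothetical helper: two rightmost horizontal and two bottommost vertical segments."""
    horizontal = [s for s in segments if orientation(s) == "h"]
    vertical = [s for s in segments if orientation(s) == "v"]
    rightmost_h = sorted(horizontal, key=lambda s: -max(s[0], s[2]))[:2]
    bottommost_v = sorted(vertical, key=lambda s: -max(s[1], s[3]))[:2]
    return rightmost_h, bottommost_v

# Made-up segments; the last one lists its lower endpoint first.
segments = [
    (0, 60, 80, 60), (90, 0, 90, 80), (10, 30, 40, 30),
    (0, 20, 85, 20), (50, 95, 50, 40),
]
rightmost_h, bottommost_v = pick_lines(segments)
print(rightmost_h)
print(bottommost_v)
```

Image y coordinates grow downwards, so "bottommost" means the largest y, which is why the key negates `max(y1, y2)`.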

9. Image with both lines -

final_lines = all_lines_x_sorted[1:3] + all_lines_y_sorted[:2]

These four lines should help you finish the rest of your task.